Related: SeaClear, Coordinate Frames

I used this concept to determine the position of the underwater robot in a pool while taking the refraction of the water into account.

With this, I converted 2D pixel coordinates into 3D world points, accounting for light refraction at the air-water interface.

I assumed the following frames:

- World Frame: Origin at ArUco marker, Z pointing up (the water surface is the plane z = 0)

- Camera Frame: Origin at camera optical center

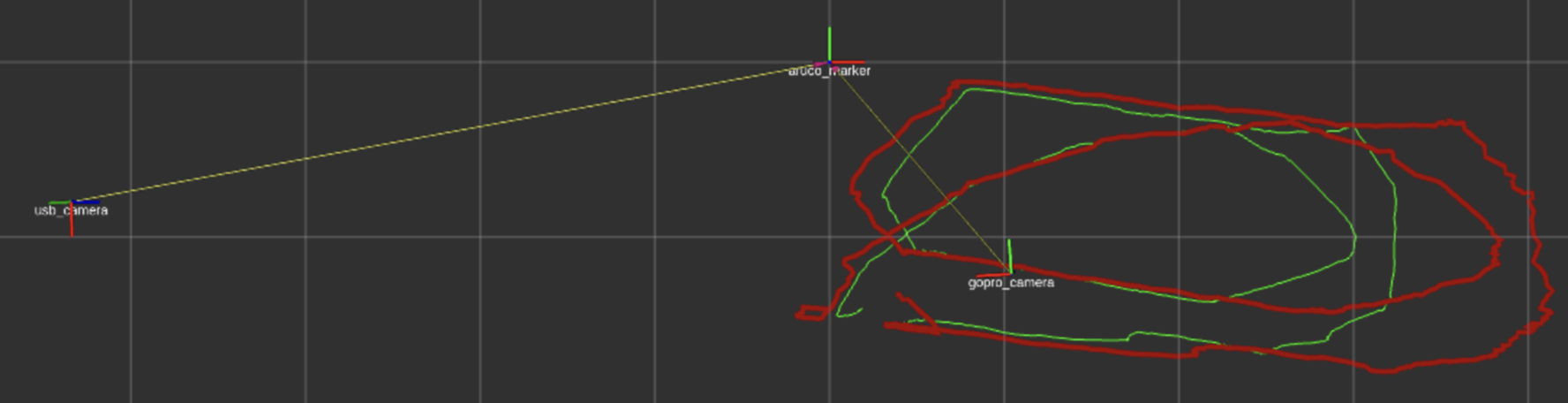

I still get some nasty errors, likely due to the distortion parameters. Since I implemented the refraction through the water, though, I could definitely see improvements in the distances (again, especially around the edges of the FOV).

Basically, this is what happens: when I get to the edge of the FOV in the UsbCamera frame, I get some nasty drift (you can see it in the plot image). I strongly suspect this is a problem with the setup (I will make a page on that shortly: how to ensure a good setup when working with multiple cameras) and also with the poor quality of the ArduCam. Even though I calibrated the distortion coefficients to many decimal places, I still get that drift.

Convert Pixel to normalized camera coordinates

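```python
import cv2
import numpy as np

pts = np.array([[[pixel_x, pixel_y]]], dtype=np.float64)  # shape (1, 1, 2), as cv2 expects
x_n, y_n = cv2.undistortPoints(pts, K, dist_coeffs)[0, 0]  # undistorted, normalized coords

dC = np.array([x_n, y_n, 1.0], dtype=np.float64)  # ray direction in camera frame (not unit)
d_air_W = camera_orientation @ dC  # same direction expressed in the world frame
o_W = camera_center  # ray origin: camera center in world coordinates
```

- Take the raw pixel coordinates (pixel_x, pixel_y).

- Remove lens distortion with `cv2.undistortPoints`. It performs the inverse of the distortion model and, since no new projection matrix is passed, also removes K, so it returns normalized coordinates.

- The intrinsic matrix K maps 3D camera points to pixels:

$$
Z \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = K \begin{bmatrix} X \\ Y \\ Z \end{bmatrix},
\qquad
K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}
$$

- (x_n, y_n, 1) = (X/Z, Y/Z, 1) represents where the ray through that pixel intersects the Z = 1 plane in camera coordinates.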

- `d_air_W` is the direction vector of the ray in world coordinates, starting at the camera center (`o_W`) and going towards the pixel, before refraction.

- `camera_orientation` is the rotation matrix R from the camera frame to the world frame.

- `camera_center` is the camera position in world coordinates, from the ArUco detection. I got it by using Rodrigues' rotation formula.
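A minimal sketch of how `camera_orientation` and `camera_center` can be obtained from an ArUco pose, assuming `rvec`/`tvec` follow the OpenCV convention of mapping marker (world) points into the camera frame, X_C = R X_W + t. Rodrigues' formula is written out in NumPy here; `cv2.Rodrigues(rvec)` returns the same matrix. The helper `camera_pose_from_marker` is hypothetical, and this is my reading of the derivation rather than the exact code. The camera center follows from setting X_C = 0 (the optical center), which gives X_W = -Rᵀt.

```python
import numpy as np


def rodrigues(rvec):
    """Rotation vector (axis * angle) -> 3x3 rotation matrix via Rodrigues' formula."""
    rvec = np.asarray(rvec, dtype=np.float64).reshape(3)
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)  # no rotation
    k = rvec / theta  # unit rotation axis
    S = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])  # skew-symmetric cross-product matrix of k
    return np.eye(3) + np.sin(theta) * S + (1.0 - np.cos(theta)) * (S @ S)


def camera_pose_from_marker(rvec, tvec):
    """Hypothetical helper: camera orientation (camera -> world) and center in the marker frame.

    Assumes OpenCV's convention X_C = R @ X_W + t (marker/world -> camera).
    """
    R = rodrigues(rvec)  # world (marker) -> camera
    t = np.asarray(tvec, dtype=np.float64).reshape(3)
    camera_orientation = R.T  # camera -> world (inverse of a rotation is its transpose)
    camera_center = -R.T @ t  # solve X_C = 0 for X_W
    return camera_orientation, camera_center
```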

Camera coordinate system:

```
  Z (forward, into scene)
  ^
  |
  |     • (x_n, y_n, 1)   <-- point on image plane
  |    /
  |   /
  |  /
  o───────→ X (right)
 /
↓
Y (down)
```
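To see what `cv2.undistortPoints` returns, here is a minimal sketch for the special case of zero distortion, where it reduces to applying K⁻¹ to the homogeneous pixel. The helper `pixel_to_normalized` and the K values are hypothetical; with real `dist_coeffs`, OpenCV additionally inverts the distortion model, which this sketch does not do.

```python
import numpy as np


def pixel_to_normalized(pixel_x, pixel_y, K):
    """Zero-distortion pixel -> (x_n, y_n): where the pixel's ray meets the Z = 1 plane."""
    uv1 = np.array([pixel_x, pixel_y, 1.0], dtype=np.float64)
    x_n, y_n, w = np.linalg.solve(K, uv1)  # K^-1 @ [u, v, 1]; w is 1 for a standard K
    return x_n / w, y_n / w


# Hypothetical intrinsics: f_x = f_y = 800 px, principal point (320, 240)
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
x_n, y_n = pixel_to_normalized(400.0, 240.0, K)
```

The principal point (c_x, c_y) maps to (0, 0), i.e. the optical axis; a pixel 80 px to the right of it with f_x = 800 maps to x_n = 0.1.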

Intersect with water surface (z=0)

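```python
import rospy

denom = d_air_W[2]  # z component of the ray direction
if abs(denom) < 1e-9:  # ray parallel to the surface: no intersection
    rospy.logwarn("Ray is parallel to the water surface")
    return None

s0 = (0.0 - o_W[2]) / denom
if s0 <= 0:
    rospy.logwarn(f"Ray does not intersect water surface, or camera is below water surface, camera_z = {o_W[2]}")
    return None

I0_W = o_W + s0 * d_air_W  # intersection point on the water surface
```

- `denom` is the z component of the ray direction. If it is (close to) zero, the ray is parallel to the surface and never reaches it.

- `s0` tells me how far I need to go along the ray to intersect the water surface plane z = 0. It is measured in multiples of `d_air_W`, which is not unit length, so it is a scale factor rather than a distance in meters.
  - If s0 > 0 ⇒ the intersection is in front of the camera (valid)
  - If s0 ≤ 0 ⇒ it's behind the camera (invalid)

- `I0_W` is the 3D intersection point of the incoming ray with the water surface plane (z = 0).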

```
o_W (camera)
   \
    \   s0 · d_air_W  (ray up to the water surface)
     \
──────●────────── z = 0
      I0_W
```
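The same ray/plane intersection is used twice on this page (surface at z = 0, ROV plane at z = depth), so here is a minimal standalone sketch of it without ROS, assuming a horizontal plane in the Z-up world frame. `intersect_z_plane` and the `eps` tolerance are hypothetical names; returning `None` mirrors the `return None` branches above.

```python
import numpy as np


def intersect_z_plane(origin, direction, z_plane, eps=1e-9):
    """Return origin + s * direction on the plane z = z_plane, or None if there is no hit in front."""
    origin = np.asarray(origin, dtype=np.float64)
    direction = np.asarray(direction, dtype=np.float64)
    denom = direction[2]
    if abs(denom) < eps:  # ray parallel to the plane
        return None
    s = (z_plane - origin[2]) / denom
    if s <= 0:  # plane lies behind the ray origin
        return None
    return origin + s * direction
```

With s ≤ 0 rejected, a camera below the surface (z < 0) looking down gets `None`, which is the case the warning above reports.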

Compute Refracted Direction Into Water

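```python
n_surface_up = np.array([0.0, 0.0, 1.0])  # surface normal, pointing up into the air
d_in = d_air_W / np.linalg.norm(d_air_W)  # unit incident direction
d_wtr = self.refract_dir(d_in, n_surface_up, air_n, water_n)  # refracted direction (Snell's law)
```

- `d_in` is the unit direction vector of the ray in the air, pointing towards `I0_W`.

- `d_wtr` is the refracted ray direction inside the water, computed with Snell's law.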

```
        AIR (n = 1.0)
  \
   \   θ₁ (incident angle)
    \
─────●───────────── surface
      \
       \   θ₂ (refracted angle, smaller: bent towards the normal)
        \
        WATER (n = 1.33)
```
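The page calls `self.refract_dir(d_in, n_surface_up, air_n, water_n)` without showing it. Here is a minimal sketch of what such a function could look like, using the vector form of Snell's law (n₁ sin θ₁ = n₂ sin θ₂). This is an assumption about the method, not necessarily the exact implementation. It expects unit vectors, flips the normal if it points along the ray, and returns `None` on total internal reflection, which cannot happen going from air into water but can in the opposite direction.

```python
import numpy as np


def refract_dir(d_in, normal, n1, n2):
    """Refract unit direction d_in at a surface with unit normal, going from index n1 into n2."""
    d = np.asarray(d_in, dtype=np.float64)
    n = np.asarray(normal, dtype=np.float64)
    cos_i = -np.dot(n, d)
    if cos_i < 0:  # normal points along the ray; flip it to face the incoming ray
        n = -n
        cos_i = -cos_i
    eta = n1 / n2
    sin2_t = eta**2 * (1.0 - cos_i**2)  # sin^2 of the refracted angle (Snell's law)
    if sin2_t > 1.0:  # total internal reflection: no refracted ray
        return None
    cos_t = np.sqrt(1.0 - sin2_t)
    return eta * d + (eta * cos_i - cos_t) * n  # unit refracted direction
```

A ray hitting the surface straight down passes through unbent, while a 45° ray ends up with sin θ₂ = sin 45° / 1.33.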

Intersect refracted ray with plane z = depth (coming from IMU)

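```python
denom2 = d_wtr[2]  # z component of the refracted direction
if abs(denom2) < 1e-9:  # refracted ray parallel to the surface: no intersection
    rospy.logwarn("Refracted ray is parallel to the water surface")
    return None
s1 = (float(depth) - I0_W[2]) / denom2
P_W = I0_W + s1 * d_wtr  # reference + direction * distance
```

- `denom2` is the z component of the refracted ray direction.
  - If denom2 ≈ 0 ⇒ the ray is parallel to the water surface after refraction (no intersection)
  - If denom2 < 0 ⇒ the ray is pointing downward into the water (the normal case)

- `s1` tells me how far along the ray I have to travel to reach the plane at the depth level. If `d_wtr` is a unit vector (the vector form of Snell's law returns one for unit inputs), `s1` is an actual distance in world units. With Z pointing up and the surface at z = 0, `depth` has to be a negative z value (below the surface) for `s1` to come out positive.

- `P_W` is the 3D world point on the ROV's depth plane that corresponds to the original pixel, AFTER refraction. This is what I want to get! (reference + direction × distance)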

```
─────────●─────────── z = 0 (I0_W)
          \
           \   d_wtr (refracted ray)
            \
             ● P_W    z = depth
```
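As a sanity check on `P_W`, the same geometry has a closed form in the vertical plane that contains the ray, assuming a flat surface. With the camera at height h = o_W[2] above the surface, incidence angle θ₁ (measured from the vertical) and the ROV plane a distance d = −depth below the surface, the horizontal distance r between `P_W` and the point directly below the camera is:

$$
\sin\theta_2 = \frac{n_{\text{air}}}{n_{\text{water}}}\,\sin\theta_1,
\qquad
r = h\tan\theta_1 + d\tan\theta_2
$$

The first term is the horizontal offset of `I0_W` (path in air), the second the horizontal offset travelled along the steeper refracted ray in water.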

Convert back to Camera Frame

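```python
R_CW = camera_orientation.T  # world -> camera rotation (inverse of a rotation = its transpose)
p_C = R_CW @ (P_W - o_W)  # camera-to-point offset, expressed in the camera frame
return p_C
```

- `R_CW` is the rotation matrix that takes a vector expressed in the world frame and expresses it in the camera frame. Since `camera_orientation` is a rotation matrix, its transpose is its inverse.

- We subtract `P_W - o_W` to get the offset from the camera to that exact point under water before rotating it.

- `p_C` is the 3D point in camera coordinates, which is then transformed to the ArUco marker frame by the `transform_point_to_world` function. I let the ROS TF library handle that conversion back to world.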
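A minimal NumPy sketch of the world → camera step and its inverse, i.e. what the conversion back to the world frame amounts to for a rotation plus a translation. `world_to_camera` and `camera_to_world` are hypothetical names; the actual pipeline uses `transform_point_to_world` and ROS TF, whose details are not shown here.

```python
import numpy as np


def world_to_camera(P_W, camera_orientation, o_W):
    """Express world point P_W in the camera frame (camera_orientation maps camera -> world)."""
    R_CW = camera_orientation.T
    return R_CW @ (np.asarray(P_W, dtype=np.float64) - o_W)


def camera_to_world(p_C, camera_orientation, o_W):
    """Inverse transform: rotate back into the world frame, then add the camera position."""
    return camera_orientation @ np.asarray(p_C, dtype=np.float64) + o_W


# Camera 2 m above the surface, looking straight down (rotation of pi about X)
R = np.diag([1.0, -1.0, -1.0])
o = np.array([0.0, 0.0, 2.0])
p_C = world_to_camera([1.0, 0.0, -1.0], R, o)
```

A point 1 m below the surface ends up 3 m in front of the camera (positive camera Z), and `camera_to_world` recovers the original world point.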

Why refraction matters

What the camera “sees” versus the actual position:

```
camera                          camera
   \                               \
    \                               \
─────●──── surface ─────────────────●────
      \                             |
       \   (straight line)          |   (bent ray, steeper)
        \                           |
         ●  <-- wrong position      ●  <-- correct position
```

The error increases with:

- depth

- viewing angle (rays at the edge of the FOV hit the surface at a larger incidence angle and bend more)
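A small sketch that puts numbers on the two bullets above, assuming a flat surface, a camera at height h above it, the ROV plane a distance d below it, and n_air = 1.0, n_water = 1.33 (the values from the diagram). `refraction_error` is a hypothetical helper that returns how far, horizontally, the straight-line guess lands from the refracted position.

```python
import numpy as np


def refraction_error(theta1_deg, h, d, n_air=1.0, n_water=1.33):
    """Horizontal error of the straight-line guess vs. the refracted ray (same units as h, d)."""
    theta1 = np.deg2rad(theta1_deg)
    theta2 = np.arcsin(n_air / n_water * np.sin(theta1))  # Snell's law
    r_straight = (h + d) * np.tan(theta1)  # ray continues unbent below the surface
    r_refracted = h * np.tan(theta1) + d * np.tan(theta2)  # ray bends at the surface
    return r_straight - r_refracted  # = d * (tan(theta1) - tan(theta2))


for depth in (0.5, 1.0, 2.0):
    errors = [refraction_error(angle, h=1.5, d=depth) for angle in (0, 15, 30, 45)]
    print(depth, np.round(errors, 3))
```

The camera height h cancels out: the error is d(tan θ₁ − tan θ₂), zero straight below the camera, linear in depth, and growing with the viewing angle (about 0.17 m at 30° for 1 m of water).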