Notes for my Image Processing and Computer Vision exam at the University of Twente. They double as a summary of the lectures; I didn’t feel like making separate pages for each subject.

Most of the terms I have already used in:

- SeaClear

- Bachelors Thesis

- Barcode Detection and Decoding and others on GitHub.

Lecture 2

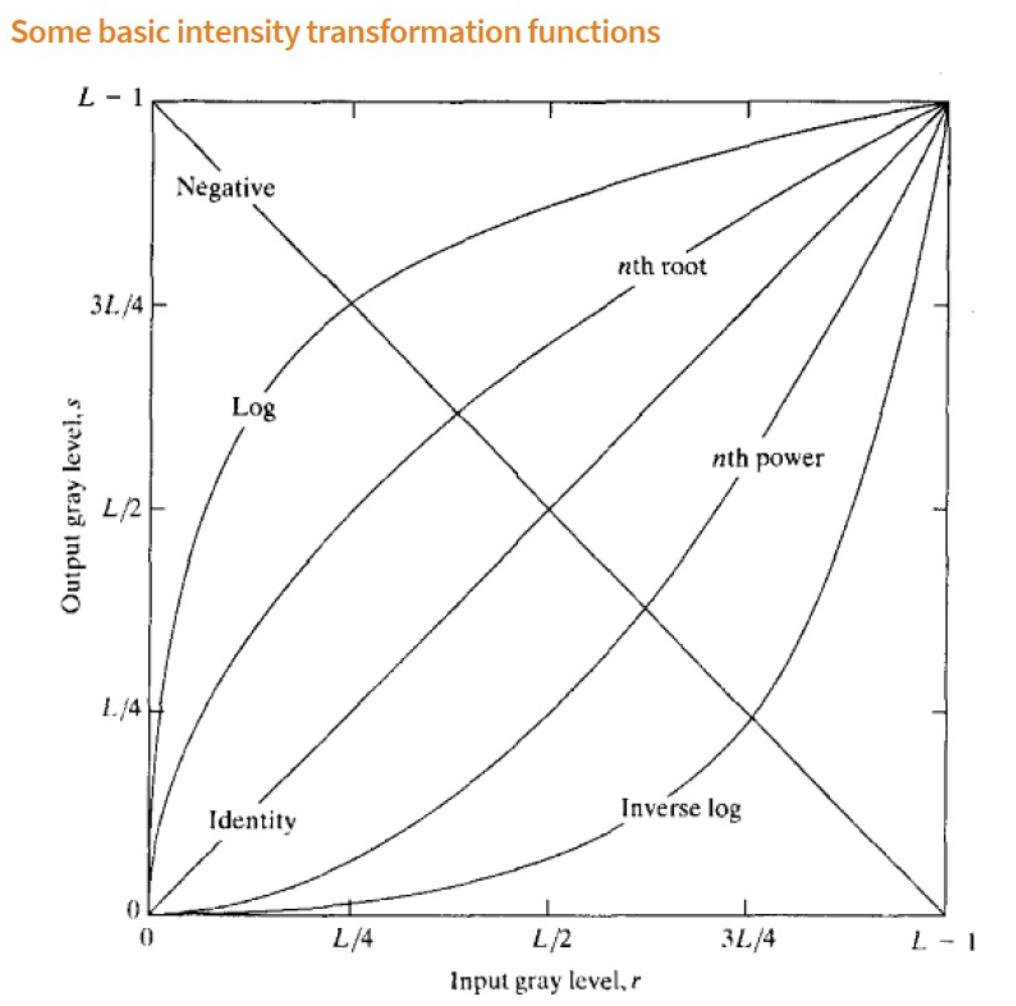

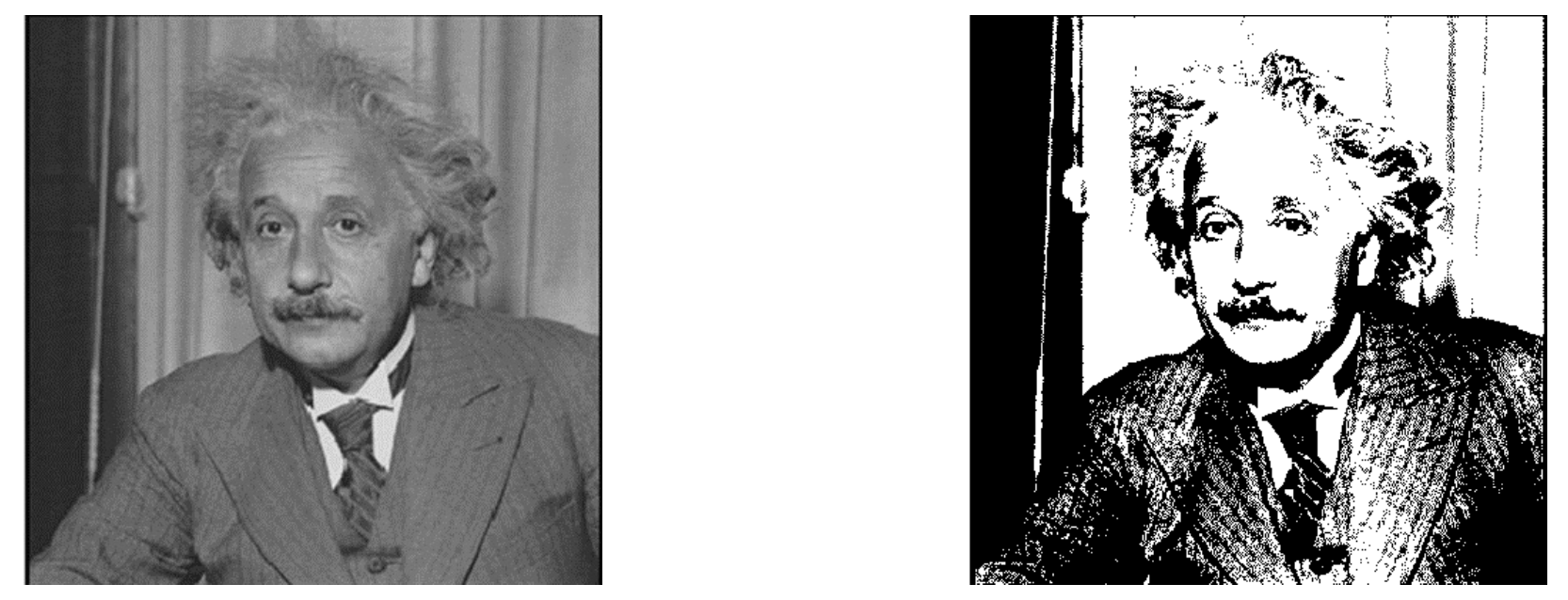

Intensity Transformations

- Some function that takes as input the old pixel value and gives the new one.

- Mostly used for image enhancement

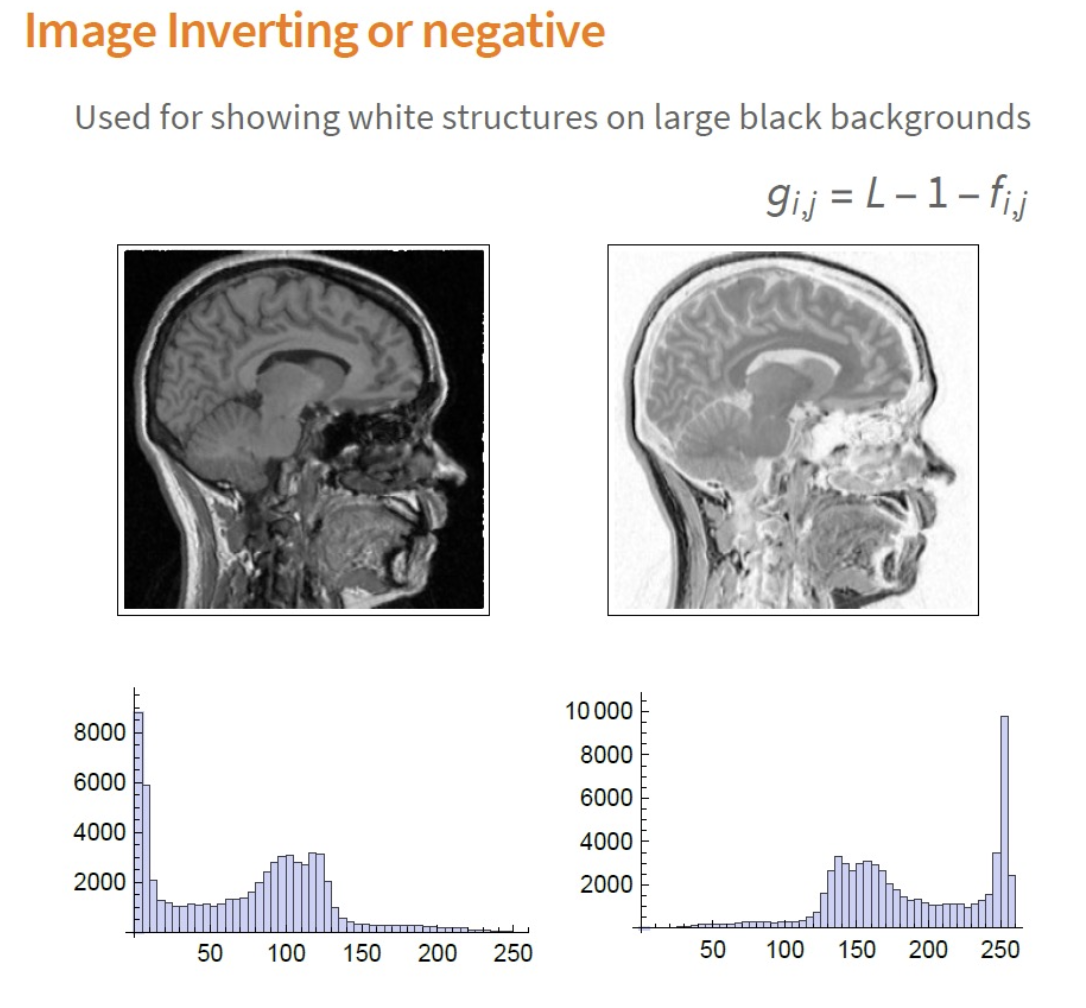

- Image inverting or negative: showing white structures on large black backgrounds.

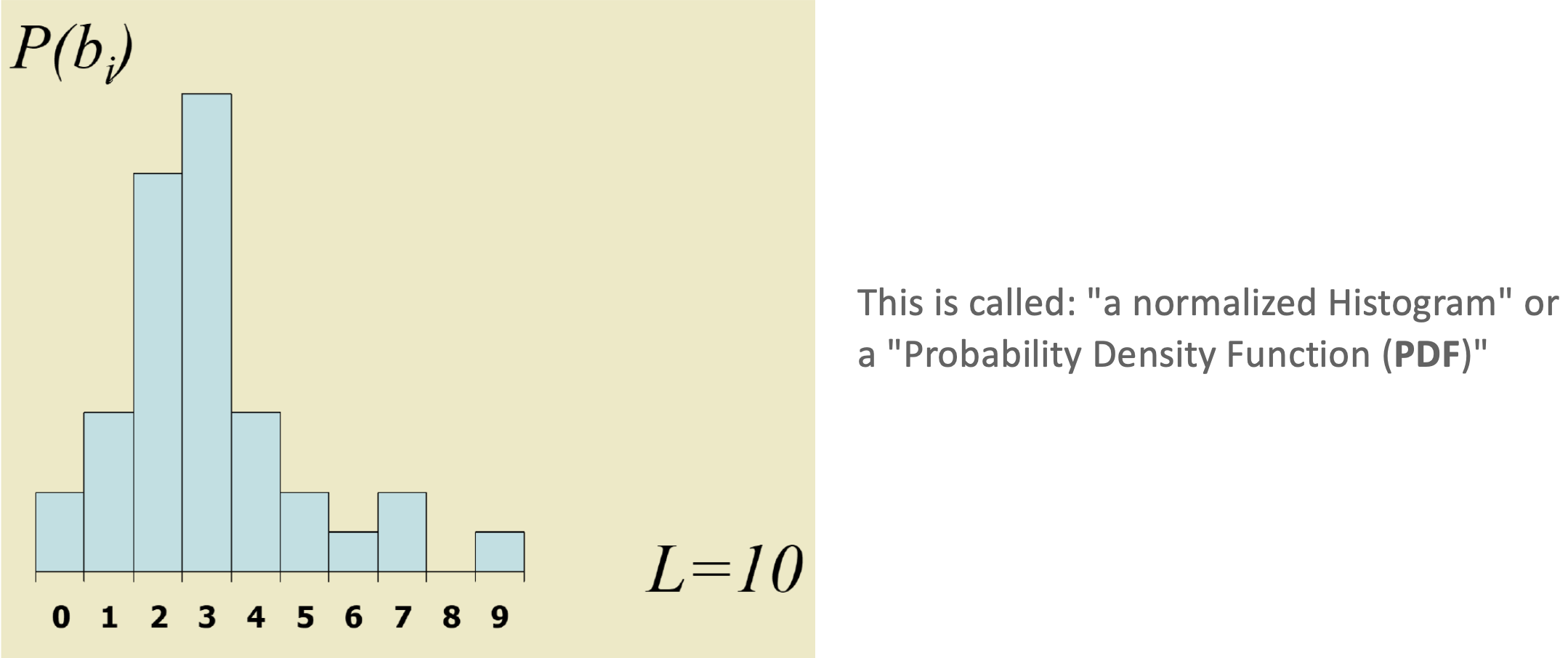

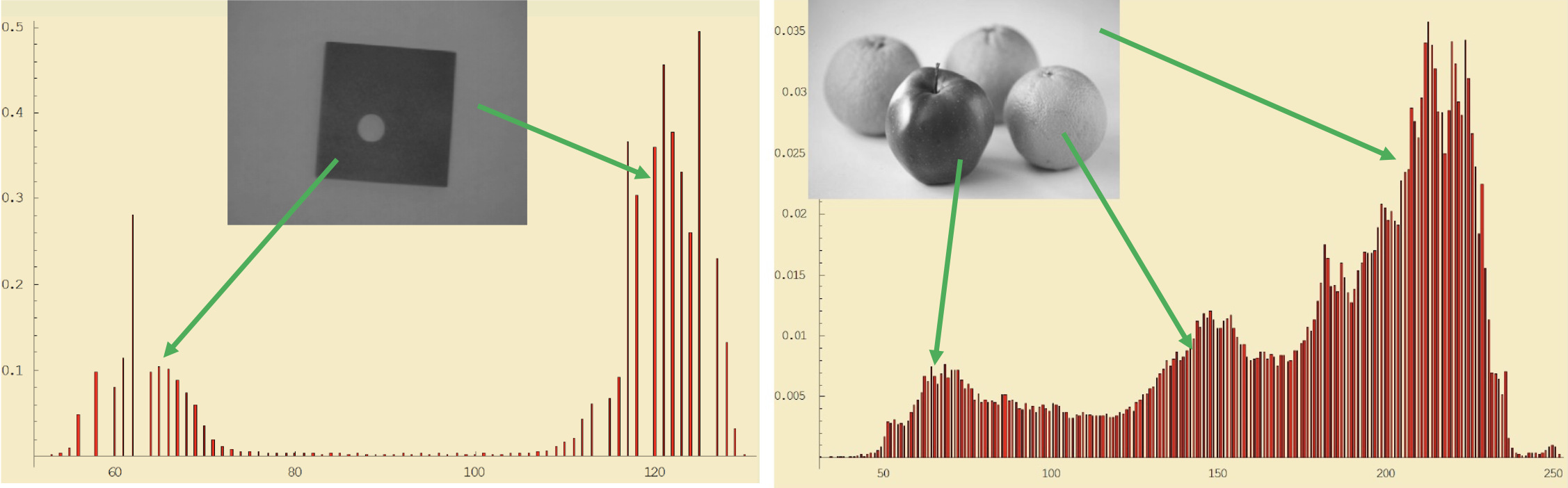

Histograms

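As the teacher explained it, a histogram is basically a frequency vector of the pixel intensities, divided by the total number of pixels.

- A histogram consists of $K$ bins $h_k$, with $k = 0, \dots, K-1$

- $h_k$ = number of pixels for which the intensity equals $k$

- $N$ is the total number of pixels. Then $\sum_k h_k = N$, and the normalized histogram is $p_k = h_k / N$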
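A minimal sketch of the normalized histogram described above, assuming an integer-valued image; the function name `normalized_histogram` and the tiny test image are made up for illustration.

```python
import numpy as np

def normalized_histogram(image, bins=256):
    """Return h[k] / N for an integer image with levels 0..bins-1."""
    counts = np.bincount(image.ravel(), minlength=bins)  # h[k]: pixels with value k
    return counts / image.size                           # divide by N

# Hypothetical 2x3 image with intensity levels 0..3
img = np.array([[0, 1, 1],
                [3, 1, 0]], dtype=np.uint8)
p = normalized_histogram(img, bins=4)
print(p)   # fraction of pixels per level; the entries sum to 1
```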

Gamma transformations

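- $s = c \cdot r^{\gamma}$, with $r$ the input intensity scaled to $[0, 1]$

- $\gamma > 1$ means darker images

- $\gamma < 1$ means brighter images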
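The effect of $\gamma$ can be checked on a single mid-gray pixel. This is a sketch that assumes intensities scaled to $[0, 1]$; `gamma_transform` is an illustrative name, not something from the lecture.

```python
import numpy as np

def gamma_transform(image, gamma, c=1.0):
    """Apply s = c * r**gamma to an image with intensities in [0, 1]."""
    r = np.clip(image.astype(np.float64), 0.0, 1.0)
    return c * r ** gamma

mid_gray = np.array([[0.5]])
darker = gamma_transform(mid_gray, 2.0)     # gamma > 1 pushes 0.5 down
brighter = gamma_transform(mid_gray, 0.5)   # gamma < 1 pushes 0.5 up
```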

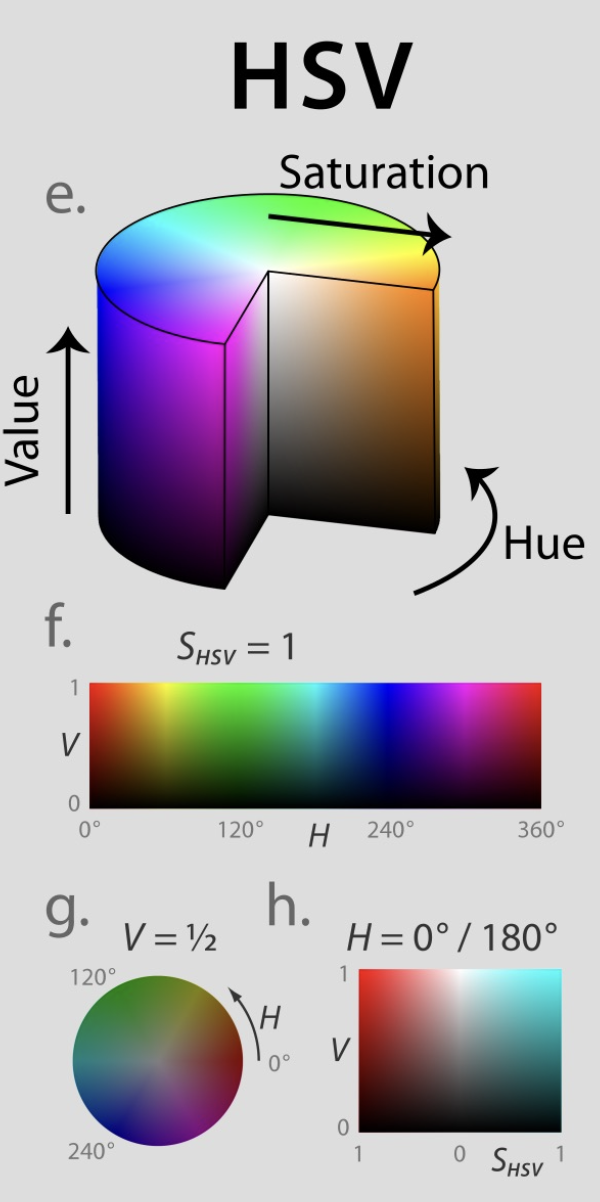

Color Spaces

- RGB (Red, Green, Blue)

- HSV (Hue, Saturation, Value)

- HSL (Hue, Saturation, Lightness)

- Hue is the color itself (its angle on the color wheel)

- Saturation is the purity of the hue

- Value is the brightness of the color

Image Filtering


- in spatial domain, filtering is a mathematical operation on a grid of numbers (smoothing, sharpening)

- in frequency domain, filtering is a way of modifying the frequencies of images (denoising, sampling, compression)

- in templates and image pyramids, filtering is a way to match a template to the image (detection)

- Translating an image or multiplying/adding with a constant leaves the semantic context intact

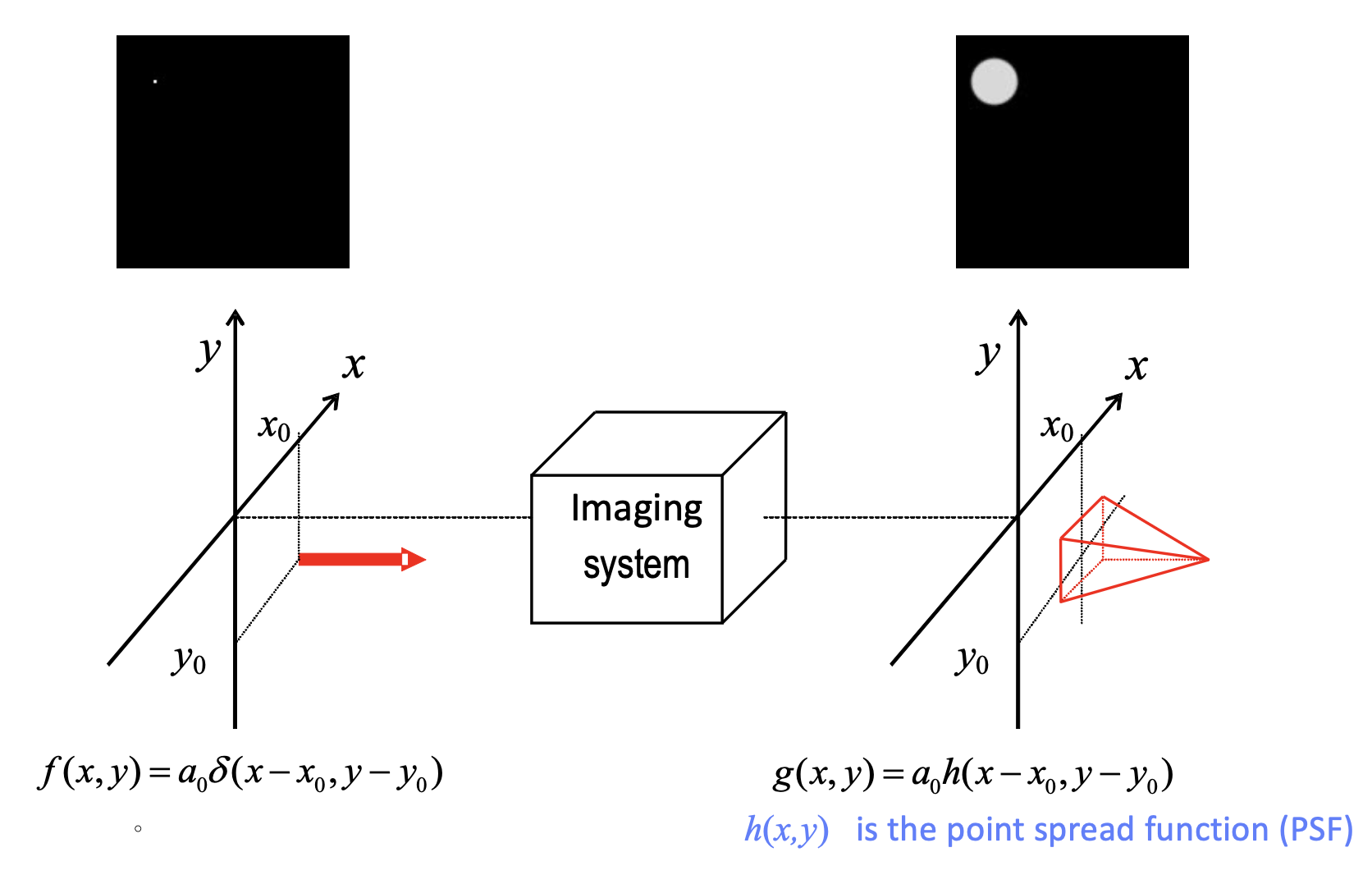

Intensity versus Point Spread Functions (PSF)

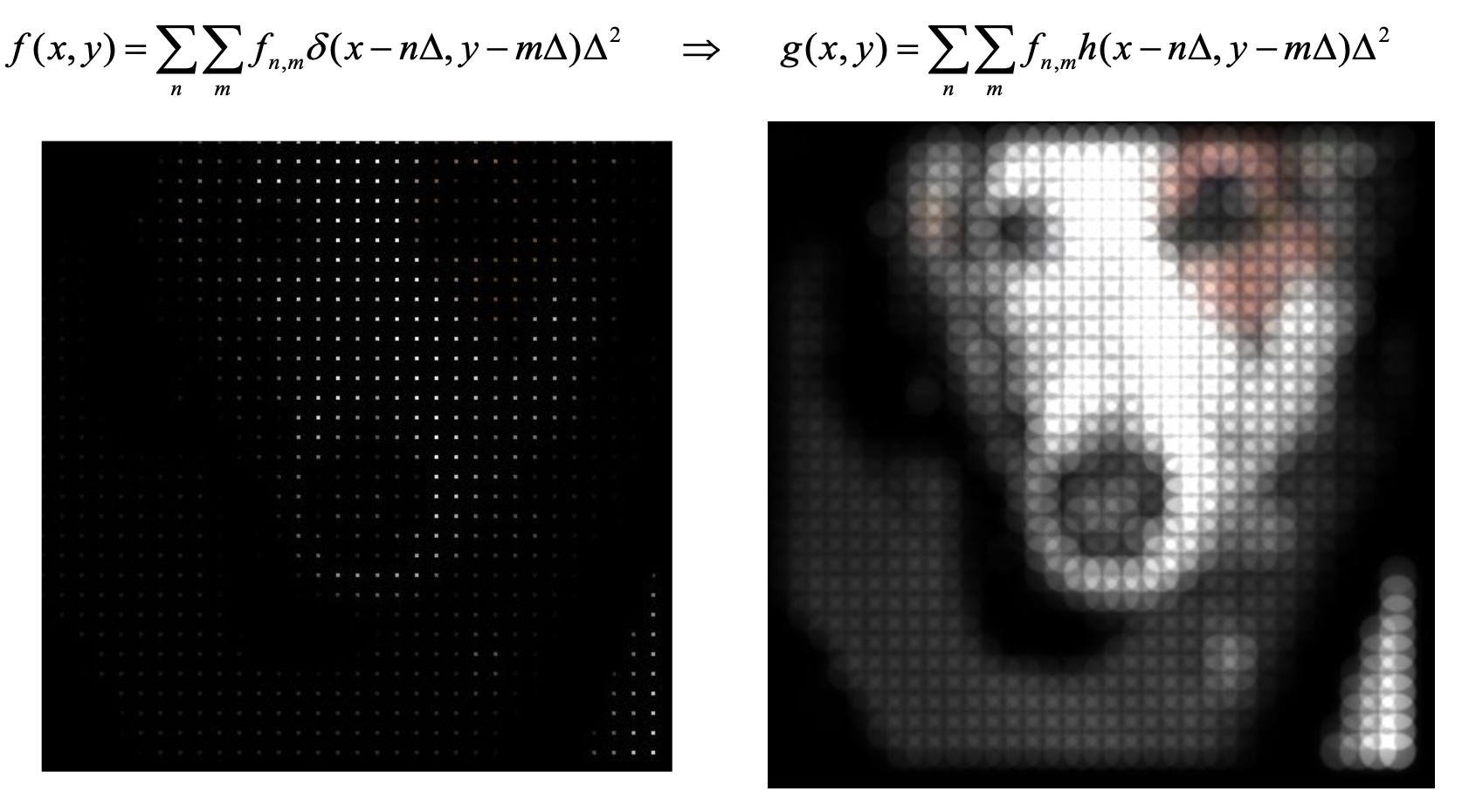

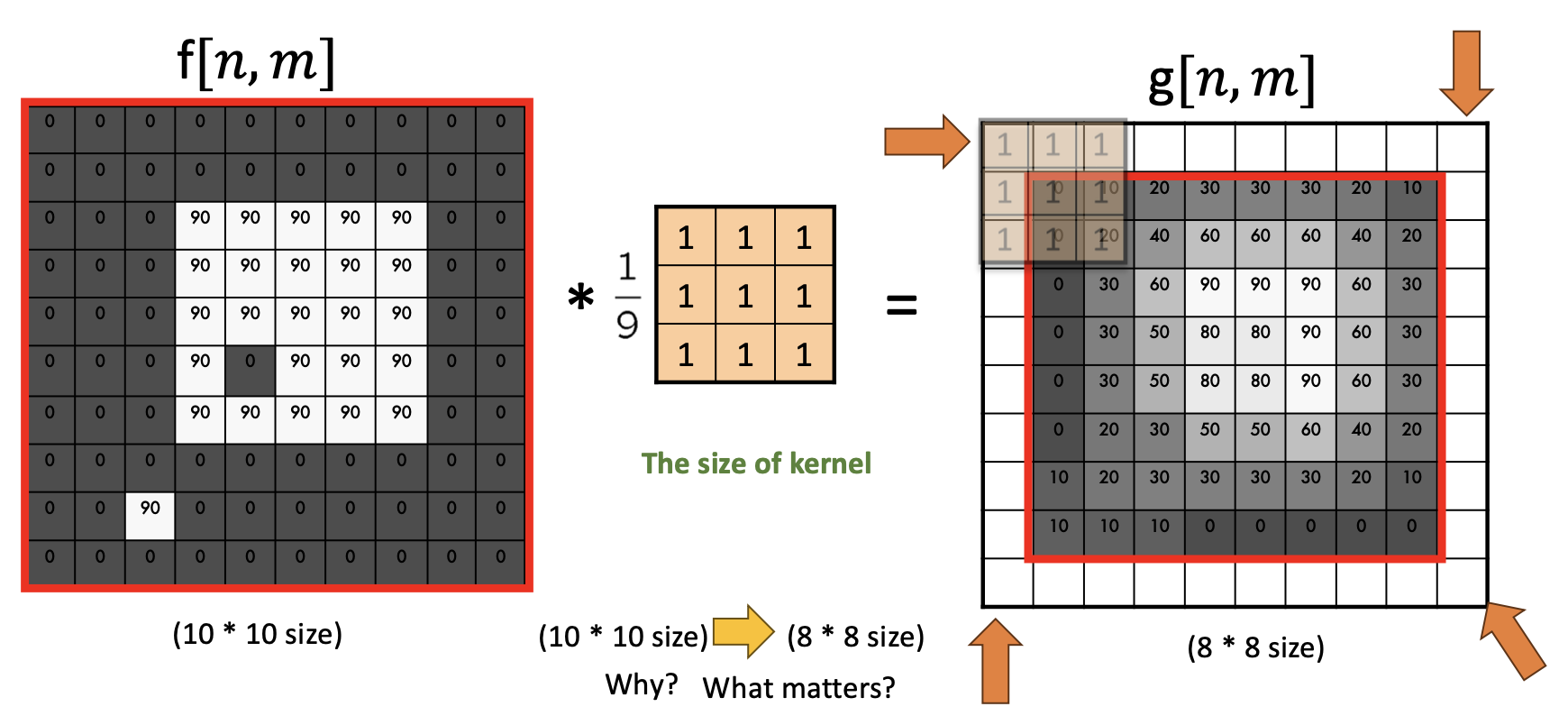

Convolution

- 2D: $(f * h)(x, y) = \sum_i \sum_j f(i, j)\, h(x - i, y - j)$

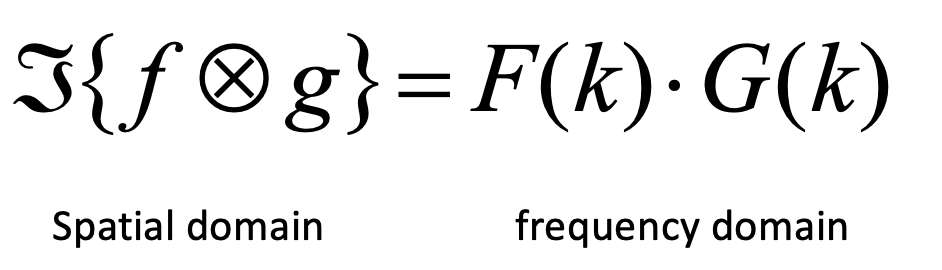
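A direct (slow) sketch of 2D convolution without padding. It only serves to show the kernel flip and the reduced output size; `convolve2d_valid` is an illustrative helper, and real code would use a library routine.

```python
import numpy as np

def convolve2d_valid(image, kernel):
    """Direct 2D convolution without padding ('valid' output only)."""
    kh, kw = kernel.shape
    flipped = kernel[::-1, ::-1]              # convolution flips the kernel
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for y in range(out_h):
        for x in range(out_w):
            out[y, x] = np.sum(image[y:y + kh, x:x + kw] * flipped)
    return out

image = np.arange(25, dtype=float).reshape(5, 5)
box = np.ones((3, 3)) / 9.0                   # moving-average kernel
smoothed = convolve2d_valid(image, box)
negated = convolve2d_valid(image, -box)       # same kernel with a minus sign
print(smoothed.shape)                         # 5 - 3 + 1 = 3 along each axis
```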

Convolution Theorem

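- Convolution in the space domain is equivalent to multiplication in the frequency domain

- After convolution without padding, the output is smaller than the input: an $M \times N$ image and an $m \times n$ kernel give $(M - m + 1) \times (N - n + 1)$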

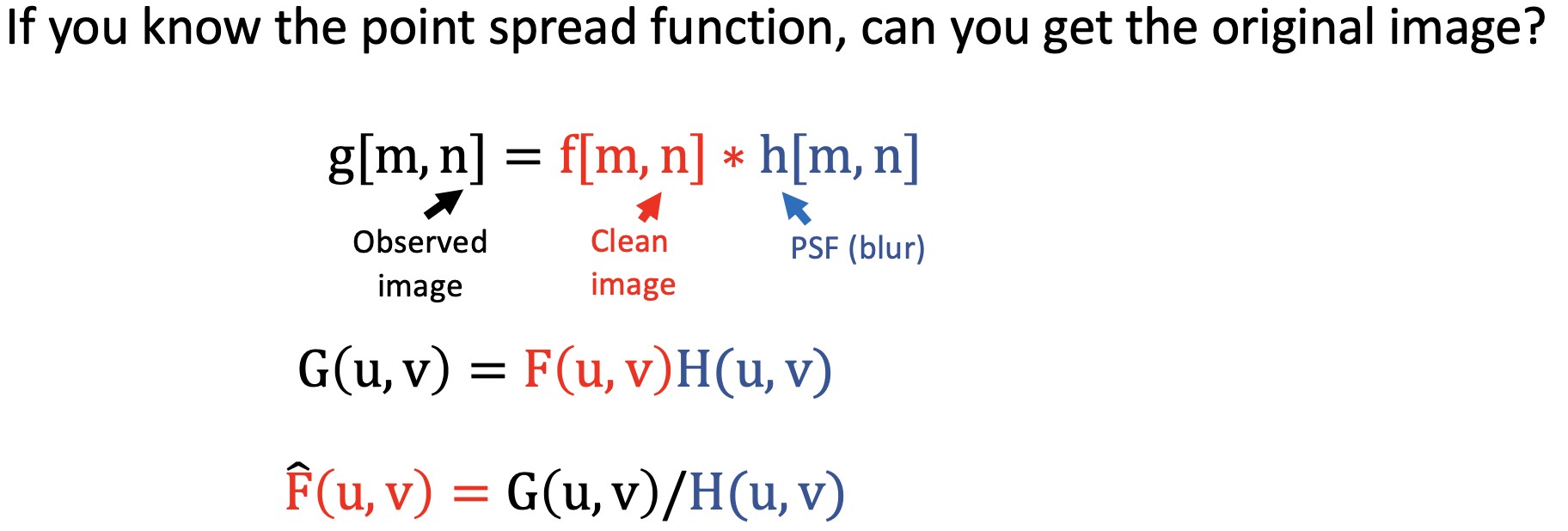

Image Restoration

- Inverse filtering divides by the transfer function of the blur, so limit how small the denominator can get to avoid dividing by 0!

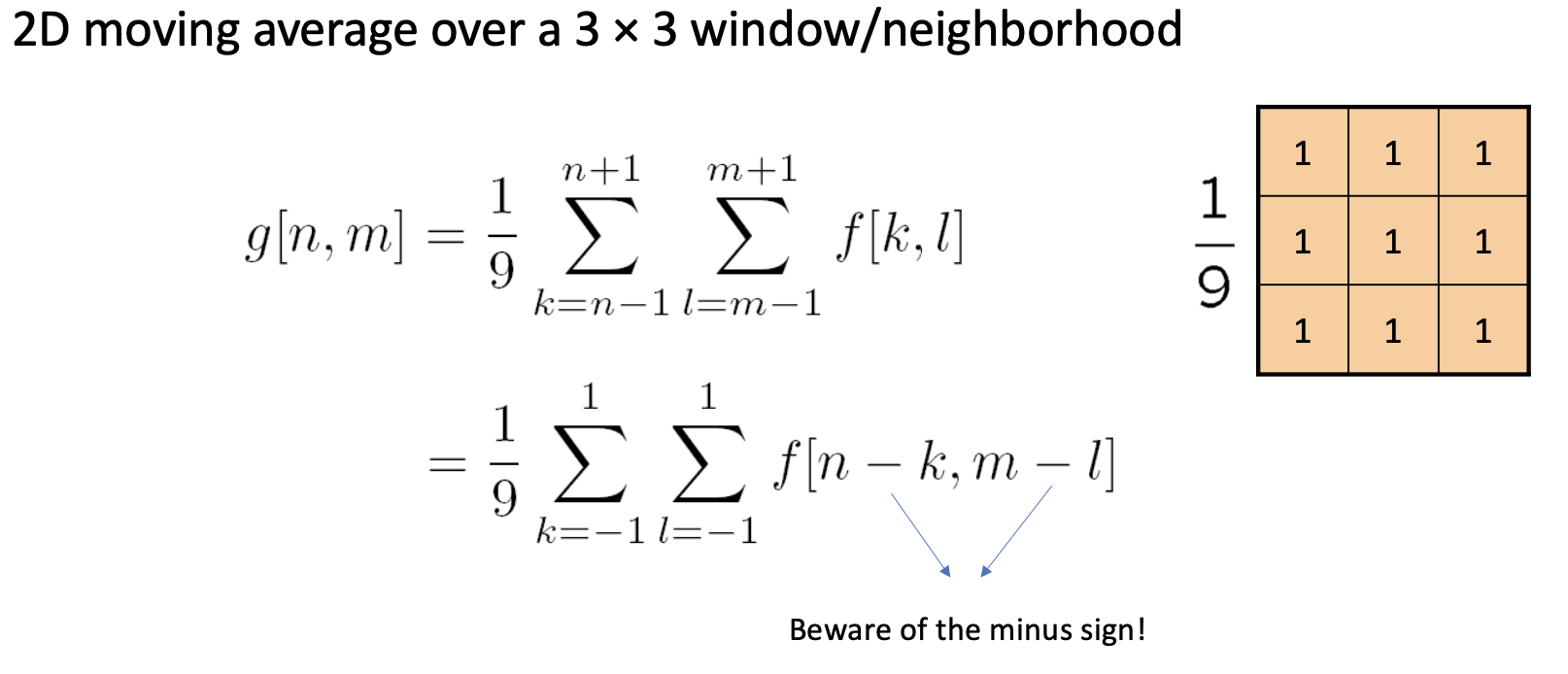

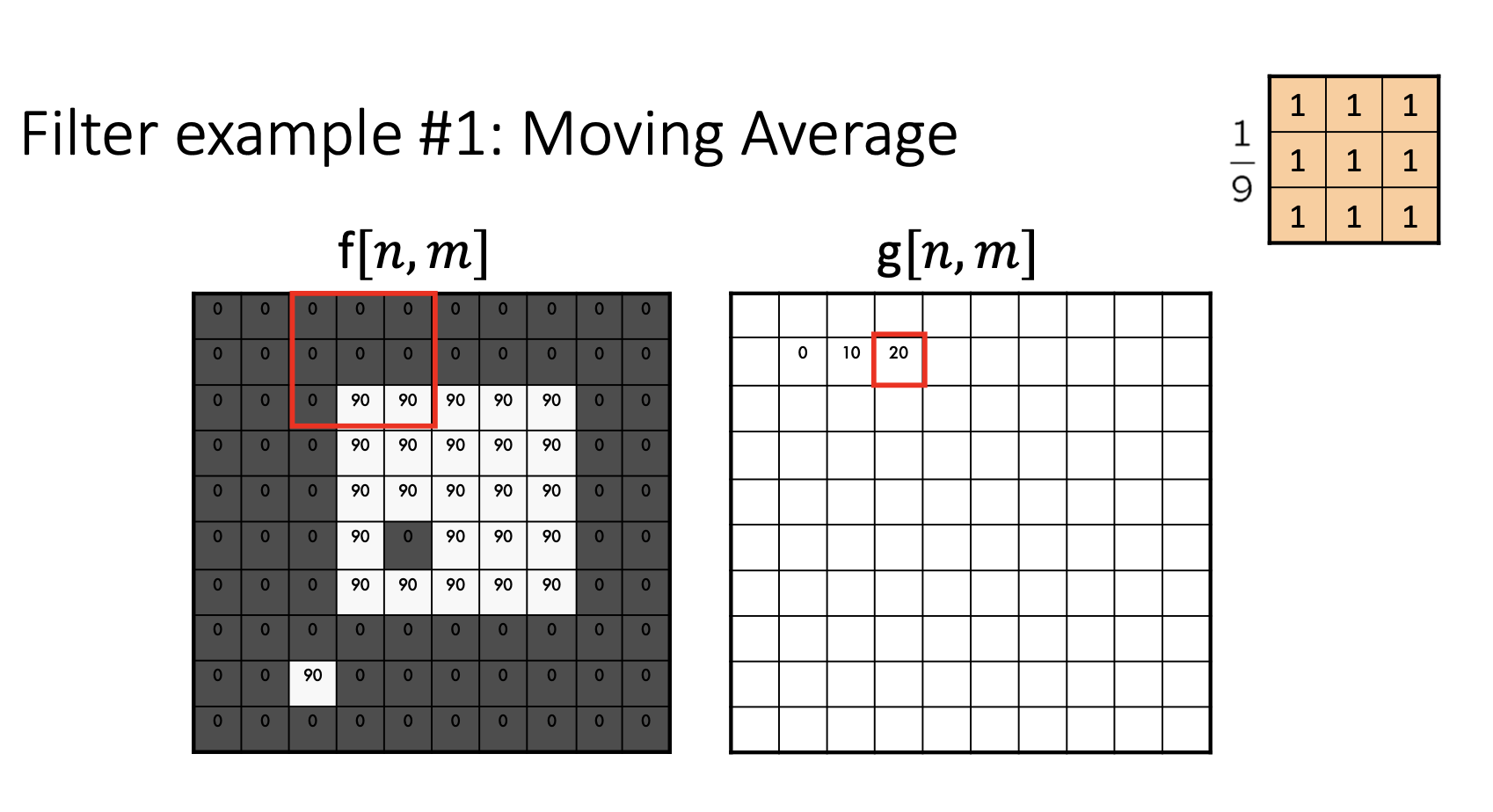

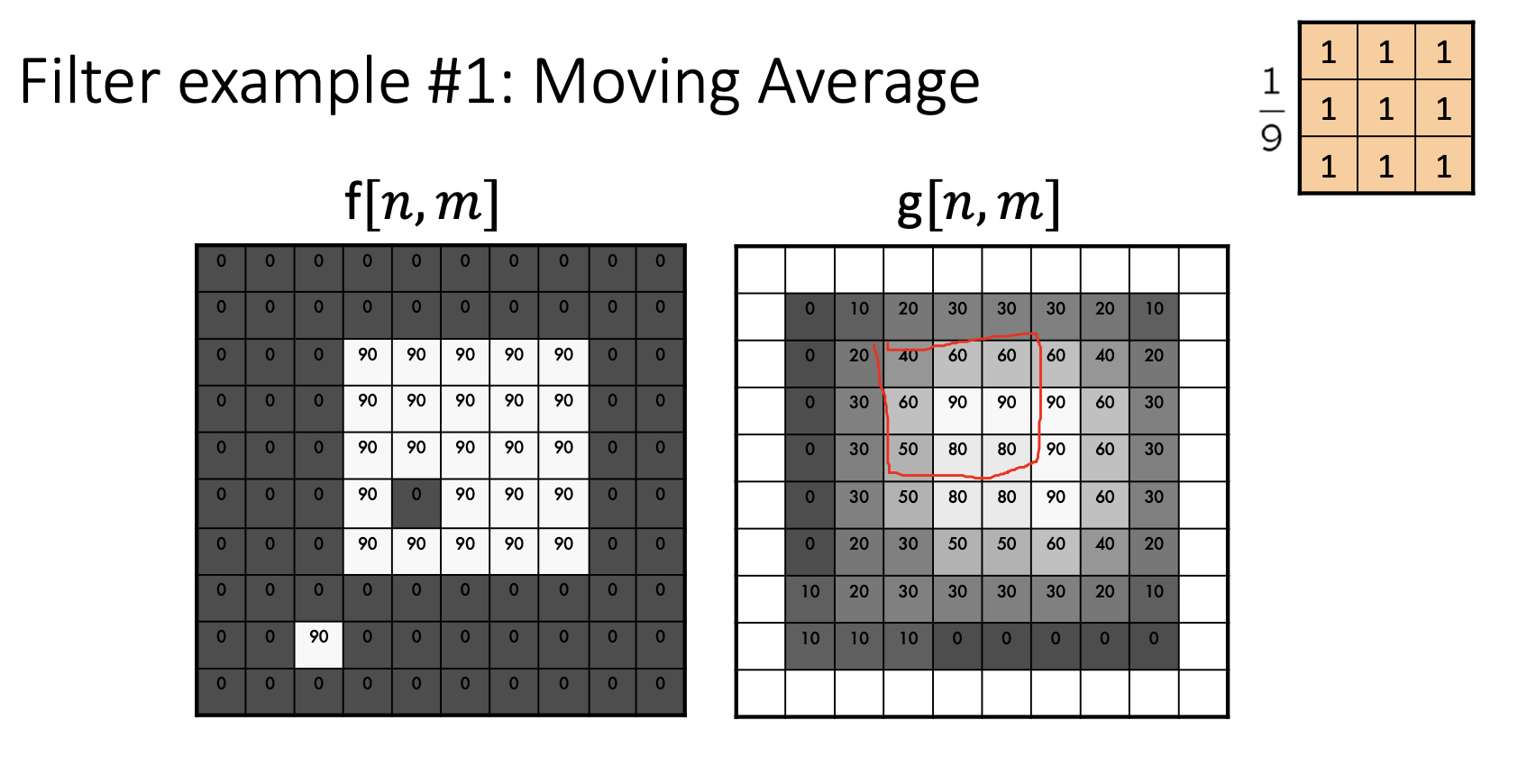

Moving Average

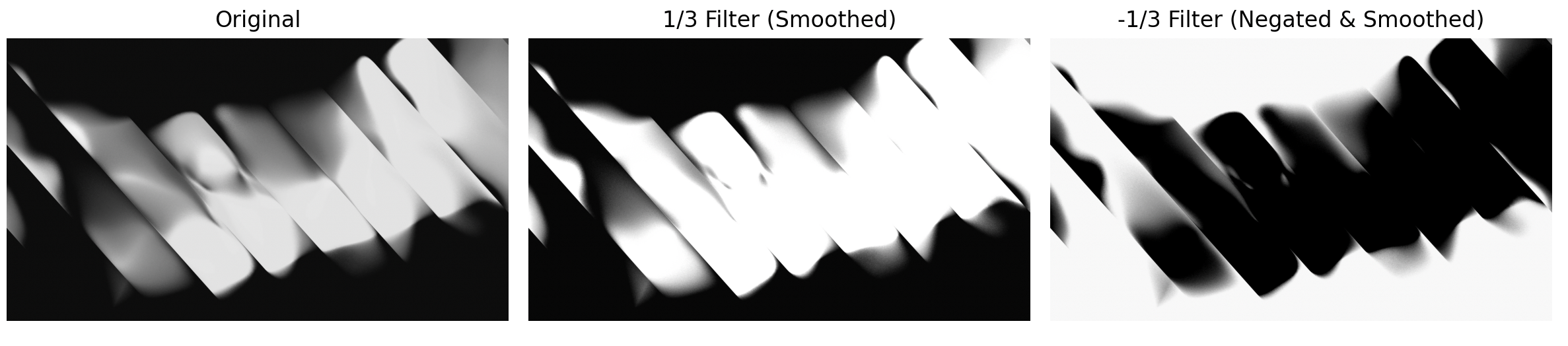

What this specific filter does:

- It smoothens the image

- It calculates the average in a neighborhood

So if the kernel was $-\frac{1}{9}\begin{bmatrix} 1 & 1 & 1 \\ 1 & 1 & 1 \\ 1 & 1 & 1 \end{bmatrix}$

The rule would be: Output = -(sum of the 9 pixels in the neighborhood) / 9 = -average

- The result would be the negative of the one above, so bright regions become darker and dark regions become brighter.

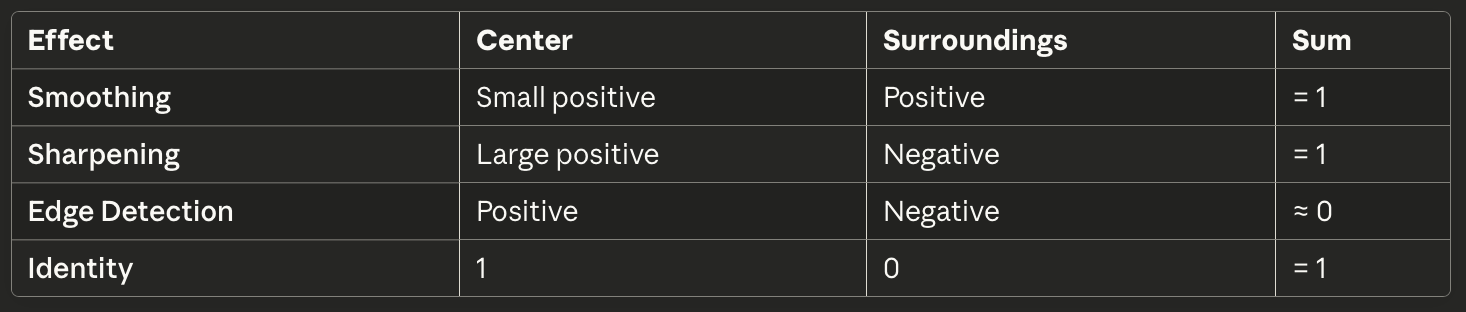

Some rules of thumb for each operation

What a kernel does:

- Darkening: sum of all elements < 1

- Brightening: sum of all elements > 1

- Smoothing: all weights are positive and spread over the neighborhood

- Sharpening: the center value is large and positive, the surrounding values are negative, and the sum == 1

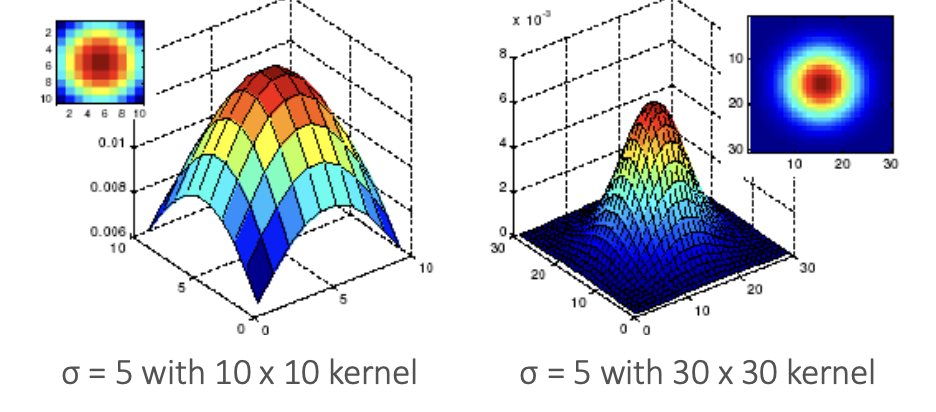

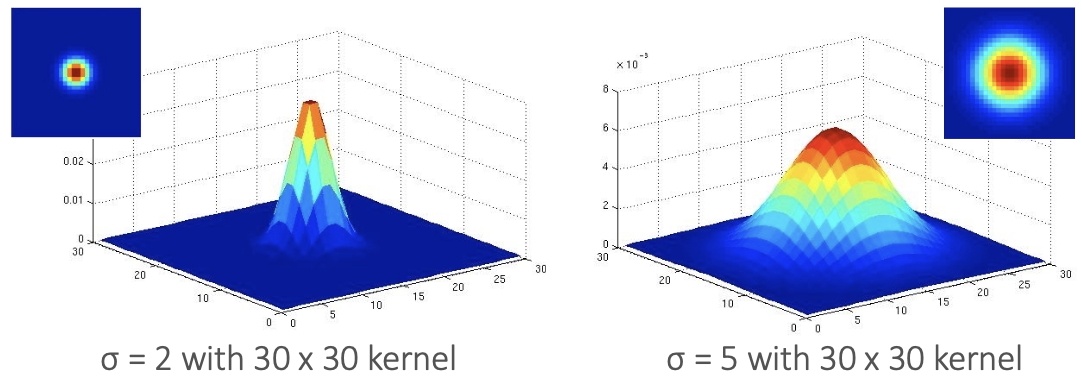

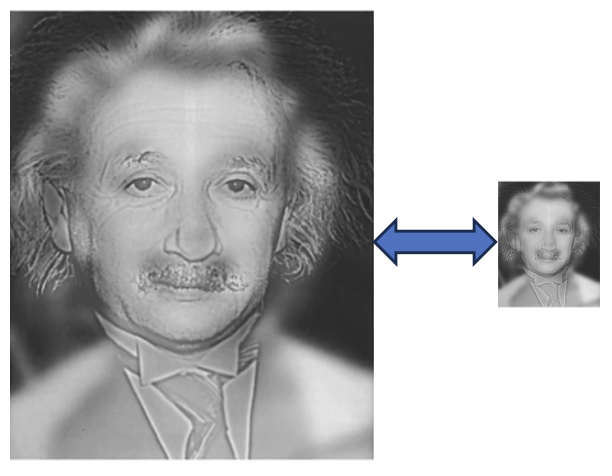

Gaussian Filter

- Smoothing

- Denoising

Key Parameters

- $\sigma$ (standard deviation; the variance is $\sigma^2$): controls blur strength (extent of smoothing)

- Small $\sigma$ (e.g. 2) = sharp, concentrated, less blur

- Large $\sigma$ (e.g. 5) = wide, spread-out, more blur

- Kernel size: must be large enough to capture the Gaussian shape (a common rule of thumb is a radius of about $3\sigma$)

- Too small: truncates the Gaussian bell curve
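The kernel-size point can be made concrete by measuring how much of the bell curve a given radius captures. This is a sketch under the assumption that a radius of `ceil(3 * sigma)` is "large enough"; both function names are invented for this example.

```python
import numpy as np

def gaussian_kernel(sigma, radius=None):
    """Sampled, normalized 2D Gaussian; radius defaults to ceil(3 * sigma)."""
    if radius is None:
        radius = int(np.ceil(3 * sigma))
    ax = np.arange(-radius, radius + 1)
    g1 = np.exp(-ax**2 / (2.0 * sigma**2))
    kernel = np.outer(g1, g1)                 # the 2D Gaussian is a product of 1D ones
    return kernel / kernel.sum()

def captured_mass(sigma, radius):
    """Fraction of a wide reference Gaussian that falls inside the radius."""
    half = 10 * int(np.ceil(sigma))
    ax = np.arange(-half, half + 1)
    g1 = np.exp(-ax**2 / (2.0 * sigma**2))
    inside = np.abs(ax) <= radius
    return (g1[inside].sum() / g1.sum()) ** 2  # square the 1D fraction for 2D

kernel = gaussian_kernel(2.0)                  # 13 x 13 for sigma = 2
wide_enough = captured_mass(2.0, 6)            # radius 3 * sigma
truncated = captured_mass(2.0, 2)              # radius 1 * sigma cuts the tails
```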

Non-linear Filters

Median Filter

- A median filter operates over a window by selecting the median intensity in the window

- Good for salt-and-pepper noise

- Robustness to outliers (in comparison with Gaussian)

- Edge-preserving (in comparison with Gaussian)
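A small sketch of why the median is robust to outliers while a linear average is not. The 3x3 window, the edge replication at the border and the helper names are choices made for this example only.

```python
import numpy as np

def windows3(image):
    """Stack the 9 shifted copies that make up every 3x3 neighborhood."""
    padded = np.pad(image, 1, mode="edge")     # replicate border pixels
    h, w = image.shape
    return np.stack([padded[dy:dy + h, dx:dx + w]
                     for dy in range(3) for dx in range(3)])

def median_filter3(image):
    return np.median(windows3(image), axis=0)

def mean_filter3(image):
    return windows3(image).mean(axis=0)

flat = np.full((5, 5), 10.0)
flat[2, 2] = 255.0                             # a single "salt" pixel
med = median_filter3(flat)                     # outlier is removed completely
avg = mean_filter3(flat)                       # outlier is smeared over its neighbors
```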

Correlation vs Convolution

- In convolution, we flip the kernel

- A convolution is an integral that expresses the amount of overlap of one function as it is shifted over another function. (filtering operation)

- Correlation compares the similarity of two sets of data. Correlation computes a measure of similarity of two input signals as they are shifted by one another. The correlation result reaches a maximum at the time when the two signals match best (measure of relatedness of two signals)

Template Matching

- It’s done through Normalized cross-correlation

- Matching depends on

- scale,

- orientation,

- general appearance

Lecture 3 — Fourier Transform and Convolution

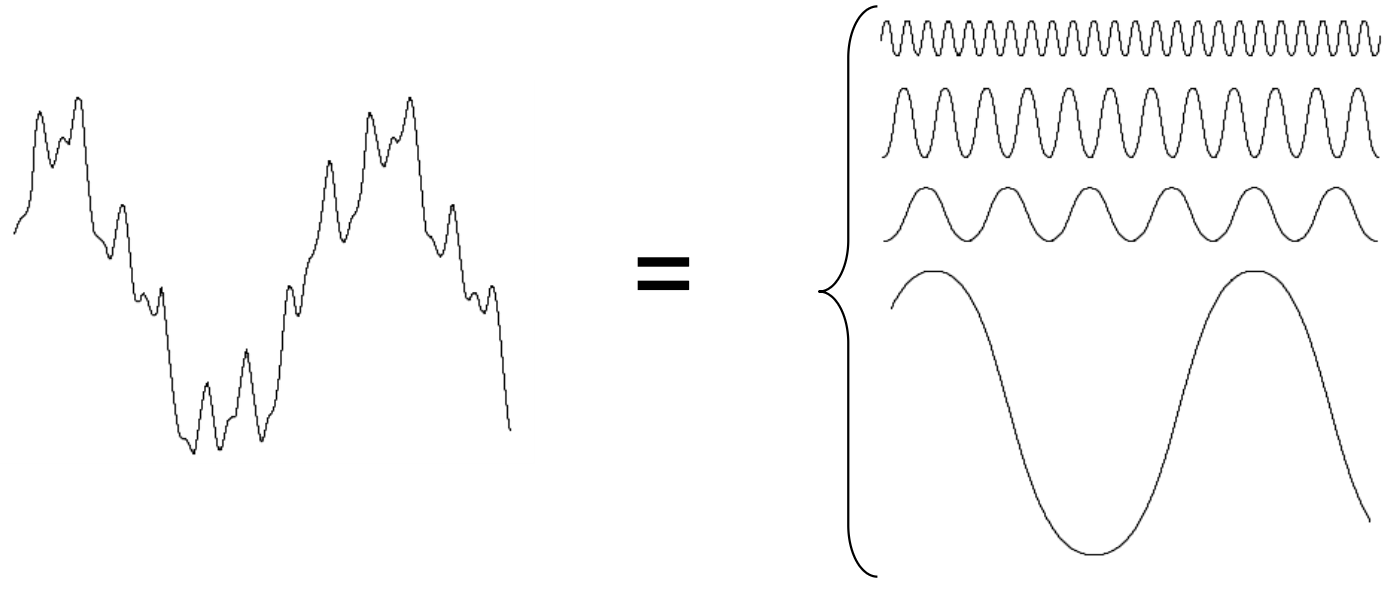

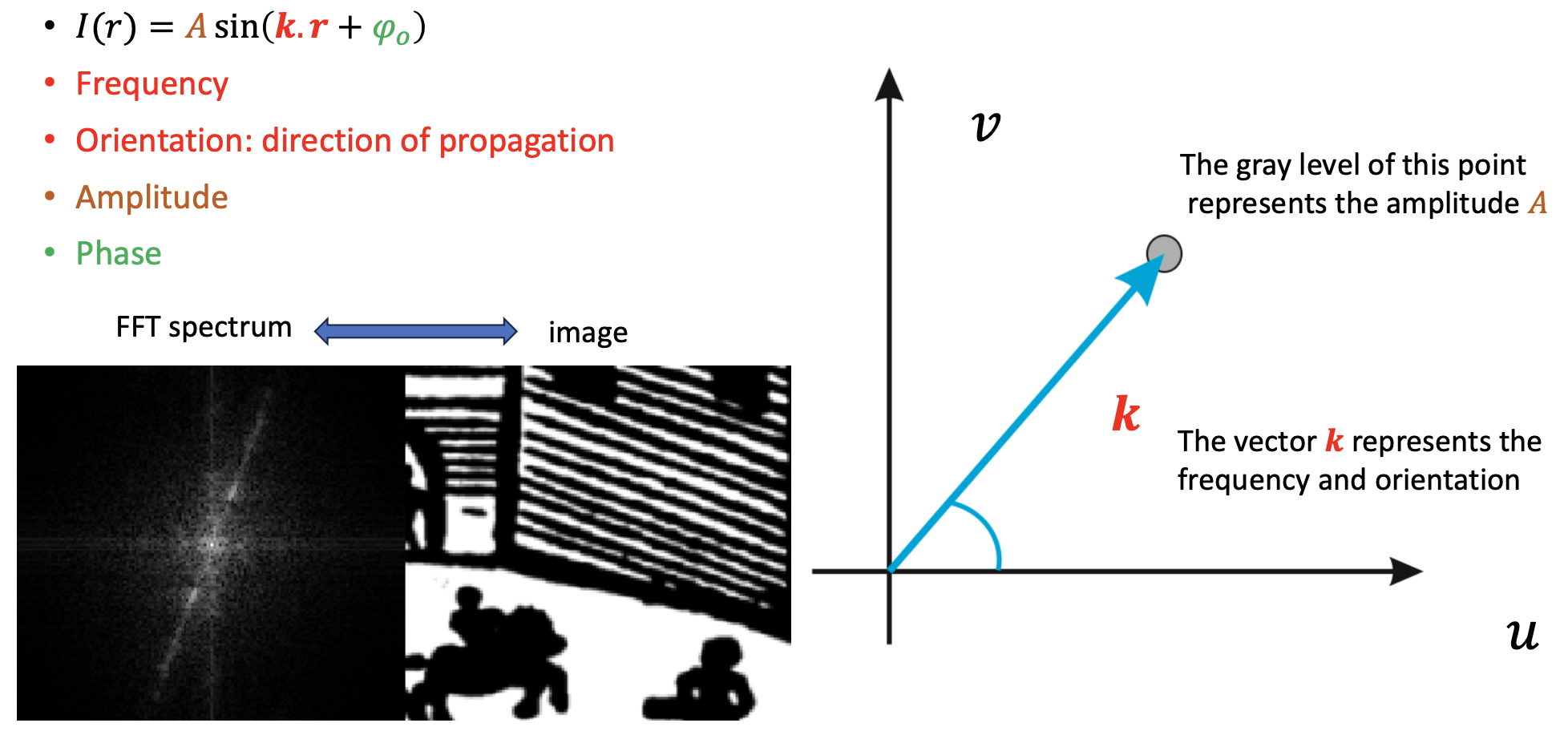

Fourier Transform

Fourier Theory

Any function that periodically repeats itself can be expressed as a sum of sines and cosines of different frequencies each multiplied by a different coefficient – a Fourier series.

Important parameters

- Frequency:

- Amplitude:

- Phase:

- Orientation:

Spectrum

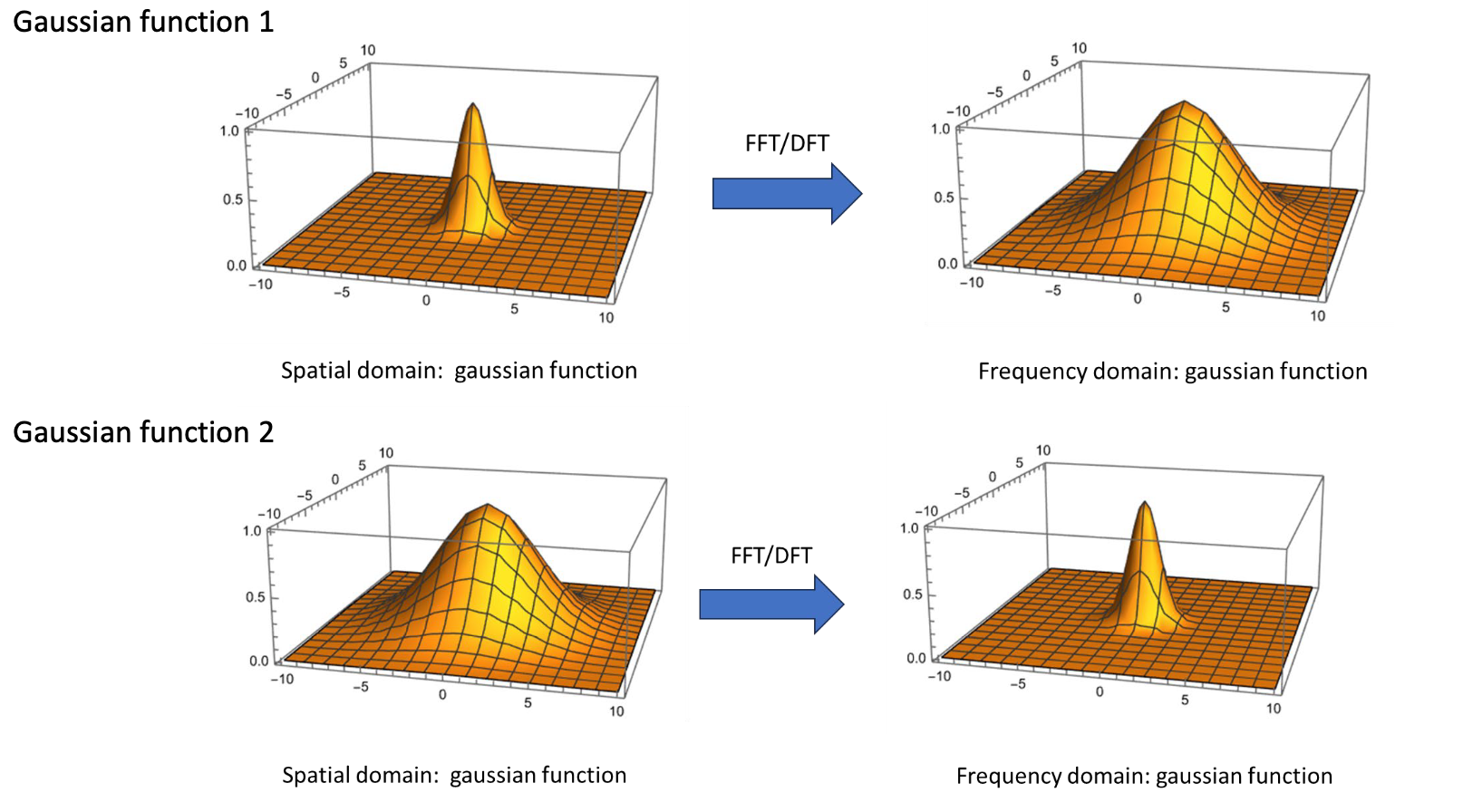

Fourier Uncertainty Principle

Narrow in Space, Wide in Frequency

- Spatial Domain: The function is a narrow spike. This means the signal is highly localized in space (it exists intensely at one small spot, around (0,0), and is zero almost everywhere else).

- Frequency Domain: The resulting function is wide and spread out. To create such a sharp, sudden spike in the spatial domain, you need to combine many different frequencies (both low and high). This “wide-band” combination of frequencies results in a wide, spread-out plot in the frequency domain.

Wide in Space, Narrow in Frequency

- Spatial Domain: The function is a wide, broad hill. This means the signal is delocalized (spread out) in space. It changes very smoothly and gradually.

- Frequency Domain: The resulting function is a narrow spike. Because the spatial function is so smooth and changes slowly, it is composed almost entirely of low frequencies. It doesn’t need high frequencies (which create sharp changes). This “narrow-band” signal is highly localized around the zero-frequency (DC) component, resulting in a narrow spike.

So, in short:

- To localize a signal in space (make it narrow), you must delocalize it in frequency (make it wide).

- To localize a signal in frequency (make it narrow), you must delocalize it in space (make it wide).

- You can’t have a function that is “narrow” in both the spatial and frequency domains simultaneously.

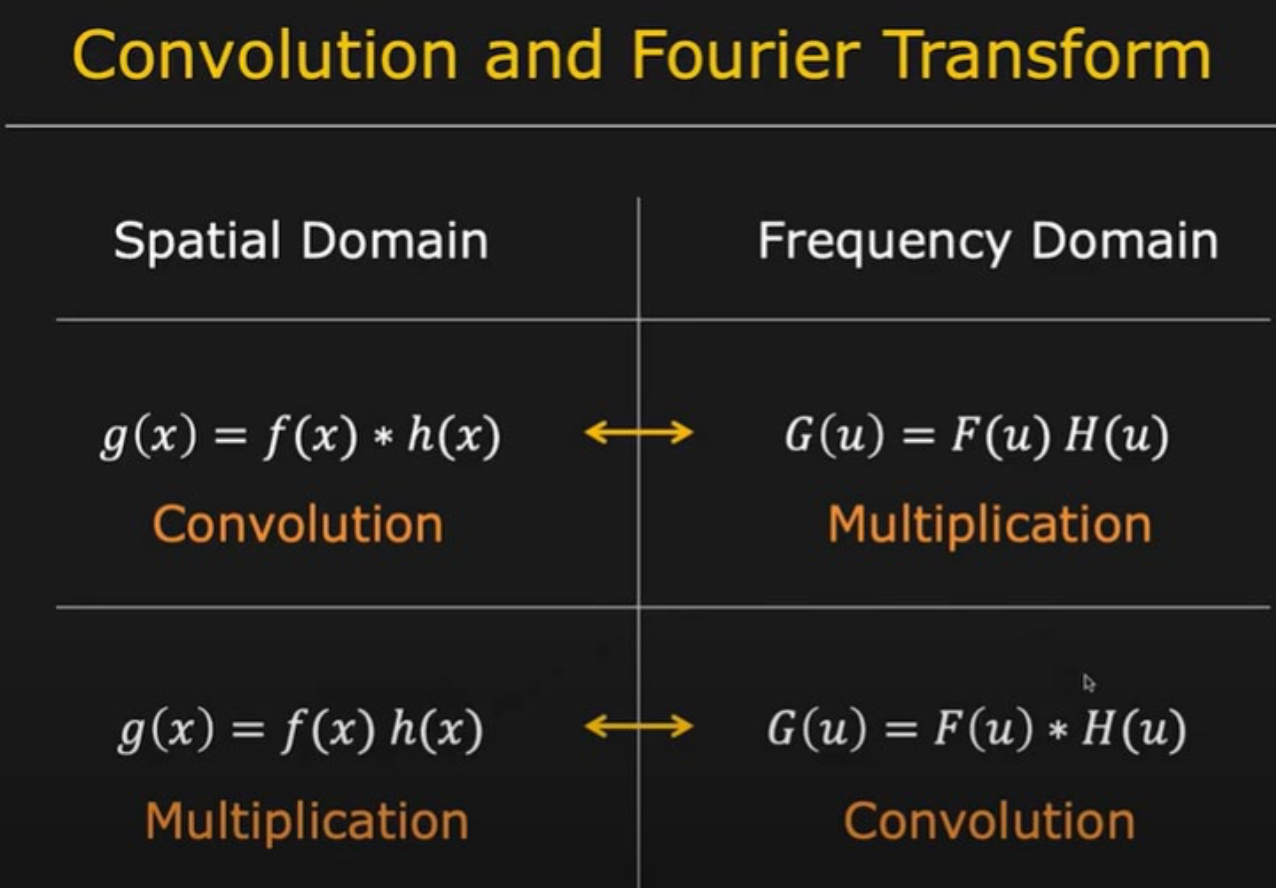

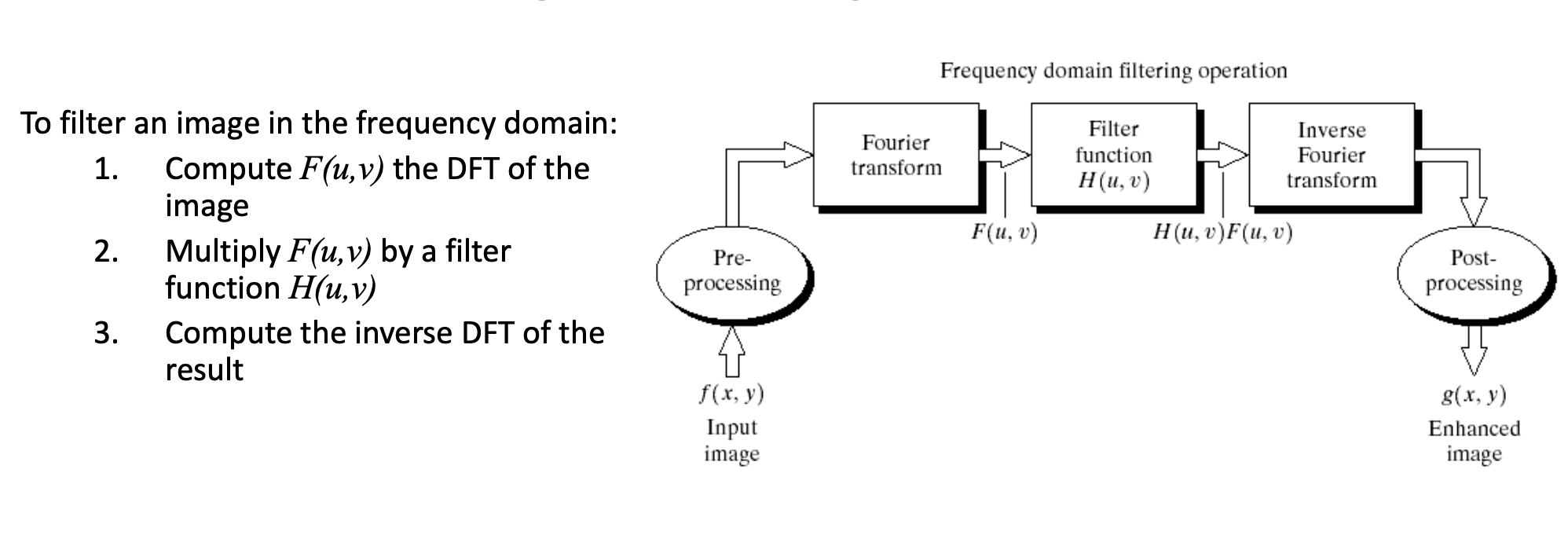

Convolution Theorem

- Convolution in the space domain is equivalent to multiplication in the frequency domain.

- Multiplication in the space domain is equivalent to convolution in the frequency domain.
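The first statement can be verified numerically. Note the assumption: the discrete Fourier transform corresponds to circular (periodic) convolution, so the direct version below wraps around the borders.

```python
import numpy as np

rng = np.random.default_rng(0)
f = rng.random((8, 8))
h = rng.random((8, 8))

def circular_convolve2d(f, h):
    """Direct circular 2D convolution, the kind the DFT implements."""
    n, m = f.shape
    out = np.zeros((n, m))
    for y in range(n):
        for x in range(m):
            for i in range(n):
                for j in range(m):
                    out[y, x] += f[i, j] * h[(y - i) % n, (x - j) % m]
    return out

spatial = circular_convolve2d(f, h)
# Multiply the spectra, then transform back to the space domain
via_fft = np.real(np.fft.ifft2(np.fft.fft2(f) * np.fft.fft2(h)))
print(np.allclose(spatial, via_fft))   # True
```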

Gaussian Low-Pass Filter

- Used to connect broken text

Gaussian High-Pass Filter

- Image restoration

Lecture 4 — Morphological Operations

- Morphological Operations are based on set theory (inclusion, union, difference, etc.)

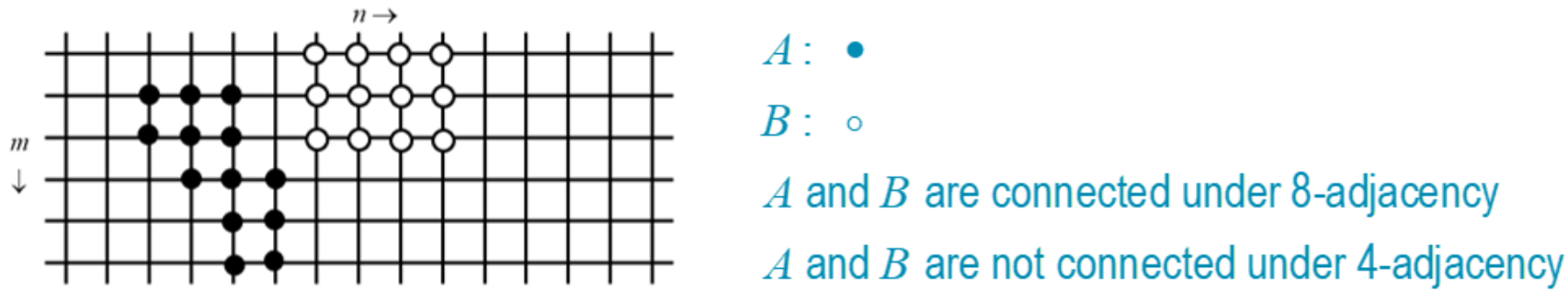

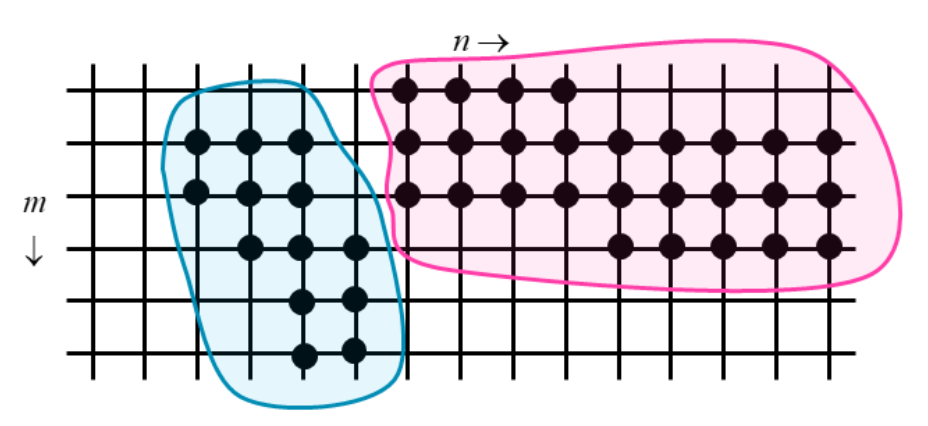

Neighborhoods and Adjacents

- Two pixels $p = (x_p, y_p)$ and $q = (x_q, y_q)$ are:

  - 4-connected if $|x_p - x_q| + |y_p - y_q| = 1$

  - 8-connected if $\max(|x_p - x_q|, |y_p - y_q|) = 1$

- Two pixels $p$ and $q$ are connected in region $A$ if a path exists between $p$ and $q$ entirely contained in $A$.

- The connected components of $A$ are the subsets of $A$ in which:

  - all pixels are connected in $A$,

  - all pixels in $A$ not belonging to the subset are not connected to that subset.
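A sketch of connected-component labeling by breadth-first search, to show how the choice between 4- and 8-connectivity changes the result. The function and the diagonal test mask are illustrative, not the lecture's algorithm.

```python
from collections import deque
import numpy as np

N4 = [(-1, 0), (1, 0), (0, -1), (0, 1)]
N8 = N4 + [(-1, -1), (-1, 1), (1, -1), (1, 1)]

def label_components(mask, neighbors):
    """Label connected foreground regions 1, 2, ... using breadth-first search."""
    labels = np.zeros(mask.shape, dtype=int)
    count = 0
    for start in zip(*np.nonzero(mask)):
        if labels[start]:
            continue                           # already part of a component
        count += 1
        labels[start] = count
        queue = deque([start])
        while queue:
            y, x = queue.popleft()
            for dy, dx in neighbors:
                ny, nx = y + dy, x + dx
                inside = 0 <= ny < mask.shape[0] and 0 <= nx < mask.shape[1]
                if inside and mask[ny, nx] and not labels[ny, nx]:
                    labels[ny, nx] = count
                    queue.append((ny, nx))
    return labels, count

# Three pixels on a diagonal: only diagonal neighbors touch
mask = np.array([[1, 0, 0],
                 [0, 1, 0],
                 [0, 0, 1]], dtype=bool)
_, n4 = label_components(mask, N4)
_, n8 = label_components(mask, N8)
```

With 4-connectivity the diagonal falls apart into three components; with 8-connectivity it is a single one.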

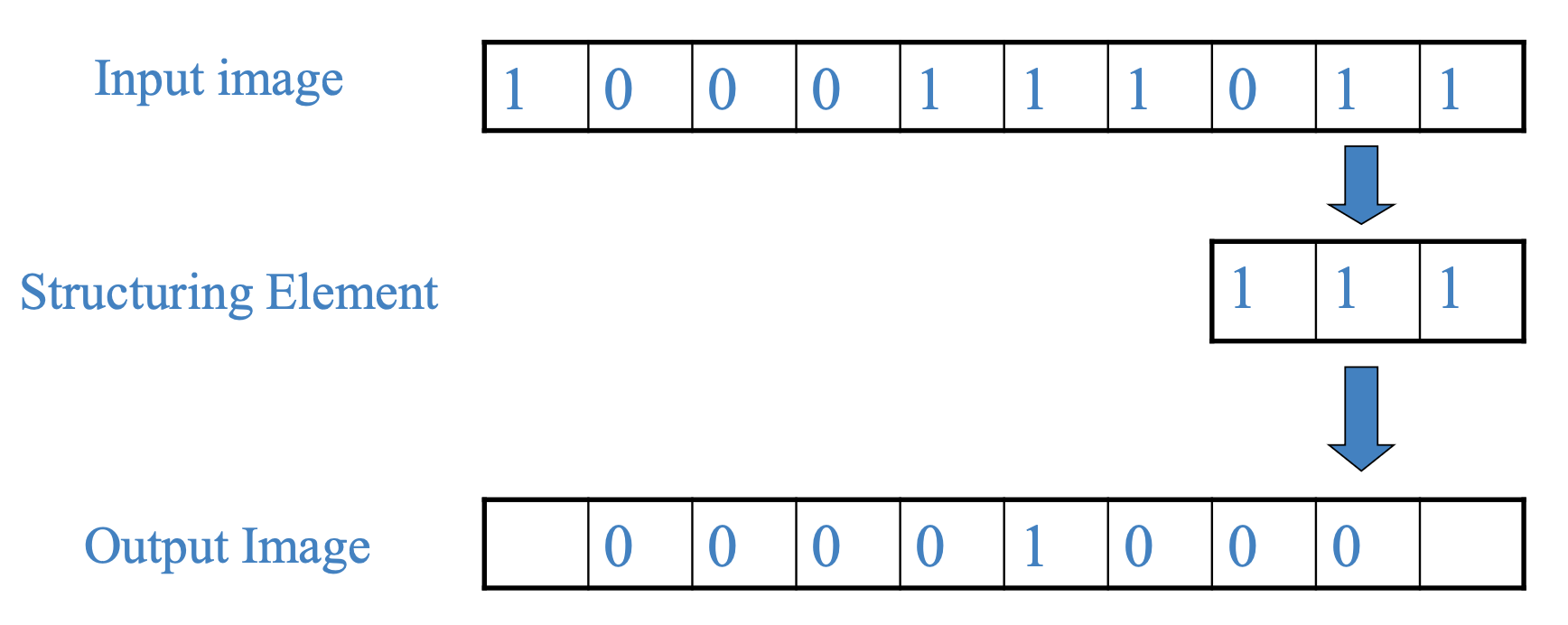

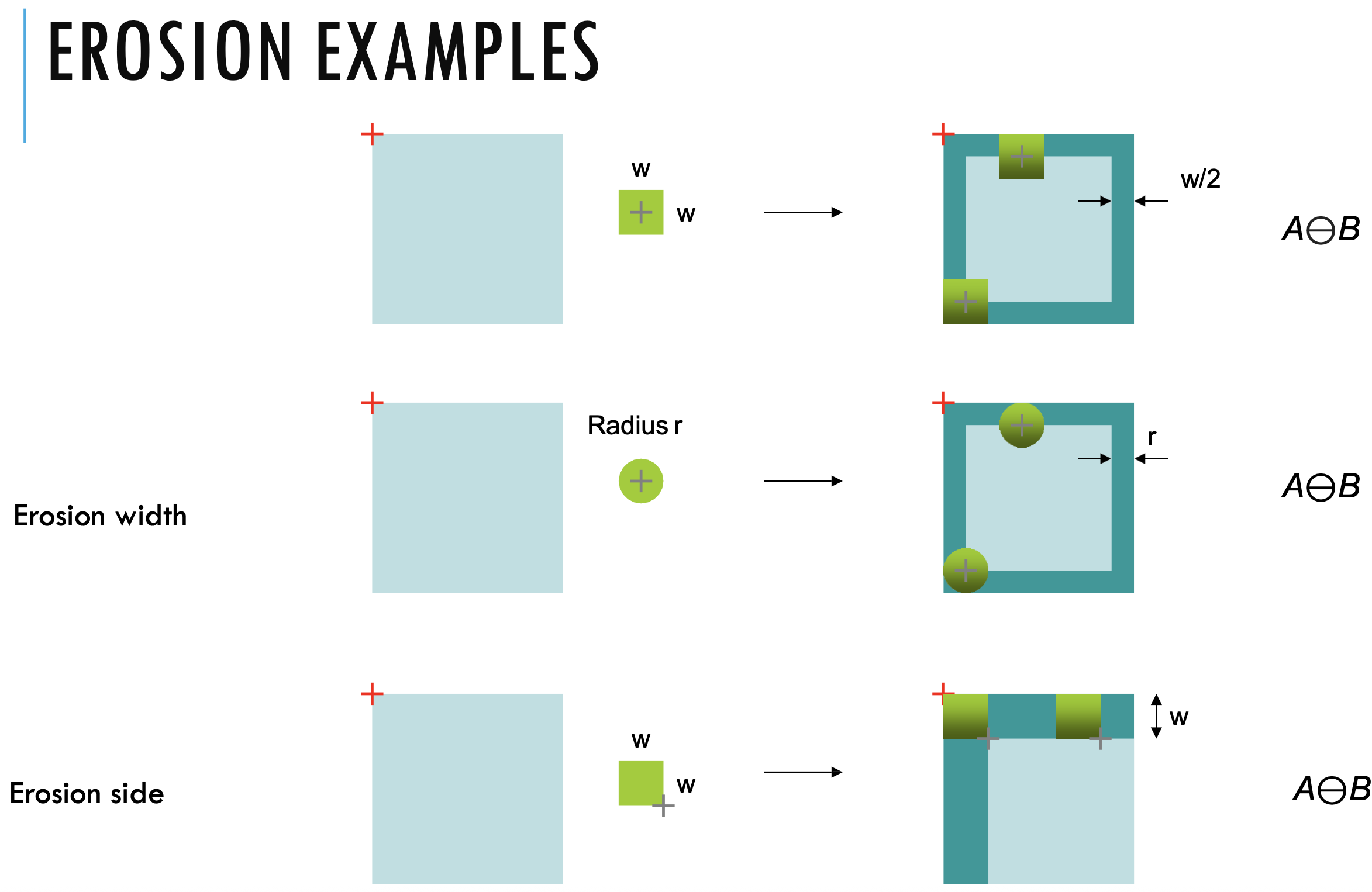

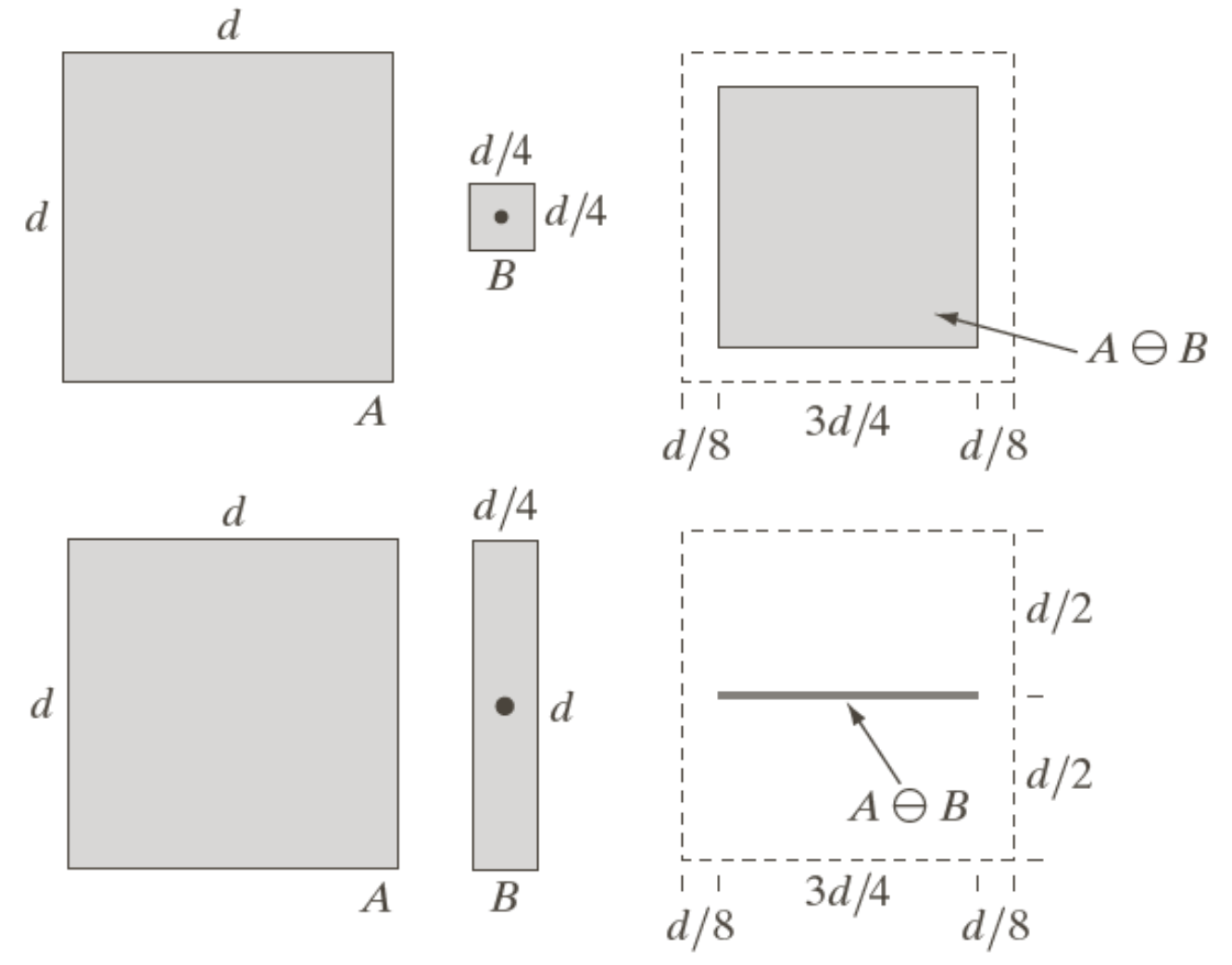

Erosion


- Enlarges holes,

- Breaks thin parts,

- shrinks objects

- is not commutative

- The structuring element must match completely: a pixel survives only if the element fits entirely inside the object

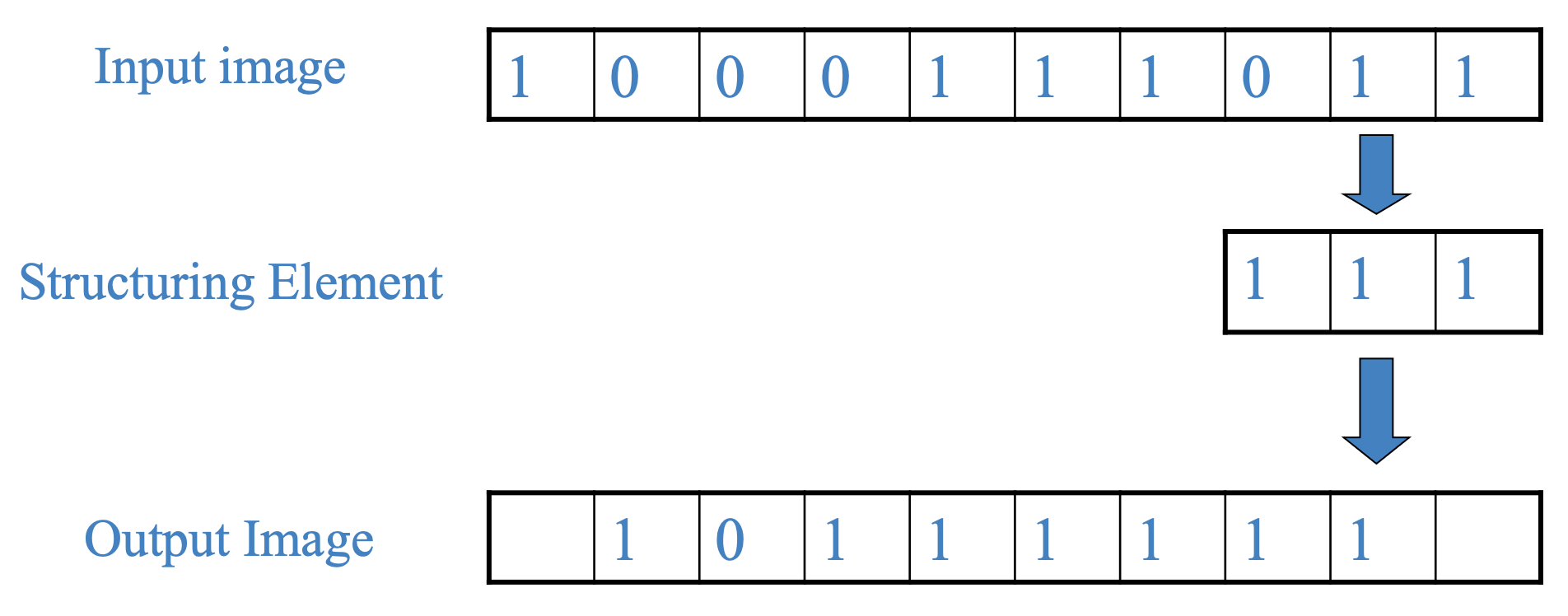

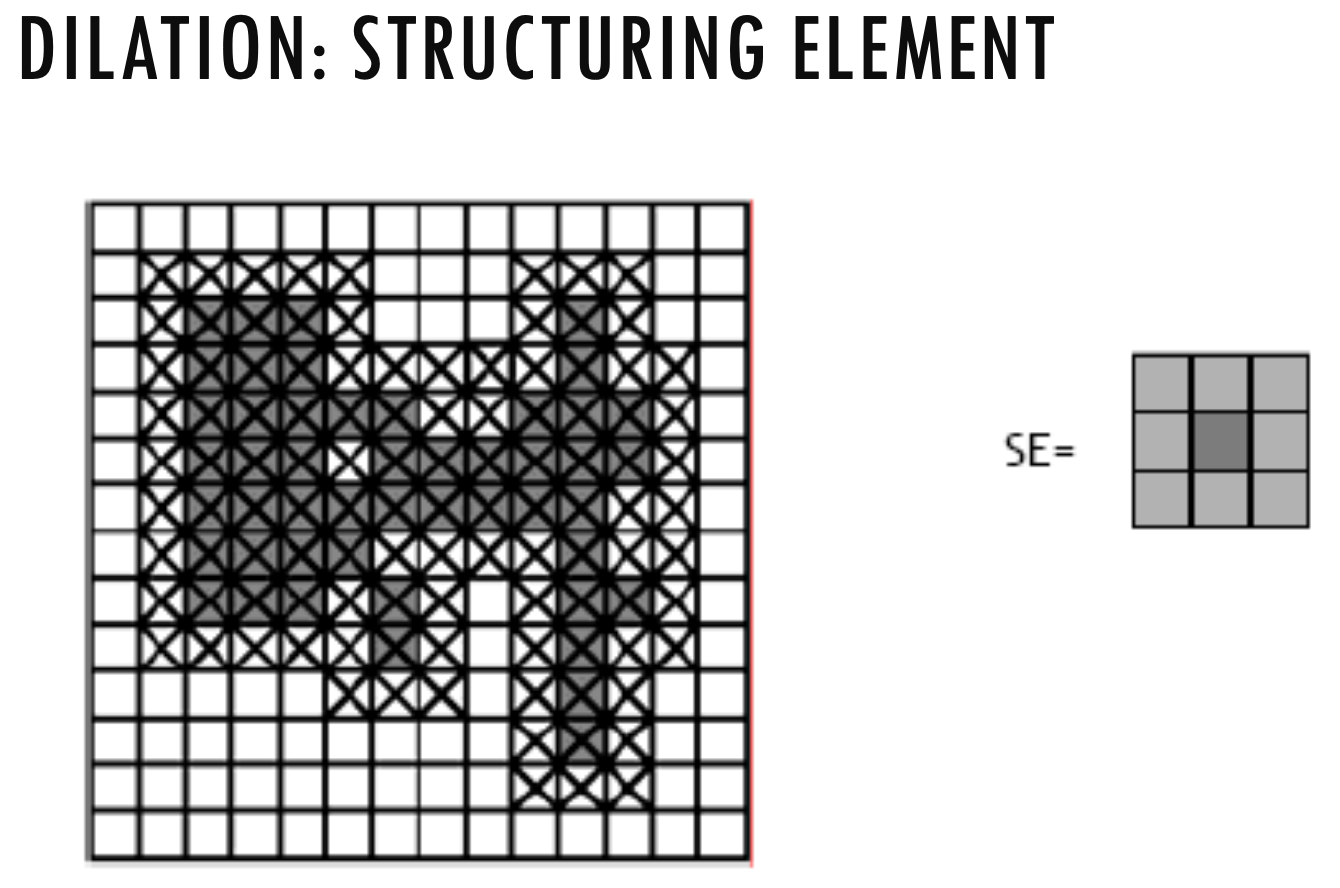

Dilation


- Filling of holes of certain shape and size

- The structuring element must match at least one element: a pixel is set if the element overlaps the object anywhere

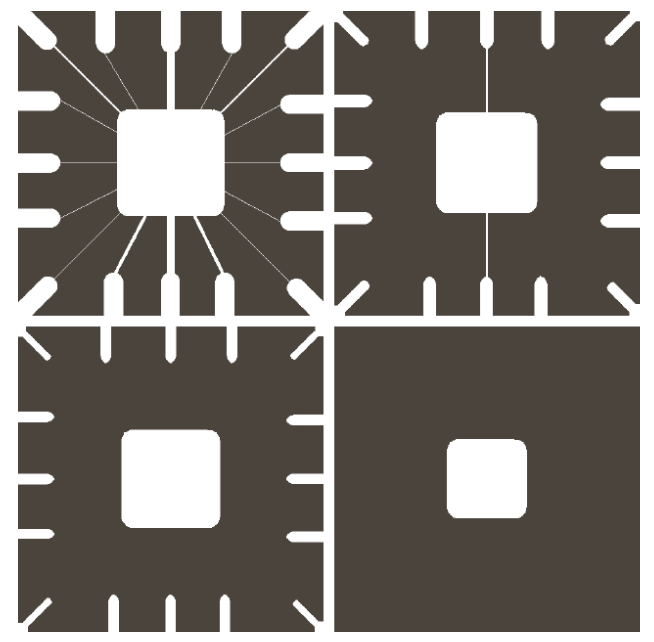

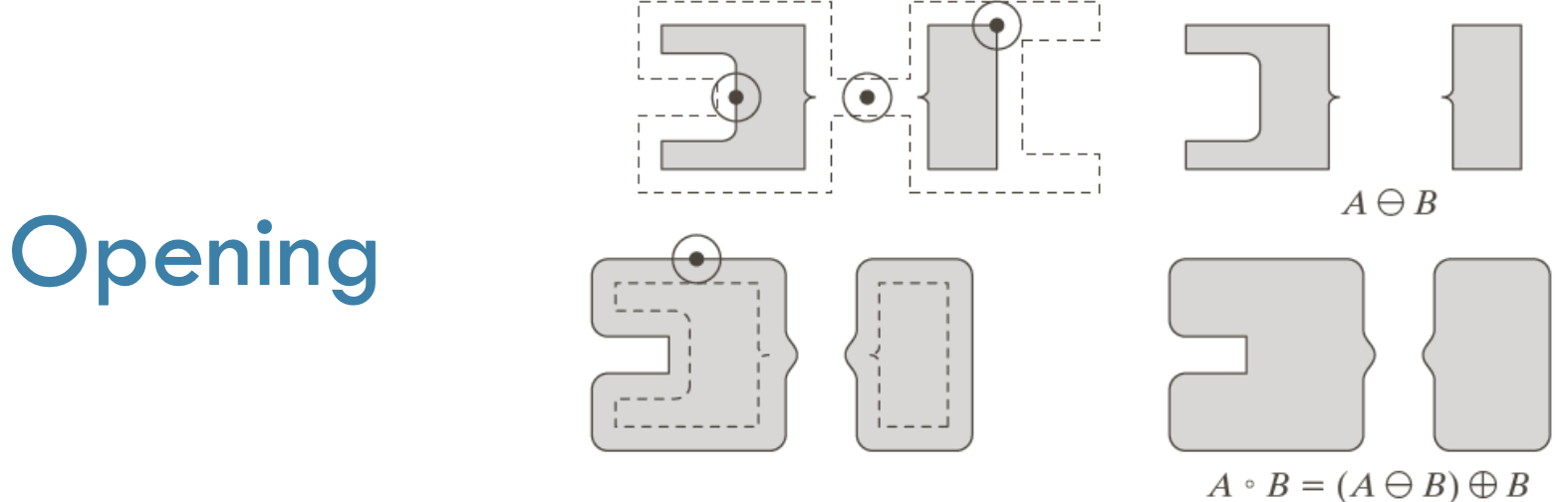

Opening

- erosion, then dilation

Hit or Miss

- Find the location of one shape among a set of shapes ("template matching")

- Shape recognition

Boundary Extraction

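- We can simply do $\beta(A) = A - (A \ominus B)$: subtract the eroded set from the original, which leaves only the boundary pixels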
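A compact sketch of binary erosion, dilation, opening and boundary extraction. It assumes a square, odd-sized, symmetric structuring element (so the reflection in the definition of dilation can be ignored); the helper names are made up for this example.

```python
import numpy as np

def erode(mask, se):
    """Keep a pixel only if the structuring element fits completely."""
    r = se.shape[0] // 2
    padded = np.pad(mask, r, mode="constant", constant_values=False)
    out = np.ones(mask.shape, dtype=bool)
    for dy, dx in zip(*np.nonzero(se)):
        out &= padded[dy:dy + mask.shape[0], dx:dx + mask.shape[1]]
    return out

def dilate(mask, se):
    """Set a pixel if the structuring element hits at least one object pixel."""
    r = se.shape[0] // 2
    padded = np.pad(mask, r, mode="constant", constant_values=False)
    out = np.zeros(mask.shape, dtype=bool)
    for dy, dx in zip(*np.nonzero(se)):
        out |= padded[dy:dy + mask.shape[0], dx:dx + mask.shape[1]]
    return out

se = np.ones((3, 3), dtype=bool)
mask = np.zeros((7, 7), dtype=bool)
mask[2:5, 2:5] = True                      # a 3x3 square object

eroded = erode(mask, se)                   # shrinks to the single center pixel
dilated = dilate(mask, se)                 # grows to a 5x5 square
opened = dilate(erode(mask, se), se)       # opening: erosion, then dilation
boundary = mask & ~eroded                  # A minus (A eroded by B)
```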

Lecture 5: Scale Space, Image Derivative and Edge Detection

- By changing the zoom-in/out ratio, we can see the different levels of information from the image.

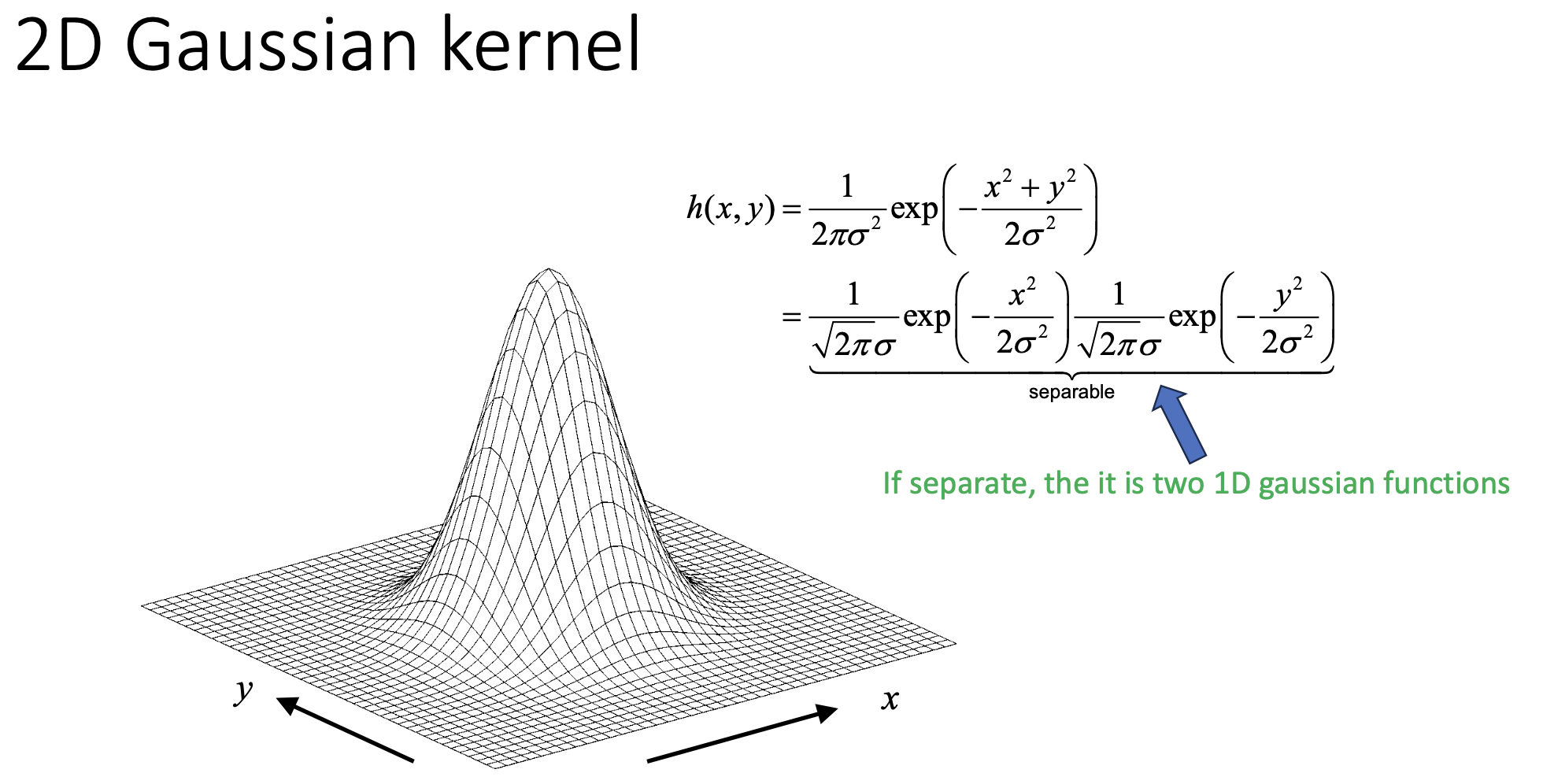

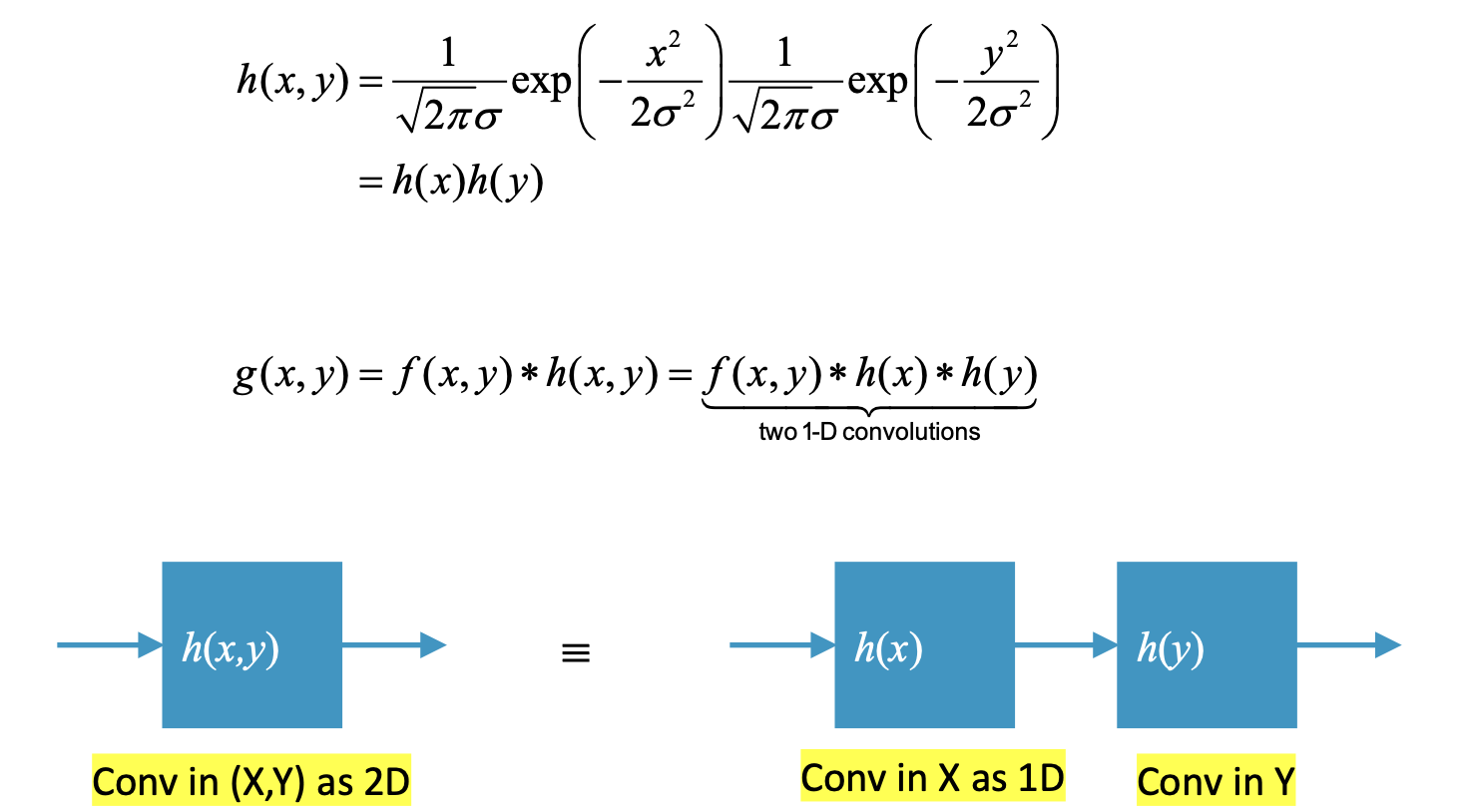

Scale Space Theory: Convolution with Gaussian

- The PSF of the operation is a Gaussian: $g_\sigma(x, y) = \frac{1}{2\pi\sigma^2} e^{-\frac{x^2 + y^2}{2\sigma^2}}$

- scale is parametrized by $\sigma$

Properties of Convolution

- Commutativity: $f * g = g * f$

- Associativity: $(f * g) * h = f * (g * h)$

Properties of Gaussian Functions

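- Separability property: The Gaussian is the only PSF that satisfies $g_{\sigma_1} * g_{\sigma_2} = g_{\sqrt{\sigma_1^2 + \sigma_2^2}}$

  - This means convolving with two Gaussians sequentially is equivalent to convolving with a single Gaussian whose variance is the sum.

- Fourier transform property:

  - Spatial domain: $g_\sigma(x, y) = \frac{1}{2\pi\sigma^2} e^{-\frac{x^2 + y^2}{2\sigma^2}}$

  - Frequency domain: $G_\sigma(u, v) = e^{-2\pi^2\sigma^2(u^2 + v^2)}$, again a Gaussian

- Convolution in Fourier Domain: $G_{\sigma_1}(u, v)\, G_{\sigma_2}(u, v) = G_{\sqrt{\sigma_1^2 + \sigma_2^2}}(u, v)$

  - The only PSF that satisfies this is a Gaussian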

An increase of scale

- blurs the image,

- gives rise to less structure,

- decreases noise

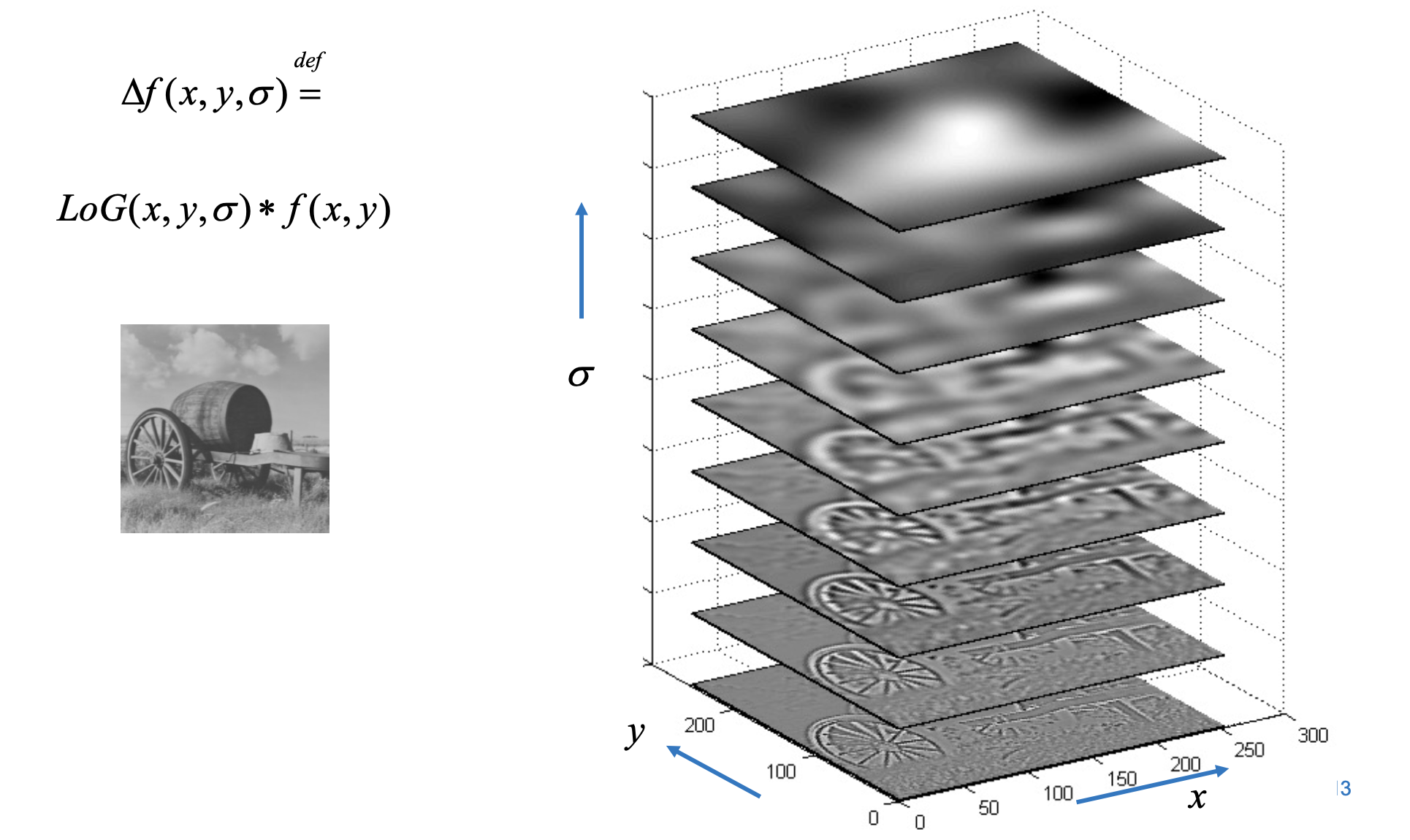

Scale space applications: SIFT

Key applications of scale space:

- Edge and blob detection,

- Feature extraction (e.g., SIFT, SURF),

- Object recognition and tracking.

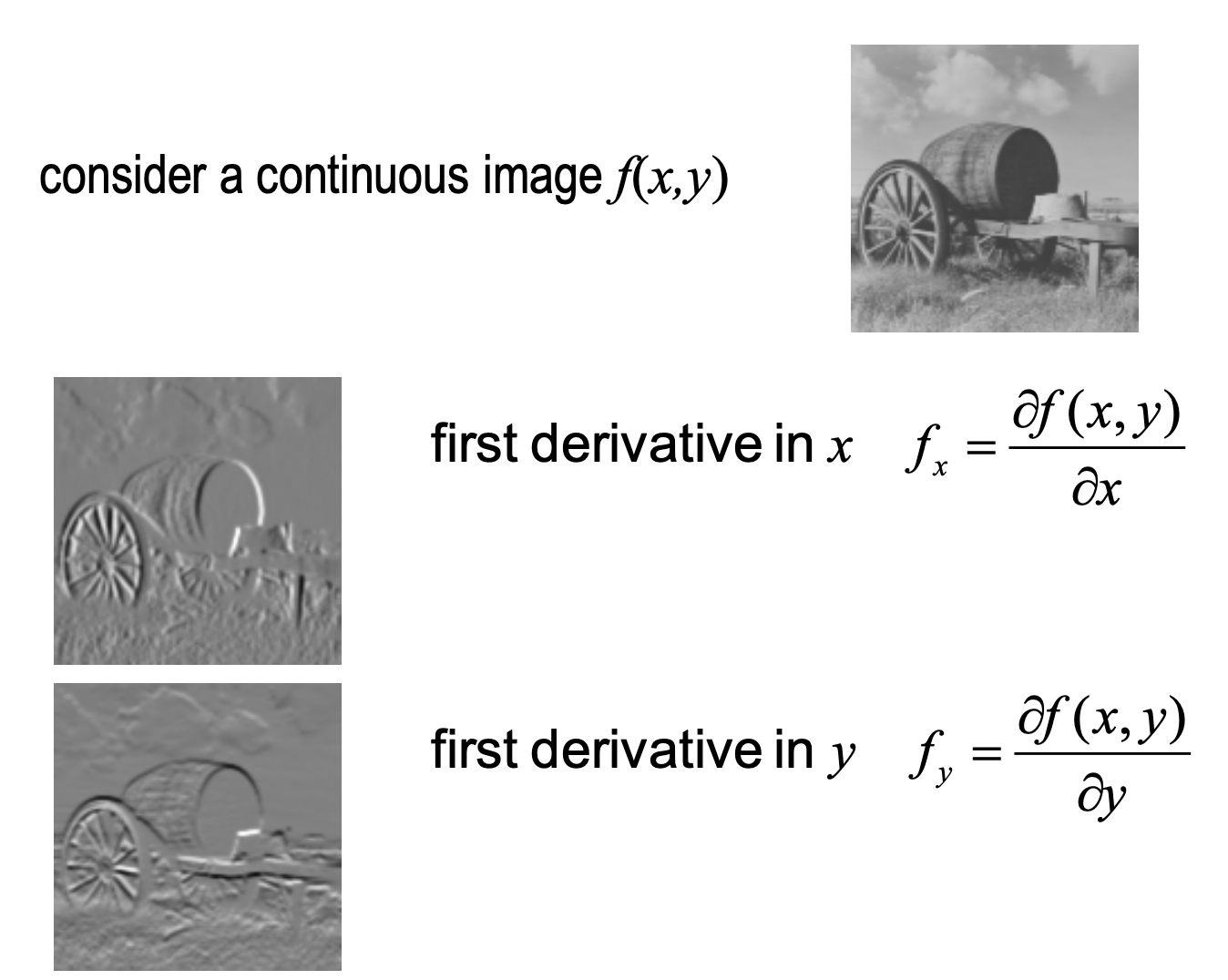

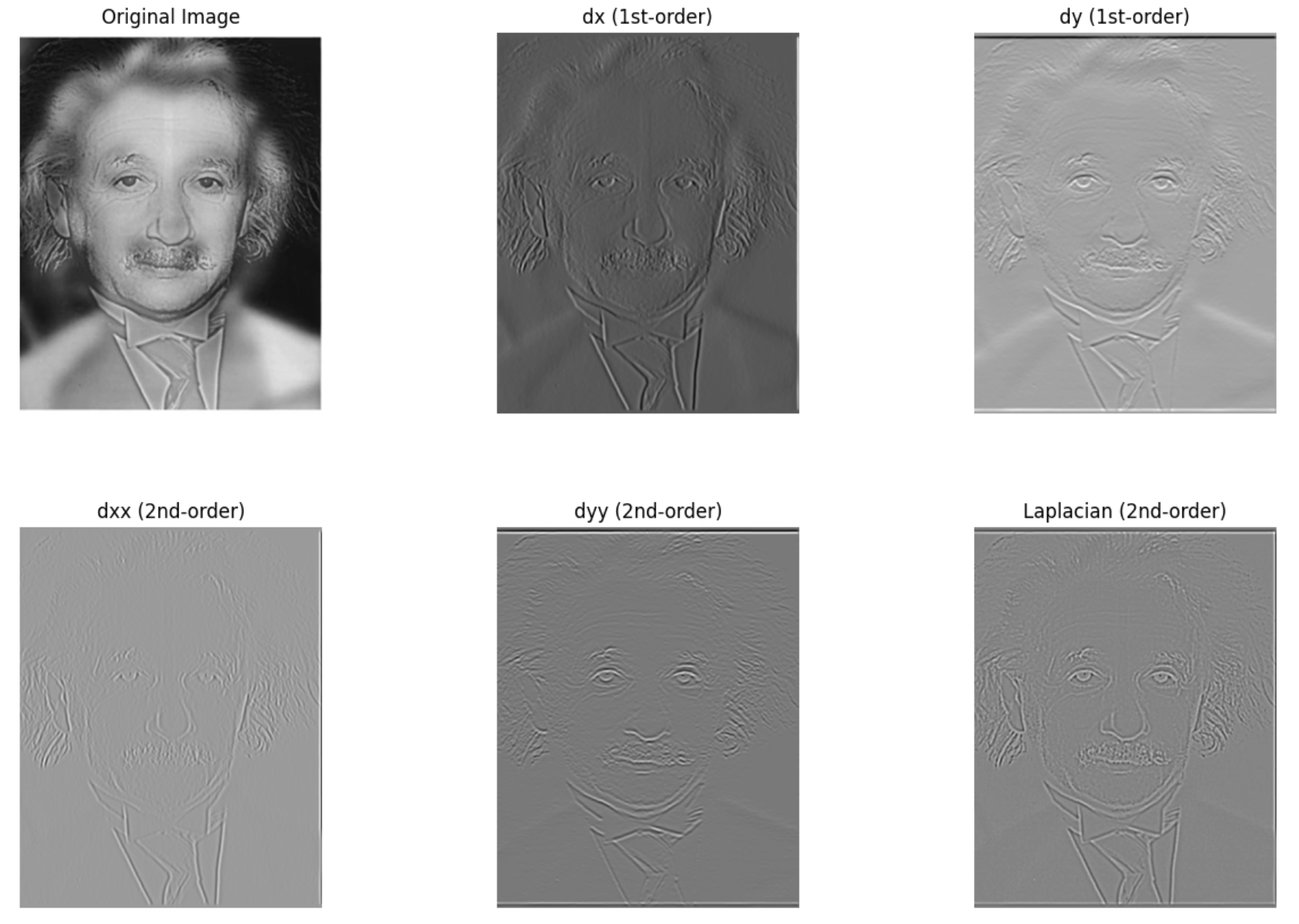

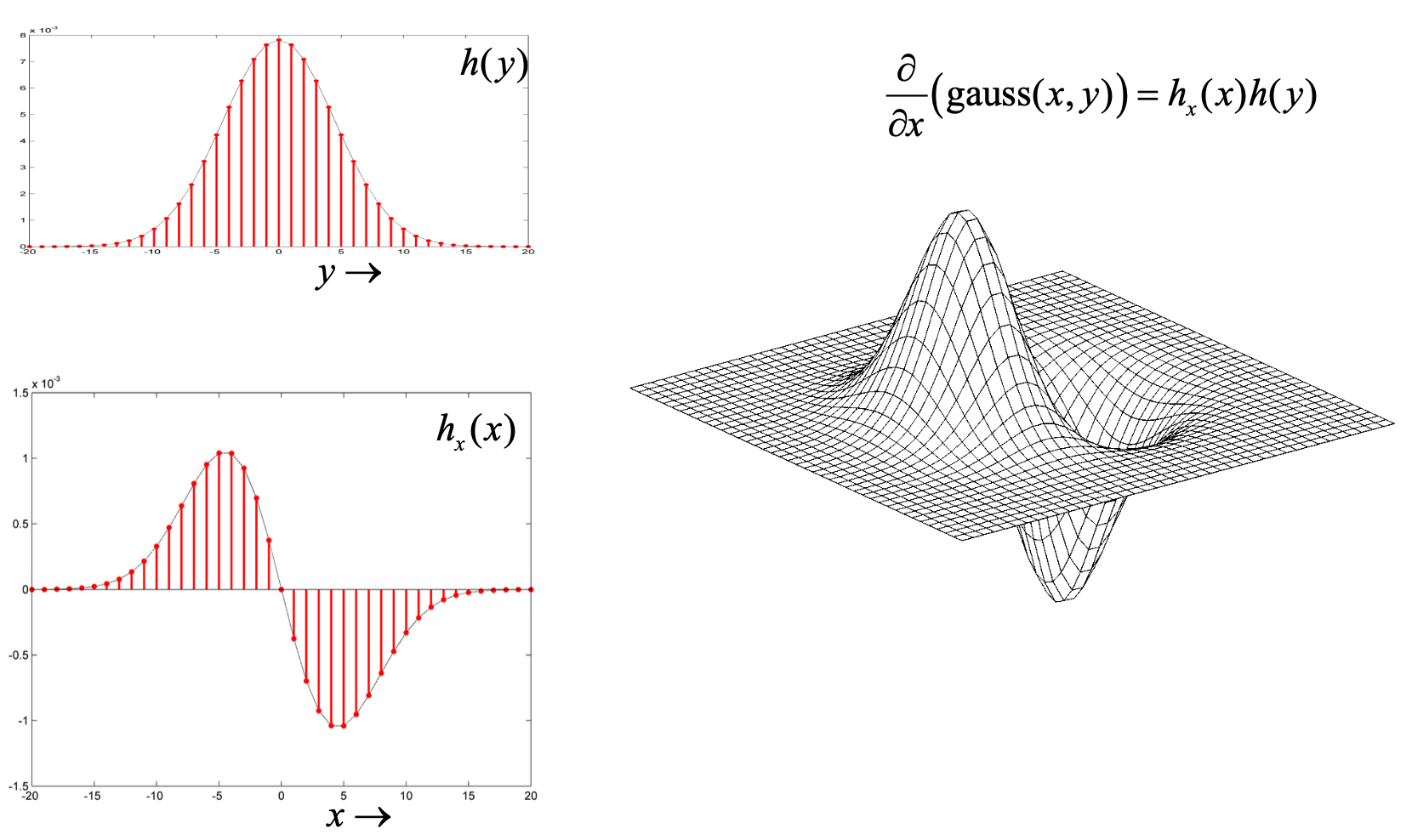

Gaussian Convolution, Image Derivative

Gradient Vector

- Is in the direction of change in intensity (perpendicular to the contour lines),

- Towards the direction of the higher intensity.

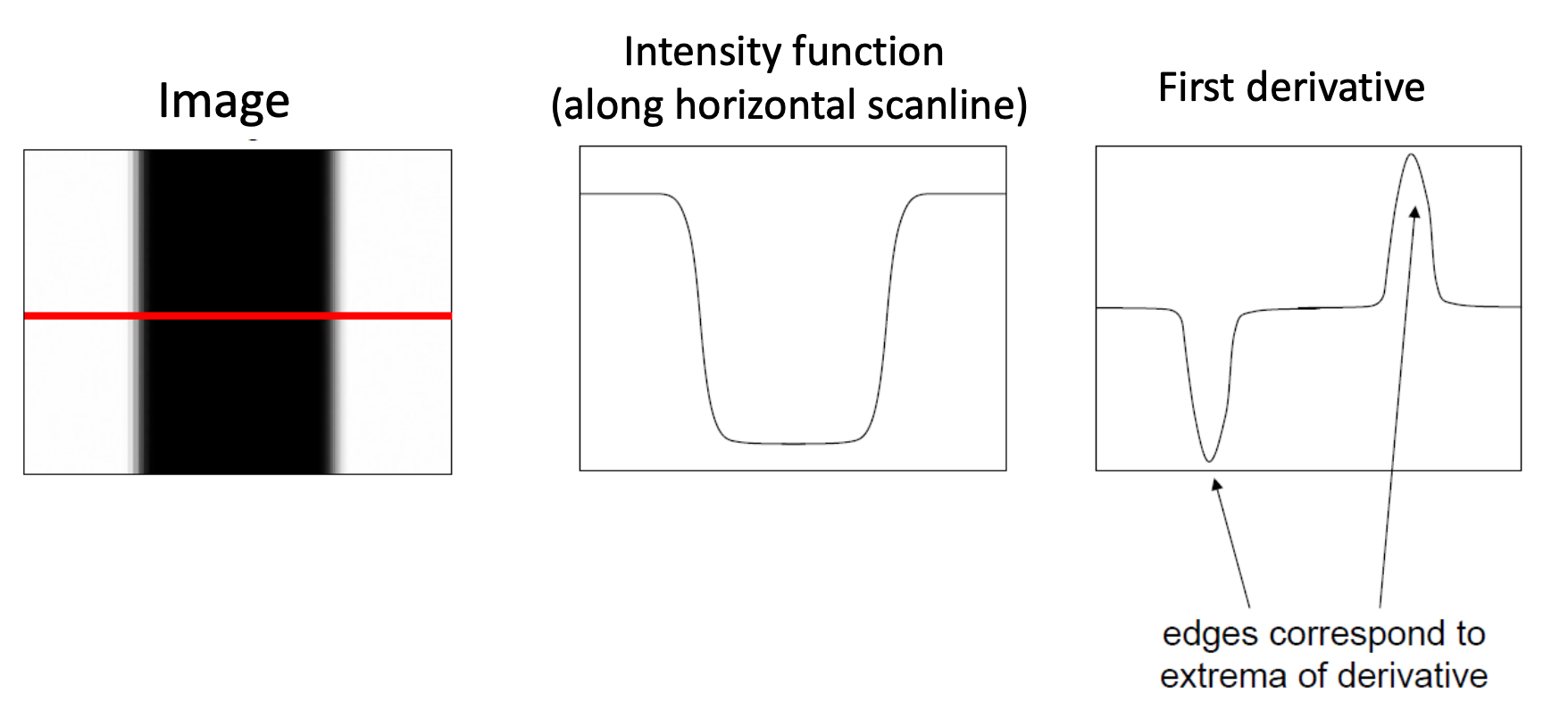

First Derivatives

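- Gradient Magnitude: $|\nabla f| = \sqrt{f_x^2 + f_y^2}$

- Gradient Argument: $\theta = \operatorname{atan2}(f_y, f_x)$

- First derivative in the x direction is a convolution with the derivative of a Gaussian: $f_x = f * \frac{\partial g_\sigma}{\partial x}$

- Same for the first derivative in the y direction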

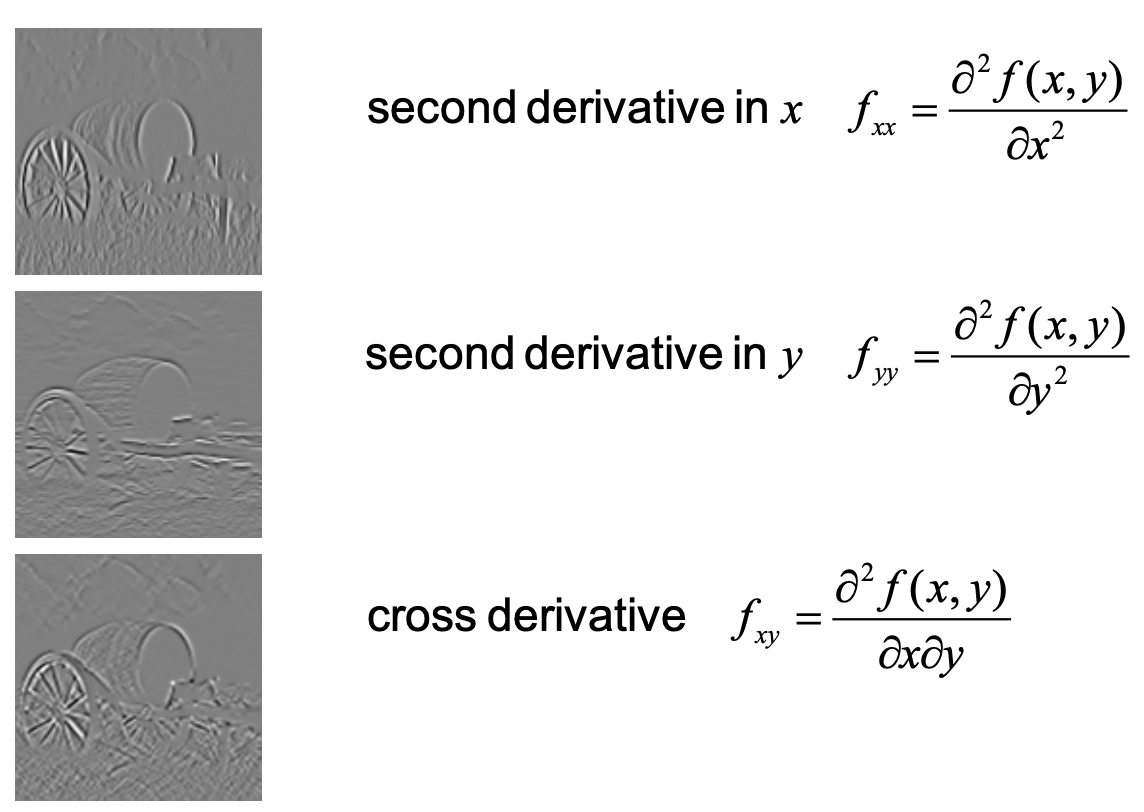

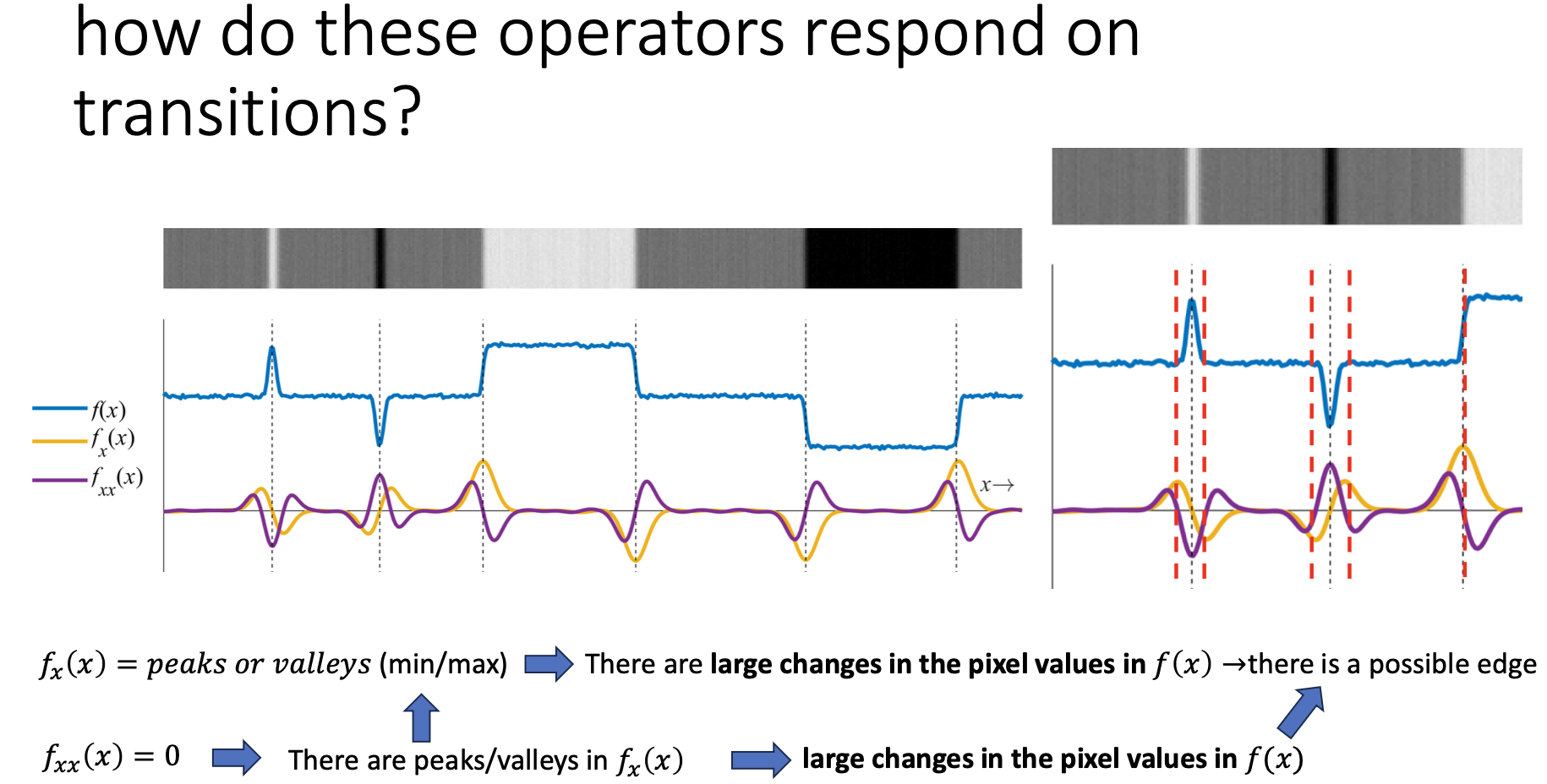

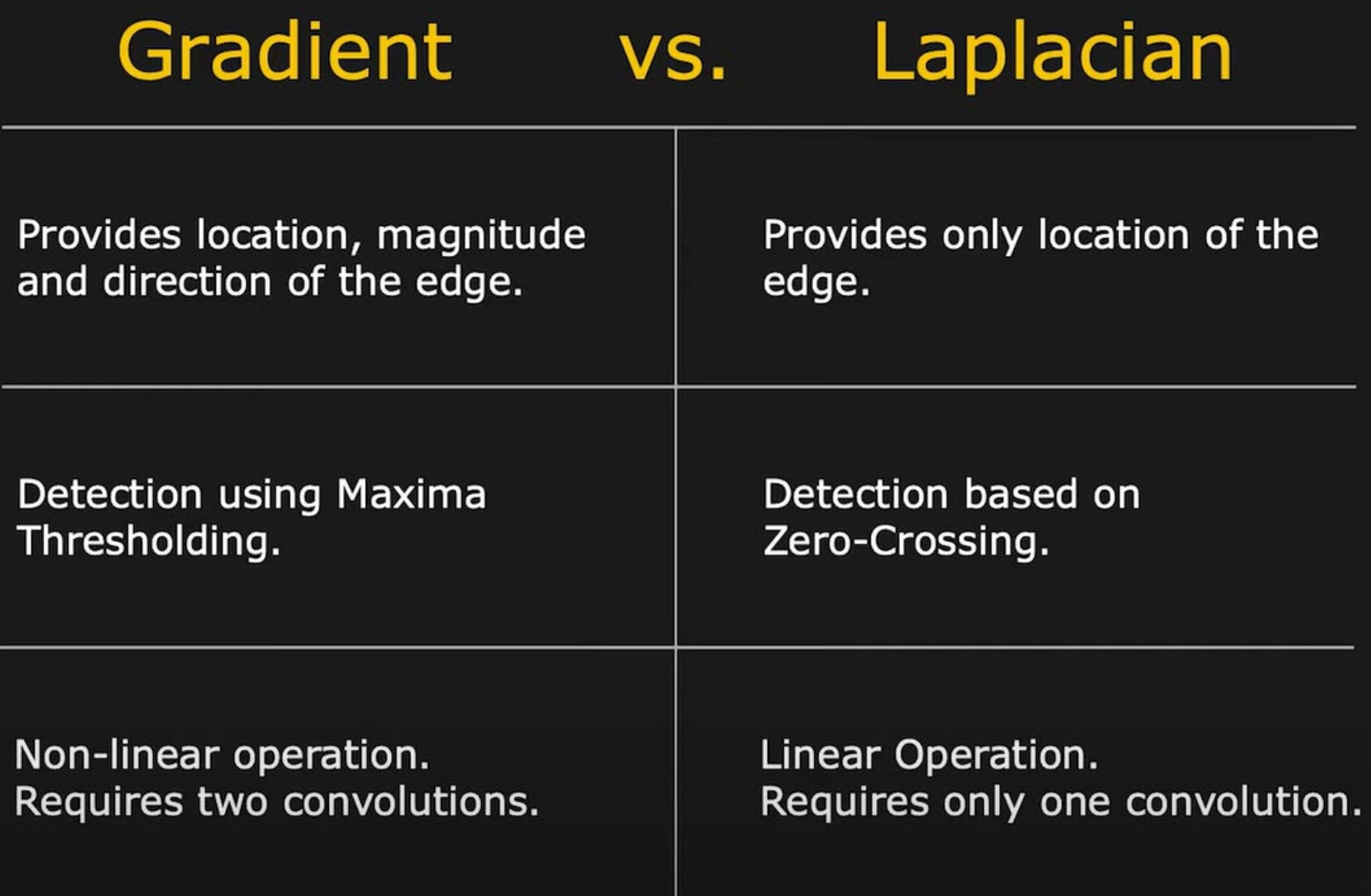

Second Derivatives

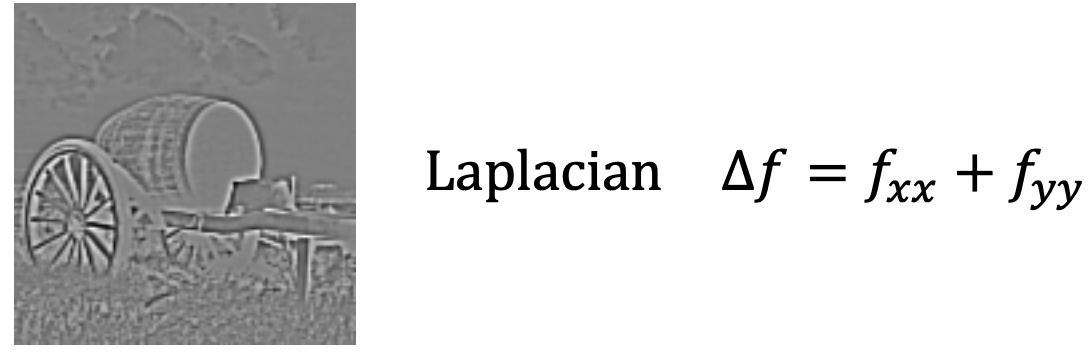

Laplacian

- Laplacian Zero-Crossing: all locations where $\nabla^2 f = f_{xx} + f_{yy}$ is zero

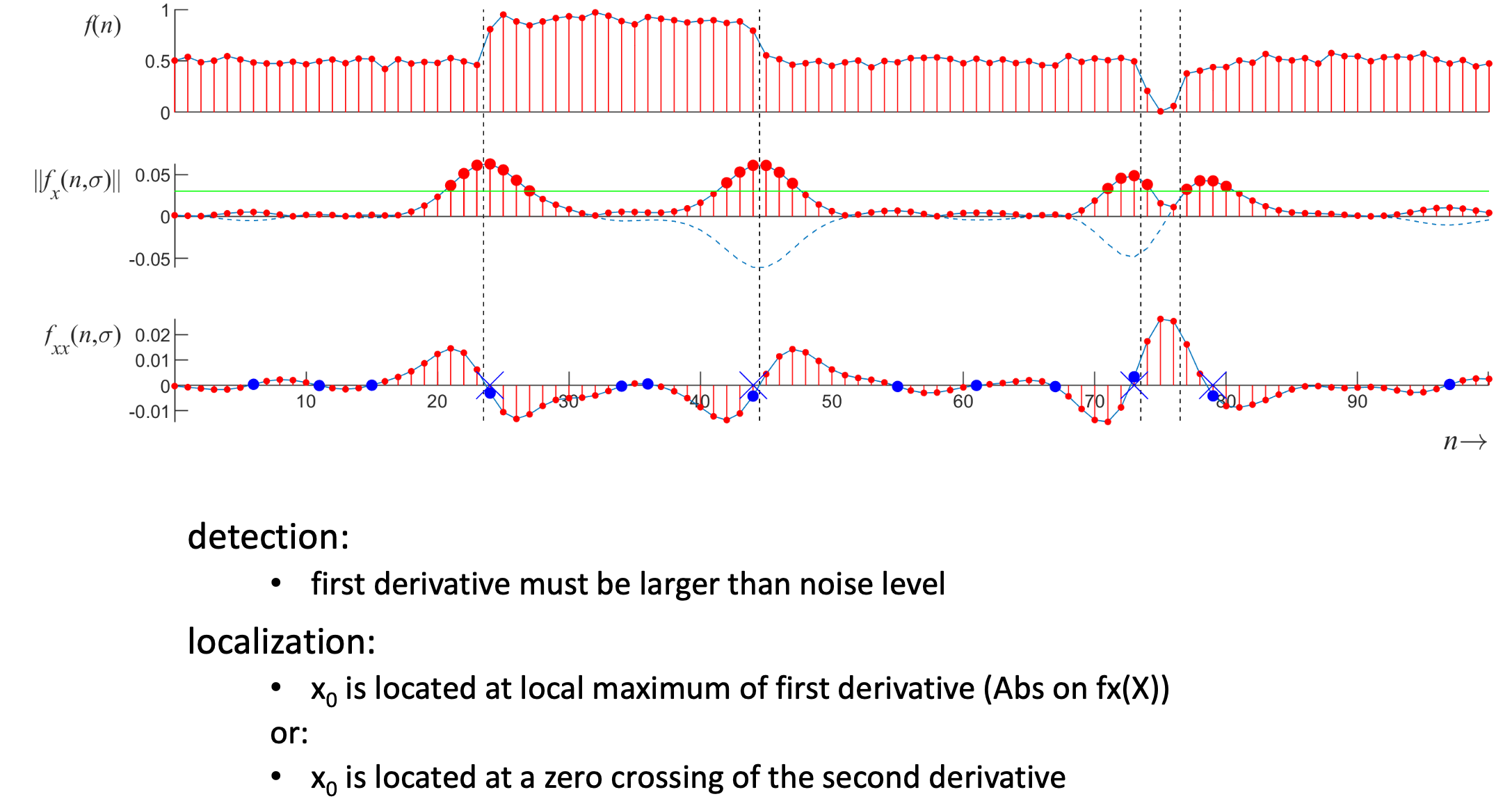

1D Edge Detection

- no edge

- edge

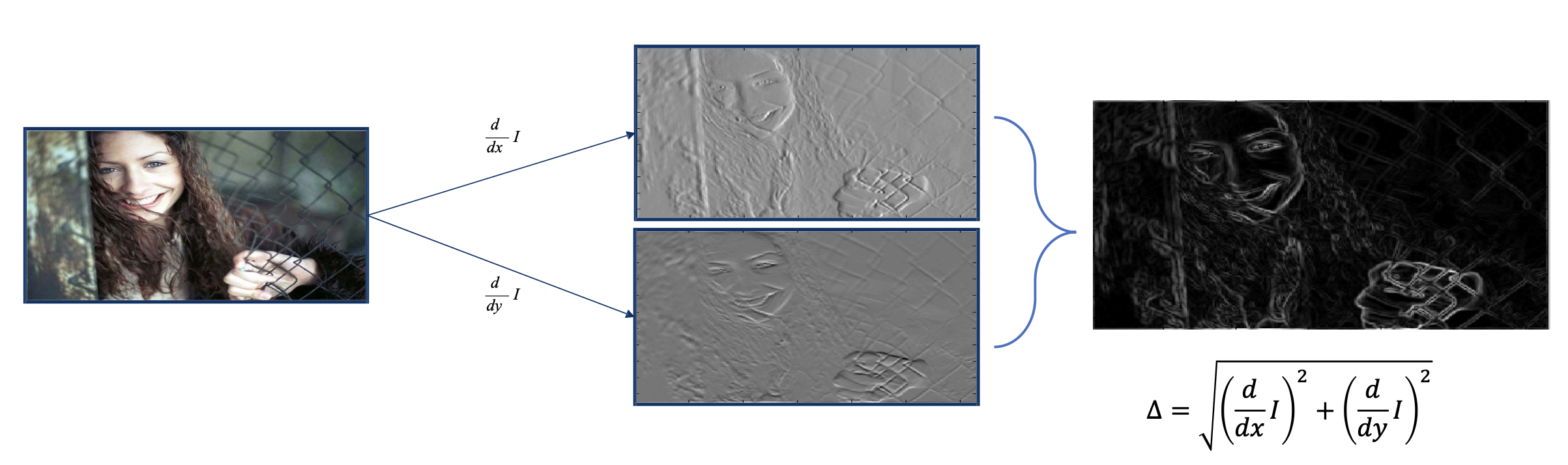

2D Edge Detection

- An edge is a place of rapid change in the image intensity function

- Affected by noise

Derivative Theorem of Convolution

$\frac{\partial}{\partial x}(f * h) = f * \frac{\partial h}{\partial x}$

- $f$ is the image

- $h$ is the convolution kernel

To calculate the image derivative:

1. Take the corresponding derivative of the convolution kernel (= Gaussian)

2. Use the result of (1) to do the convolution with your image

- As $\sigma$ increases,

  - more pixels are involved in the average

  - the image is more blurred

  - noise is more effectively suppressed

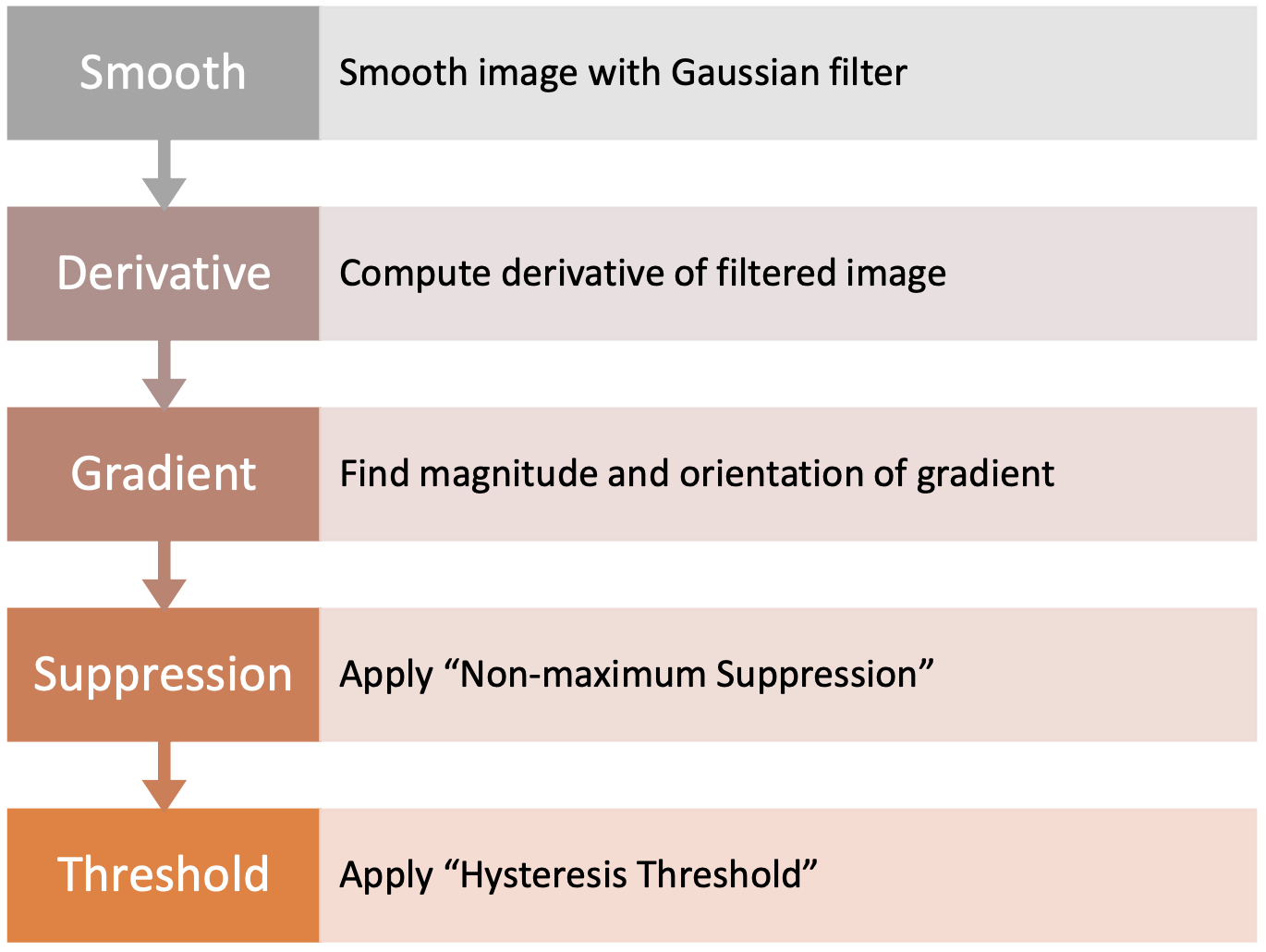

Edge Detectors

Steps

- Compute derivatives in x and y directions

- Find gradient magnitude

- Threshold gradient magnitude ⇒ edges

How does Sobel differ from Prewitt?

- Adds extra weight (2) to the central row/column.

- introduces a smoothing effect (weighted average), making Sobel less sensitive to noise than Prewitt.
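The three steps (derivatives, gradient magnitude, threshold) with the Sobel kernels can be sketched as follows. The threshold value, the edge replication at the border and the step-edge test image are assumptions for the example.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],     # extra weight 2 on the central row
                    [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T

def correlate3(image, kernel):
    """3x3 correlation with edge replication (no kernel flip)."""
    padded = np.pad(image, 1, mode="edge")
    h, w = image.shape
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def sobel_edges(image, threshold):
    gx = correlate3(image, SOBEL_X)          # derivative in x
    gy = correlate3(image, SOBEL_Y)          # derivative in y
    magnitude = np.hypot(gx, gy)             # gradient magnitude
    return magnitude > threshold, magnitude  # threshold => edges

step = np.zeros((6, 6))
step[:, 3:] = 1.0                            # vertical step edge between columns 2 and 3
edges, mag = sobel_edges(step, threshold=2.0)
```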

Finding Zero Crossings

- Look at the slope of each zero-crossing of the filtered image, which is the result of the convolution.

- To mark an edge

- compute slope of zero-crossing

- Apply a threshold to slope

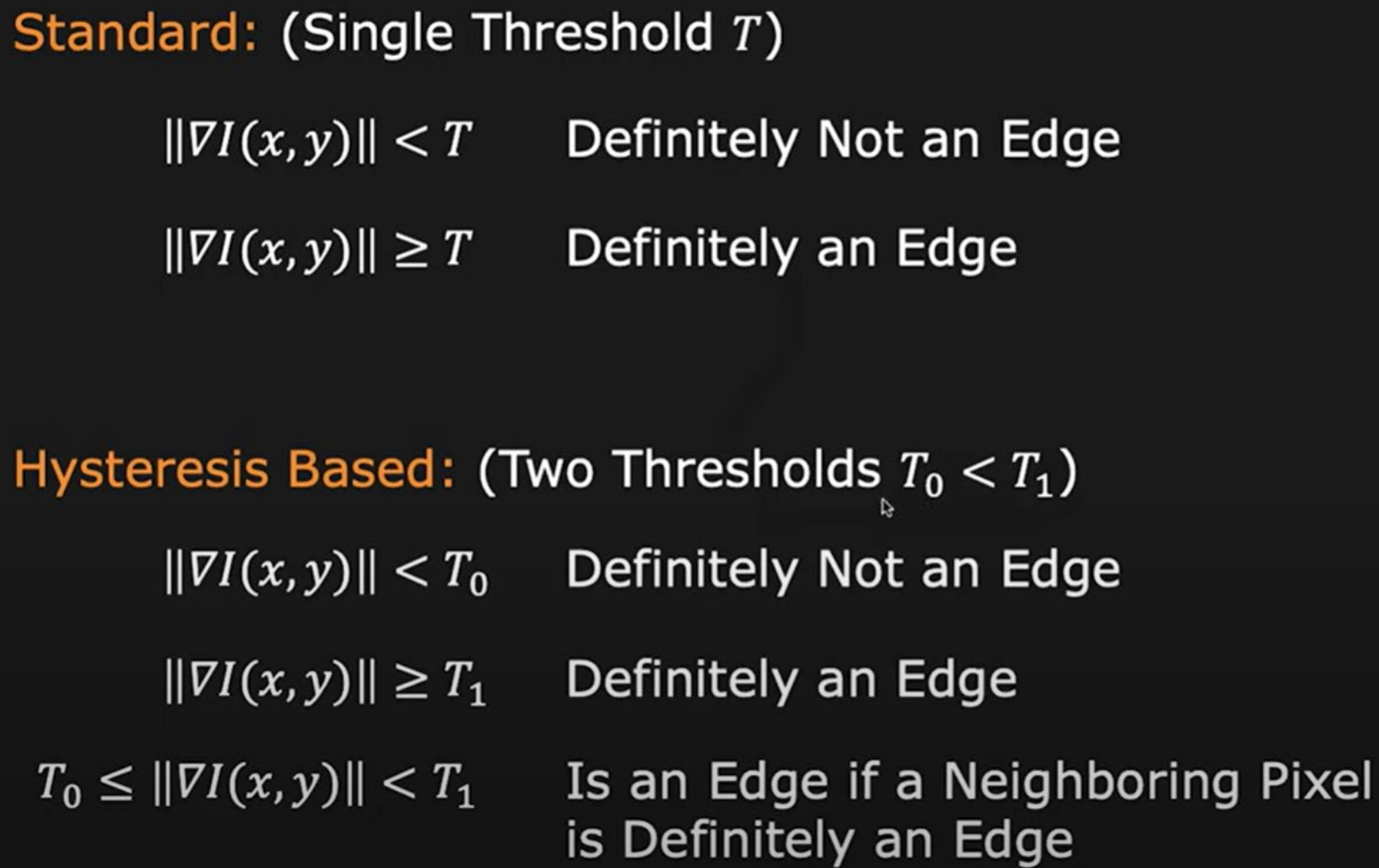

Canny Edge Detector

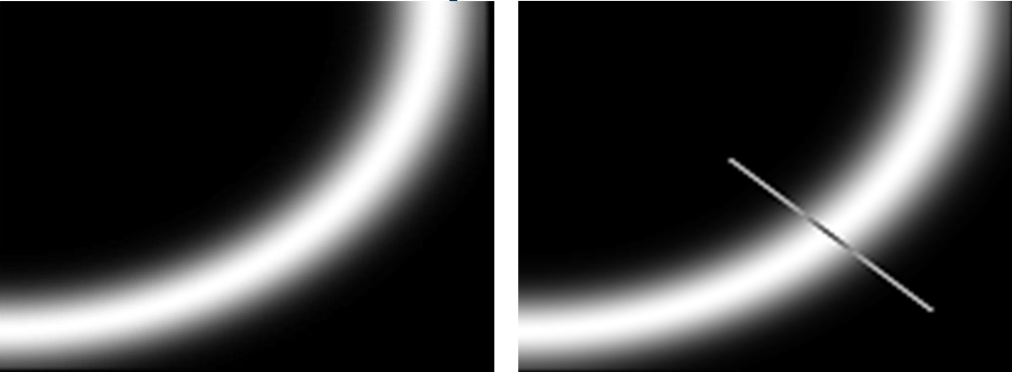

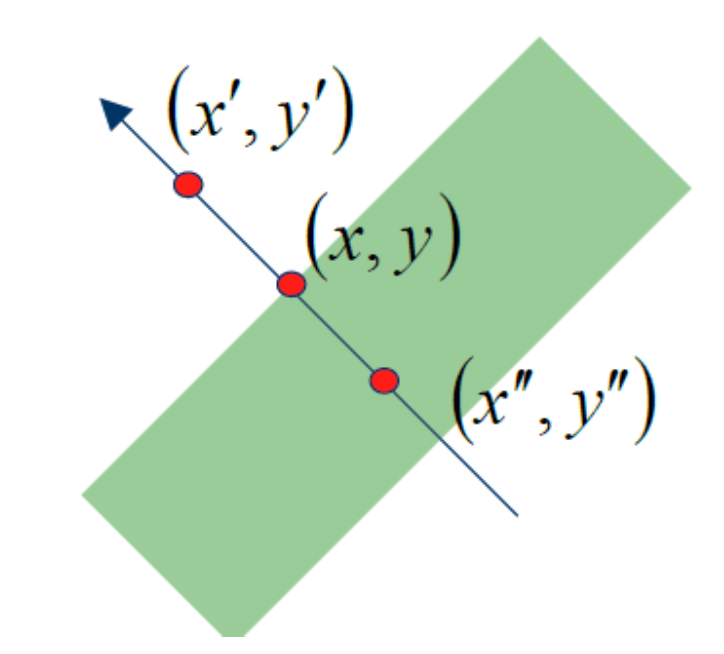

Non-Maximum Suppression (NMS)

- We wish to mark points along the curve where the magnitude is largest. We can do this by looking for a maximum along a slice normal to the curve (non-maximum suppression)

- These points should form a curve. There are then two algorithmic issues: at which point is the maximum, and where is the next one?

- Suppress the pixels in the gradient magnitude image that are not a local maximum.

- Each pixel is compared with its two neighbors along the direction normal to the edge.

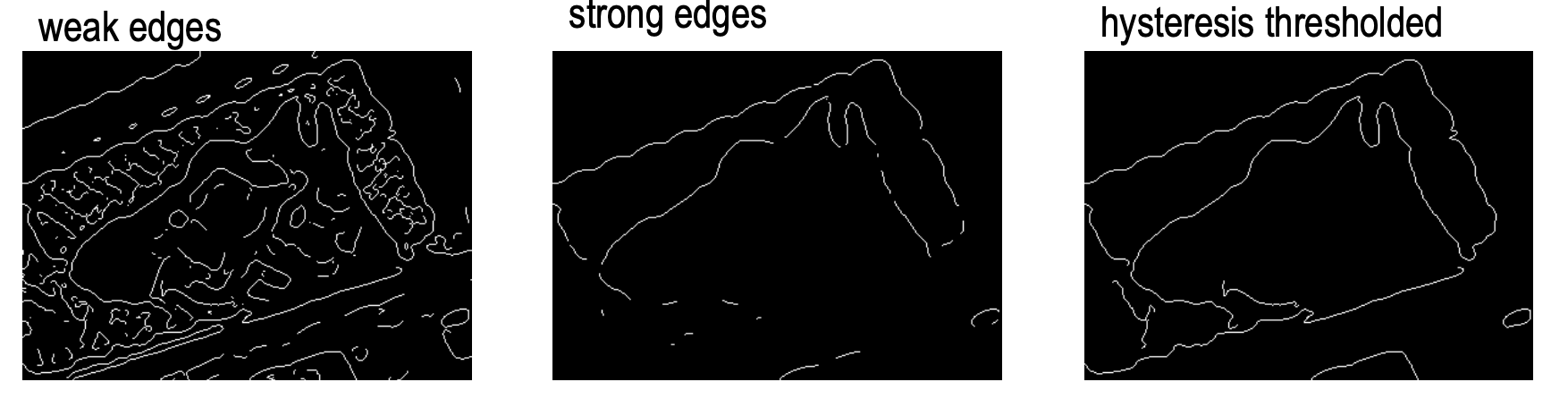

Hysteresis Thresholding

Summary

- Use two different thresholds to define strong edges and weak edges.

- Weak edges are accepted only if they are connected to at least one strong edge element.
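A sketch of hysteresis thresholding as described above: strong pixels seed a search that accepts weak pixels only when they are connected (here with 8-connectivity, an assumption) to a strong one. Thresholds and the test array are made up.

```python
from collections import deque
import numpy as np

def hysteresis(magnitude, low, high):
    """Keep strong edges, plus weak edges connected to a strong edge."""
    strong = magnitude >= high
    weak = (magnitude >= low) & ~strong
    edges = strong.copy()
    queue = deque(zip(*np.nonzero(strong)))   # start from every strong pixel
    h, w = magnitude.shape
    while queue:
        y, x = queue.popleft()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w and weak[ny, nx] and not edges[ny, nx]:
                    edges[ny, nx] = True      # weak pixel reached from a strong one
                    queue.append((ny, nx))
    return edges

mag = np.array([[0.0, 0.0, 0.0, 0.0, 0.0],
                [0.9, 0.5, 0.4, 0.0, 0.5],
                [0.0, 0.0, 0.0, 0.0, 0.0]])
edges = hysteresis(mag, low=0.3, high=0.8)
```

The two weak pixels next to the strong one are kept; the isolated weak pixel on the right is rejected.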

Lecture 6: Geometric Transformations

Linear Transformations

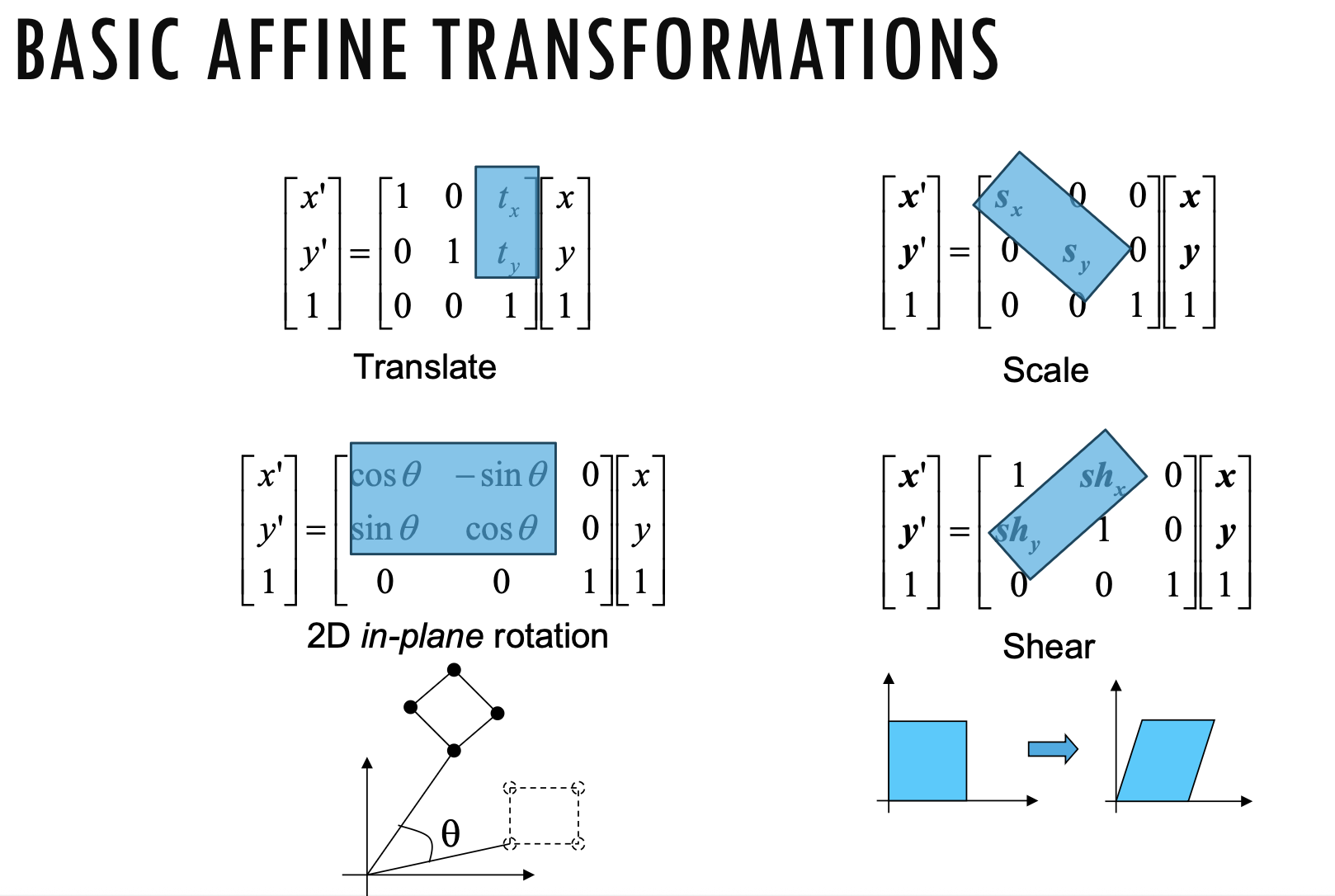

- Scaling, rotation and reflection can be combined as a 2D linear transformation

- Preserve shapes

Linear transformations are combinations of

- Scale,

- Rotation,

- Shear,

- Mirror

Properties of Linear Transformations

- Origin maps to origin (unlike a translation, which moves it)

- Straight lines map to straight lines

- Parallel lines remain parallel

- Ratios are preserved

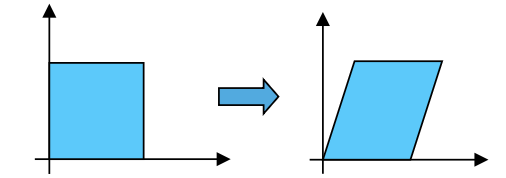

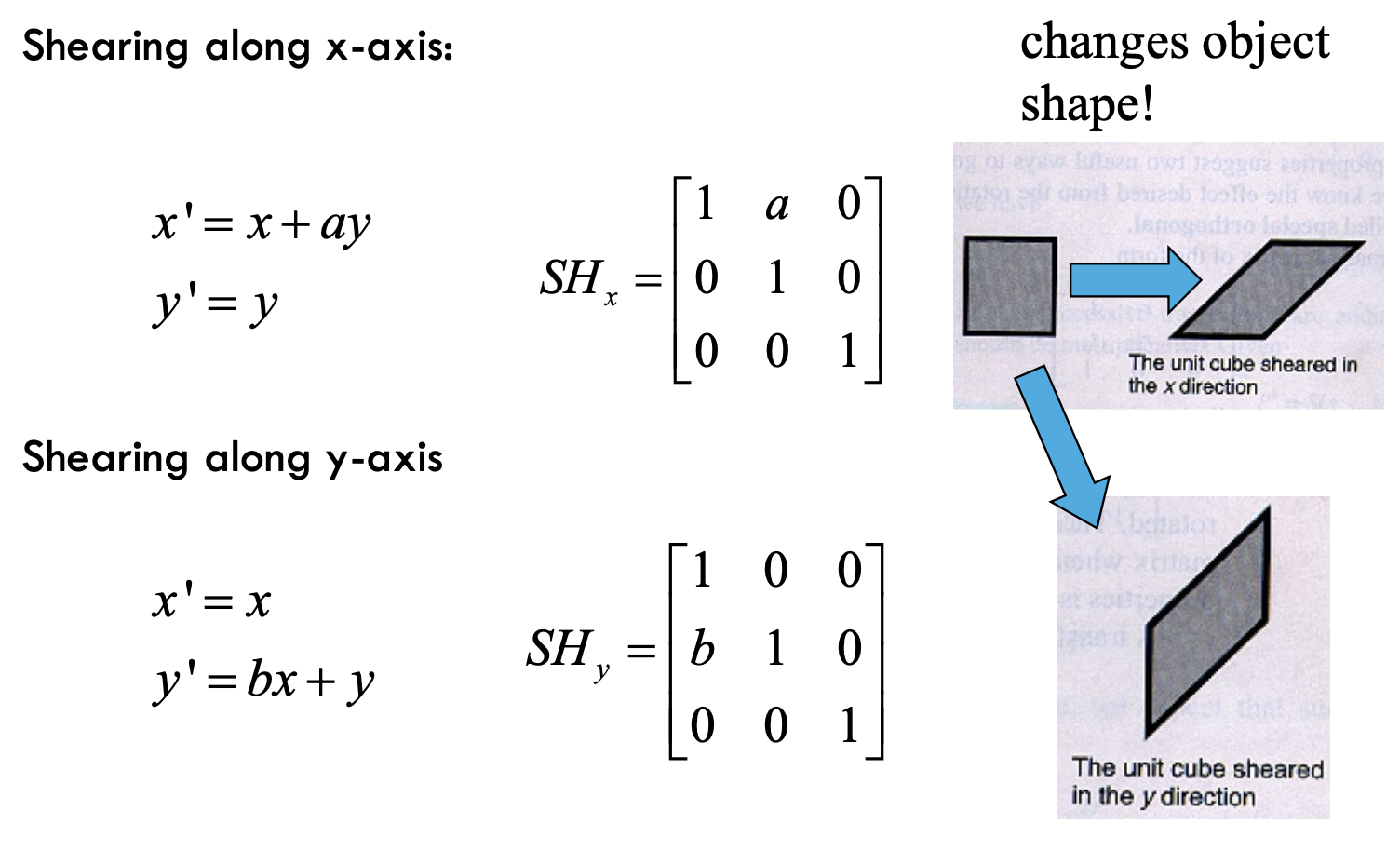

Shearing

- changes object shape

Homogeneous Coordinates

- Add an extra dimension to the coordinates: $(x, y) \to (x, y, 1)$, which allows perspective projections and other projective transformations to be treated as linear transformations that can be represented by matrices.

- Used to simplify and combine transformations like translation, rotation, and scaling into single matrix multiplications

Affine Transformations

- any transformation whose last row is $[0 \; 0 \; 1]$ we call an affine transformation

- Affine transformations are combinations of

- Linear Transformations

- Translations

Properties of affine transformations

- Origin does not necessarily map to origin

- Lines map to lines

- Parallel lines remain parallel

- Ratios are preserved
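A minimal sketch of how homogeneous coordinates turn "rotate, then translate" into one matrix product. The helper names and the example numbers are illustrative.

```python
import numpy as np

def translation(tx, ty):
    return np.array([[1.0, 0.0, tx],
                     [0.0, 1.0, ty],
                     [0.0, 0.0, 1.0]])

def rotation(theta):
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0],
                     [s, c, 0.0],
                     [0.0, 0.0, 1.0]])

def apply(T, points):
    """Apply a 3x3 homogeneous transform to an (N, 2) array of points."""
    homog = np.hstack([points, np.ones((len(points), 1))])   # (x, y) -> (x, y, 1)
    mapped = homog @ T.T
    return mapped[:, :2] / mapped[:, 2:3]                     # back to (x, y)

# Rotate by 90 degrees first, then translate by (2, 1)
T = translation(2.0, 1.0) @ rotation(np.pi / 2)
moved = apply(T, np.array([[1.0, 0.0],
                           [0.0, 0.0]]))
```

The origin ends up at (2, 1), which illustrates that an affine transformation does not necessarily map the origin to the origin.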

Projective Transformations (Warping)

Properties of projective transformations

- Origin does not necessarily map to origin

- Lines map to lines

- Parallel lines do not necessarily remain parallel

- Ratios are not preserved

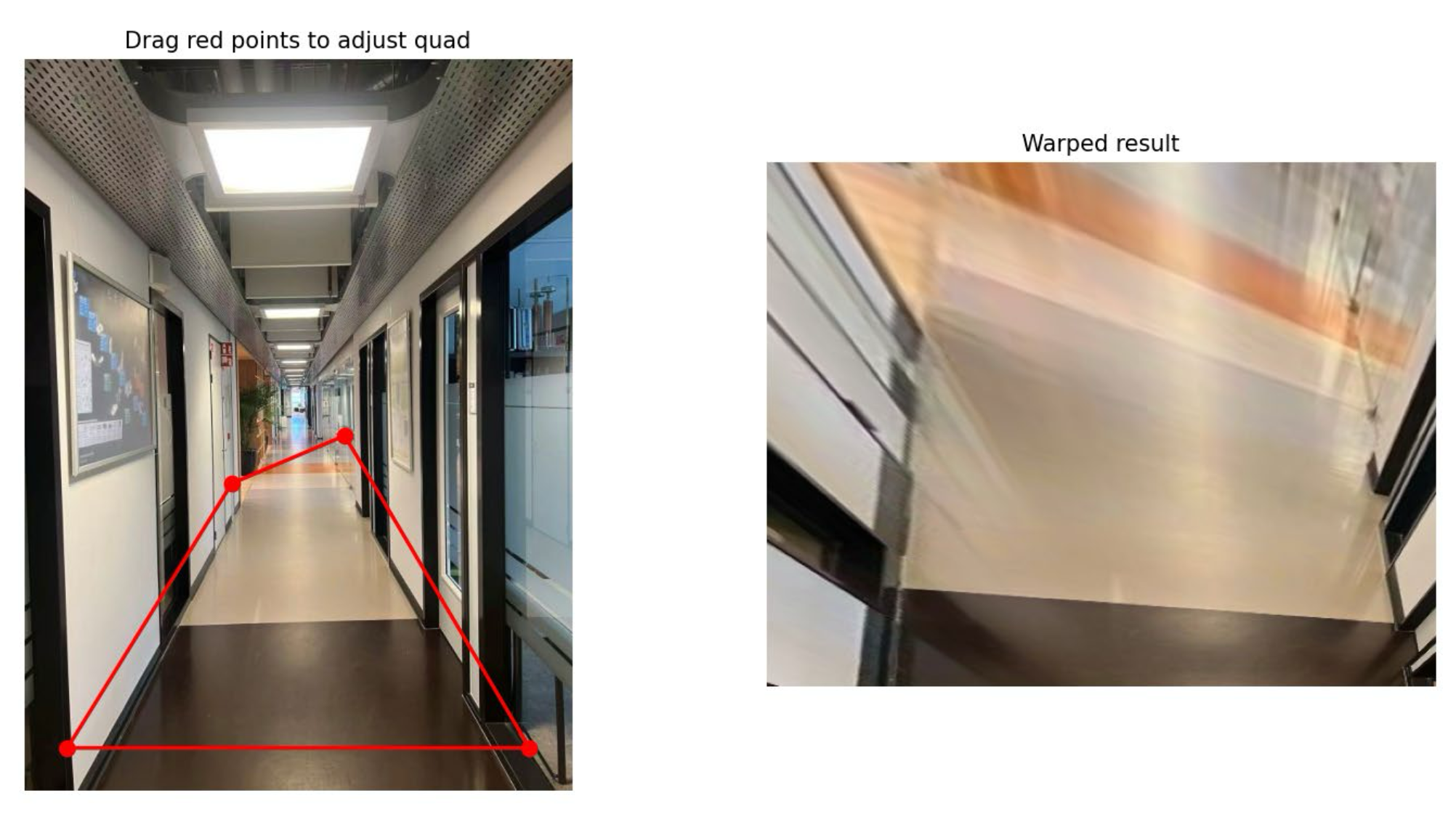

Mapping

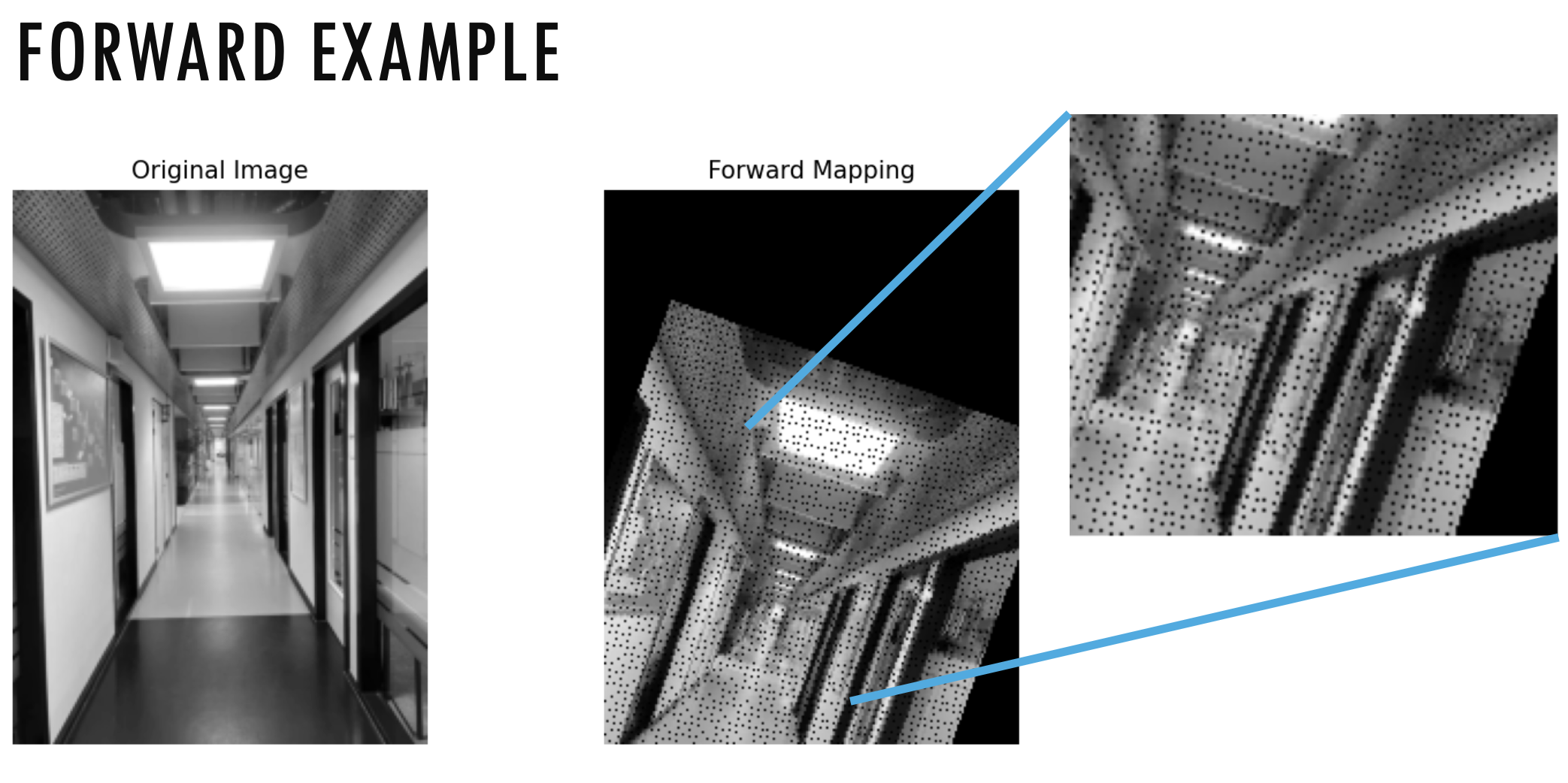

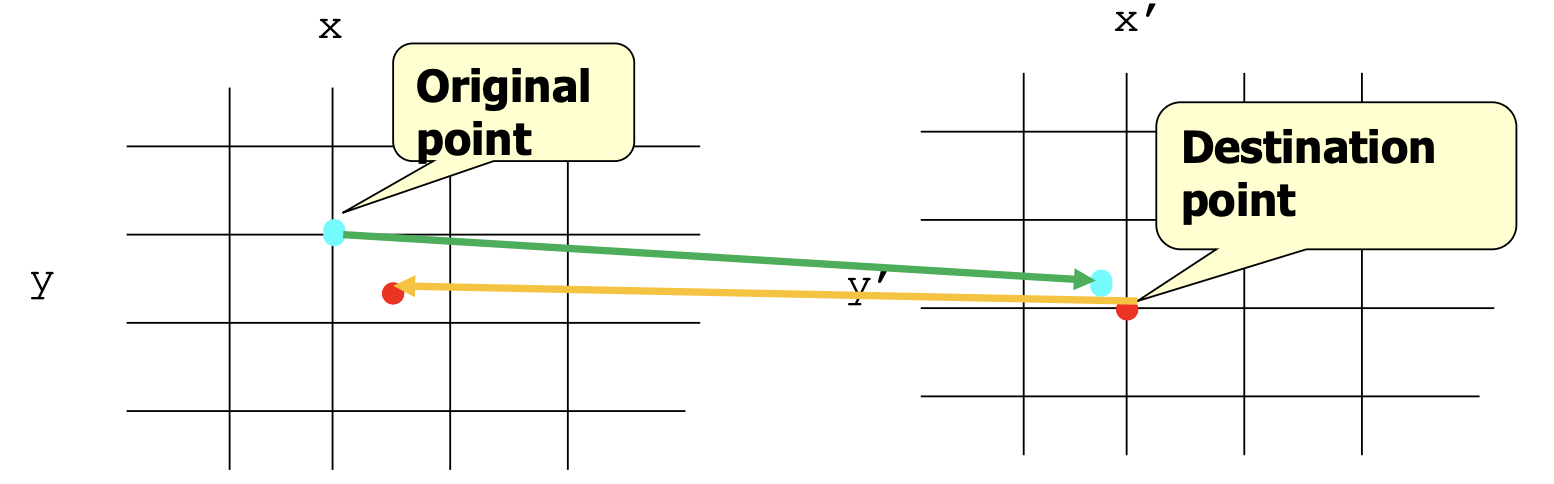

Forward Mapping

- From source image → destination image

- Not every destination pixel is guaranteed to be hit by some source pixel. As a result, some pixels in the destination image remain empty, creating gaps or holes.

- Source and destination images may not be the same size

- Output locations may not be integer values

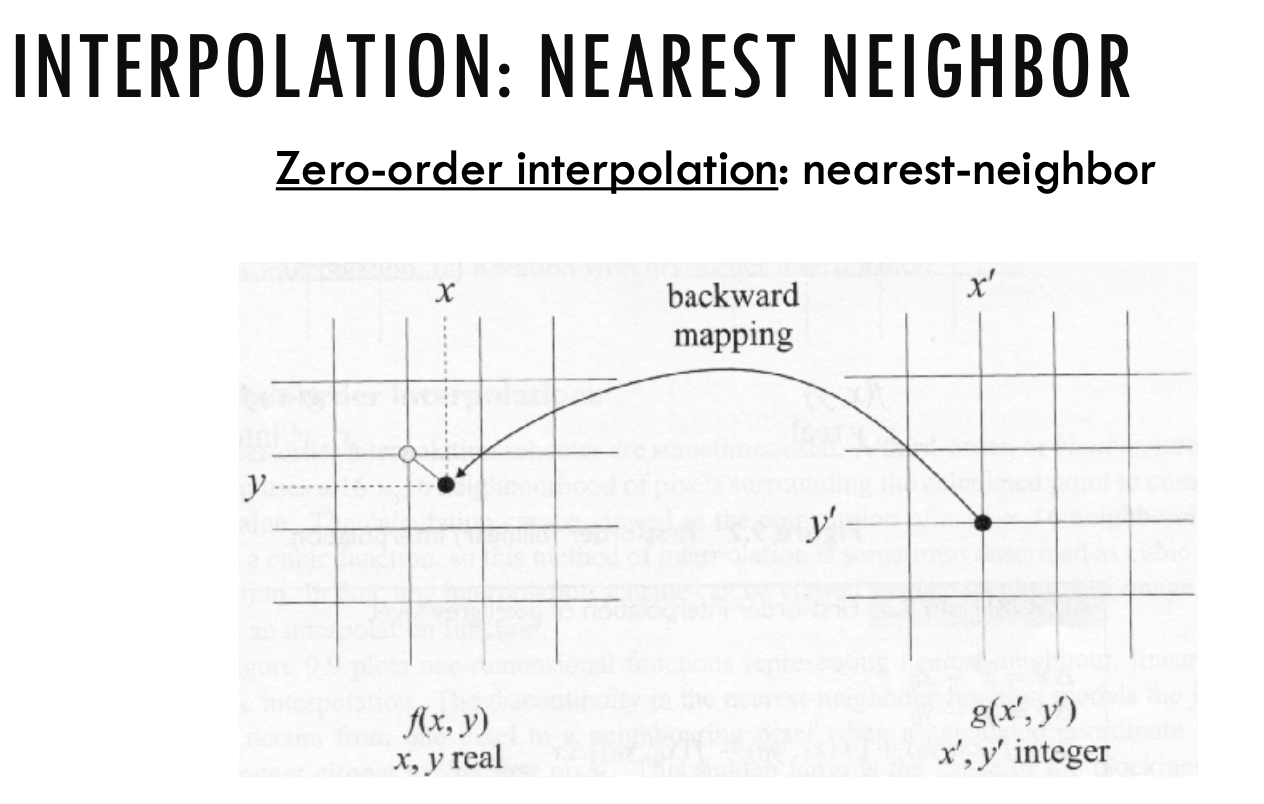

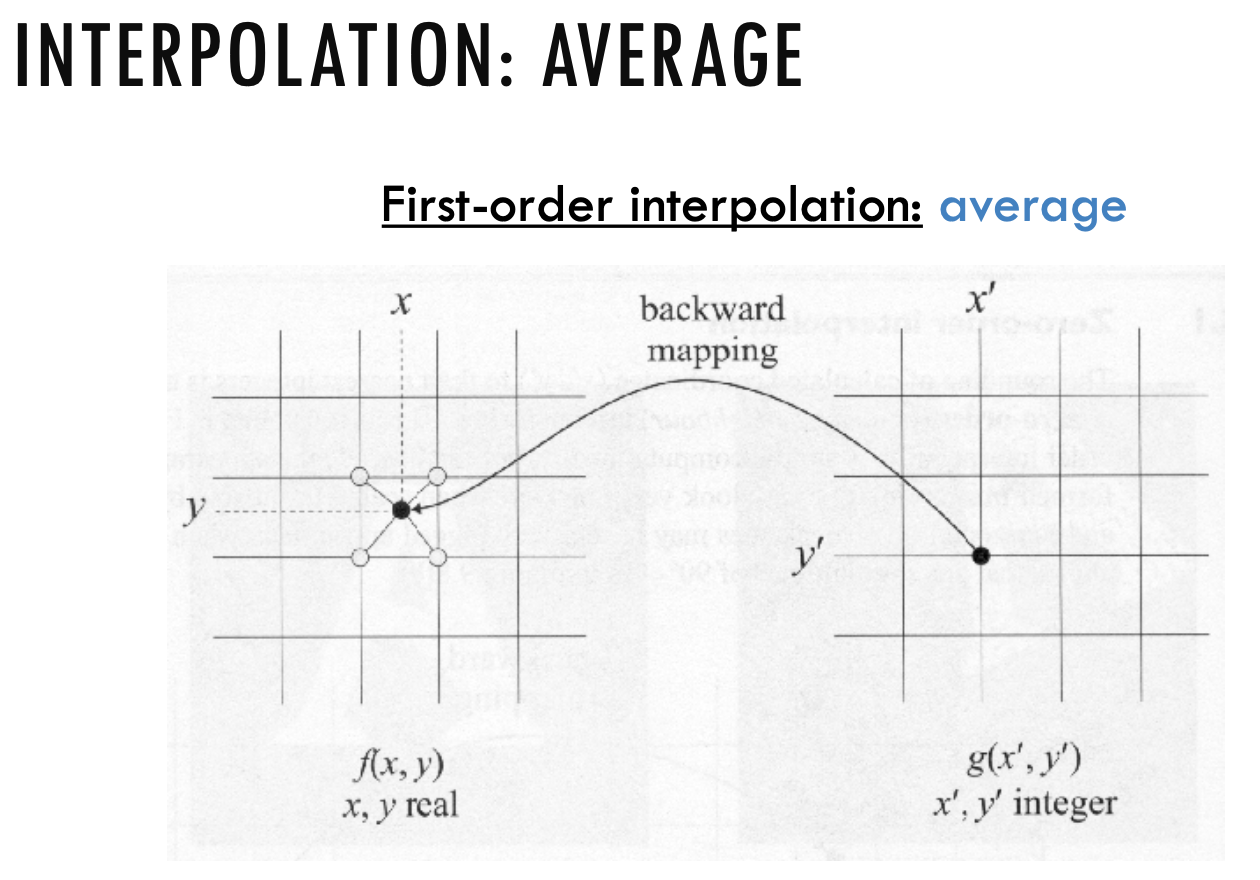

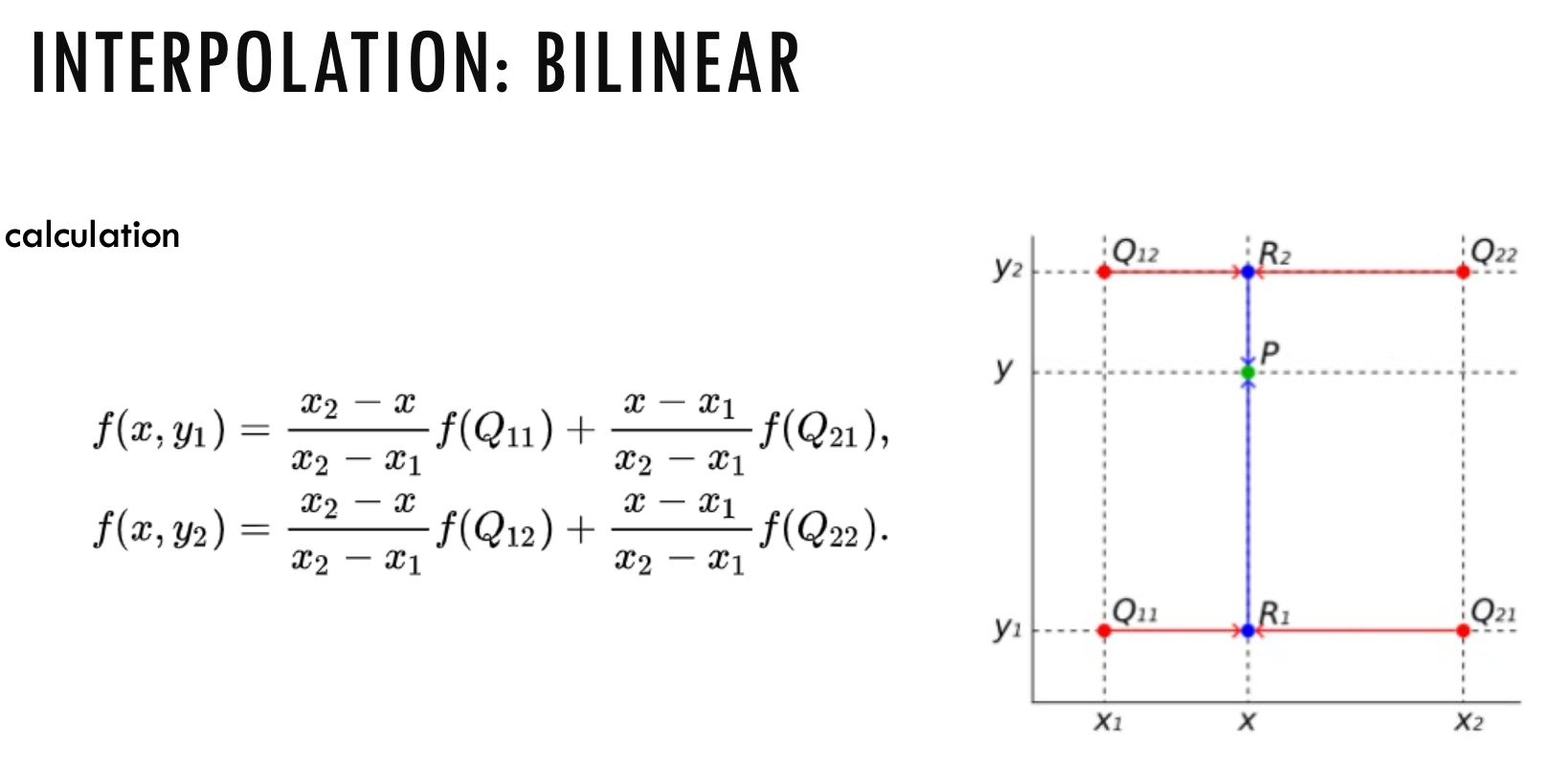

Backward Mapping

- From destination image → source image

- No gaps (through inverse mapping), but the computed source locations are generally not integers. To assign intensity values to these locations, we need to use some form of intensity interpolation
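A sketch of backward mapping with bilinear interpolation, chosen here as one possible interpolation method. The transform `T` maps source to destination in homogeneous coordinates; function names and the 2x upscaling example are assumptions.

```python
import numpy as np

def bilinear(image, x, y):
    """Interpolate the image at real-valued (x, y); x is the column, y the row."""
    x0, y0 = int(np.floor(x)), int(np.floor(y))
    x1 = min(x0 + 1, image.shape[1] - 1)
    y1 = min(y0 + 1, image.shape[0] - 1)
    ax, ay = x - x0, y - y0
    top = (1 - ax) * image[y0, x0] + ax * image[y0, x1]
    bottom = (1 - ax) * image[y1, x0] + ax * image[y1, x1]
    return (1 - ay) * top + ay * bottom

def warp_backward(source, T, out_shape):
    """For every destination pixel, look up the source location via T^-1."""
    T_inv = np.linalg.inv(T)
    out = np.zeros(out_shape)
    for v in range(out_shape[0]):
        for u in range(out_shape[1]):
            x, y, w = T_inv @ np.array([u, v, 1.0])
            x, y = x / w, y / w
            if 0 <= x <= source.shape[1] - 1 and 0 <= y <= source.shape[0] - 1:
                out[v, u] = bilinear(source, x, y)   # every destination pixel gets a value
    return out

source = np.array([[0.0, 10.0],
                   [20.0, 30.0]])
scale2 = np.diag([2.0, 2.0, 1.0])          # destination = 2 x source coordinates
upsampled = warp_backward(source, scale2, (3, 3))
```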

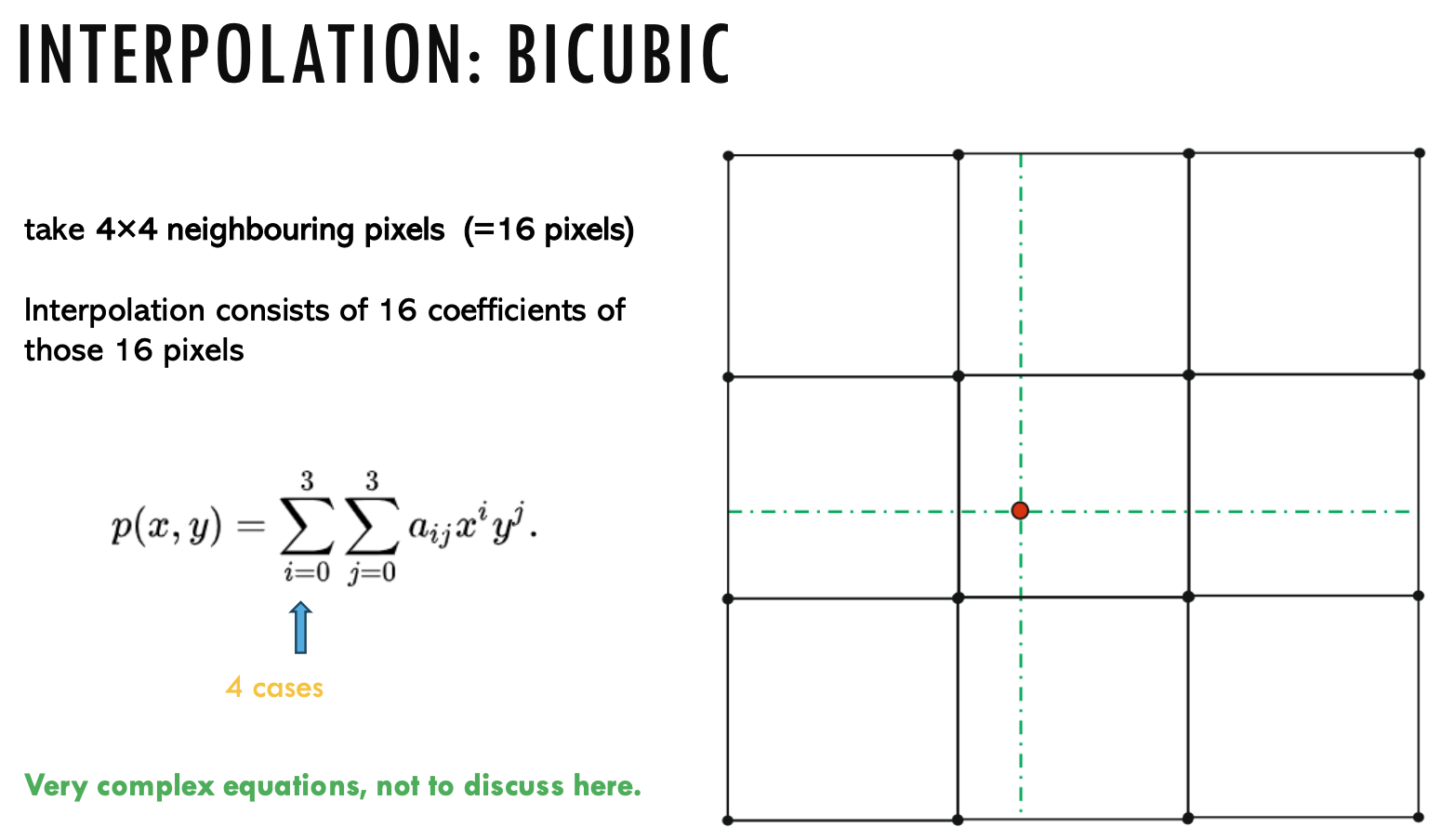

Interpolation Methods

Lecture 7: Camera Model and Calibration

Homogeneous Coordinates in 2D

- Homography: a projective transformation

- Undoing a perspective distortion in an image

- transformed lines are still lines

- lines that share a common vanishing point keep on sharing a vanishing point

- distances are not preserved

- angles are not preserved

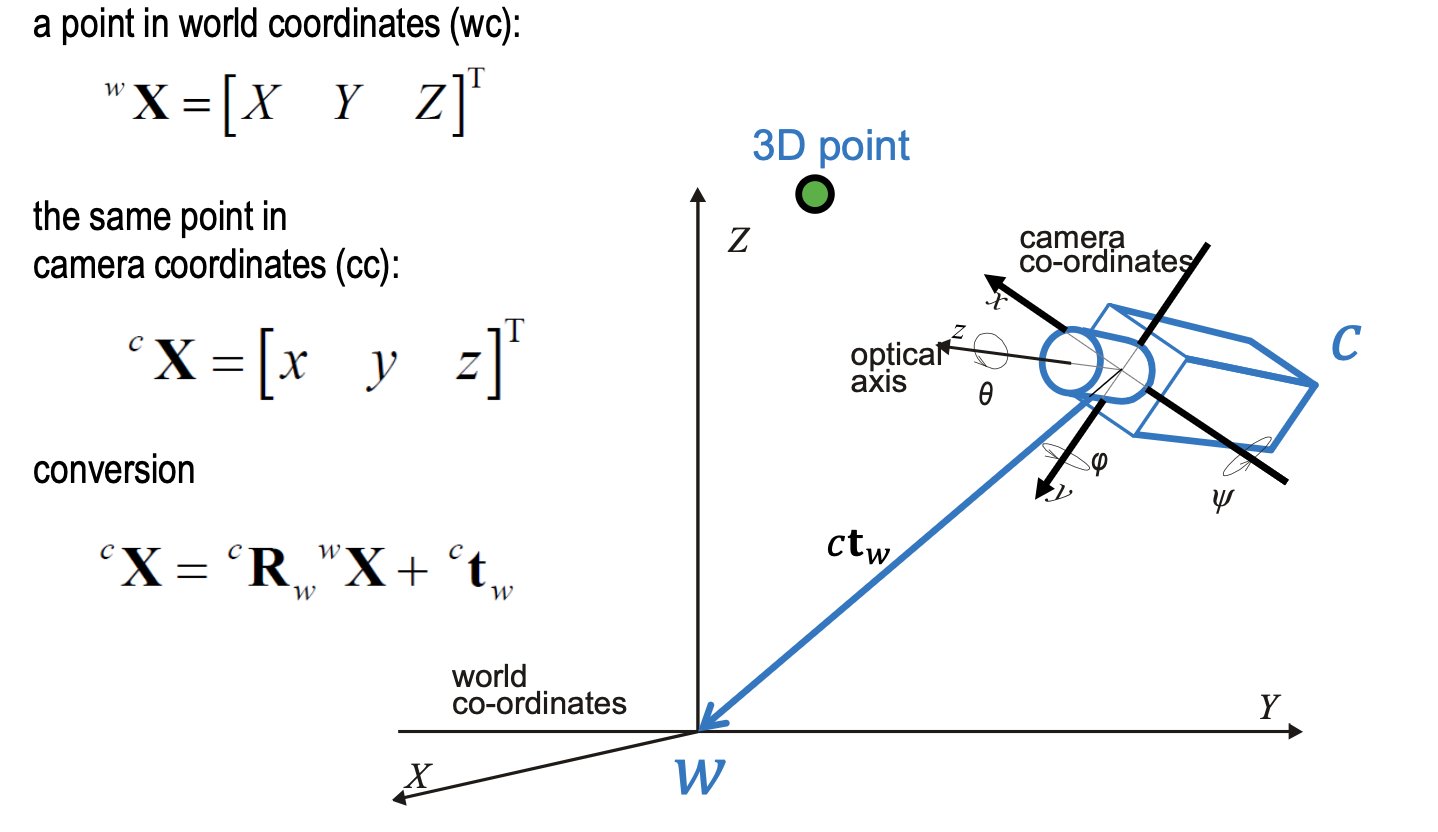

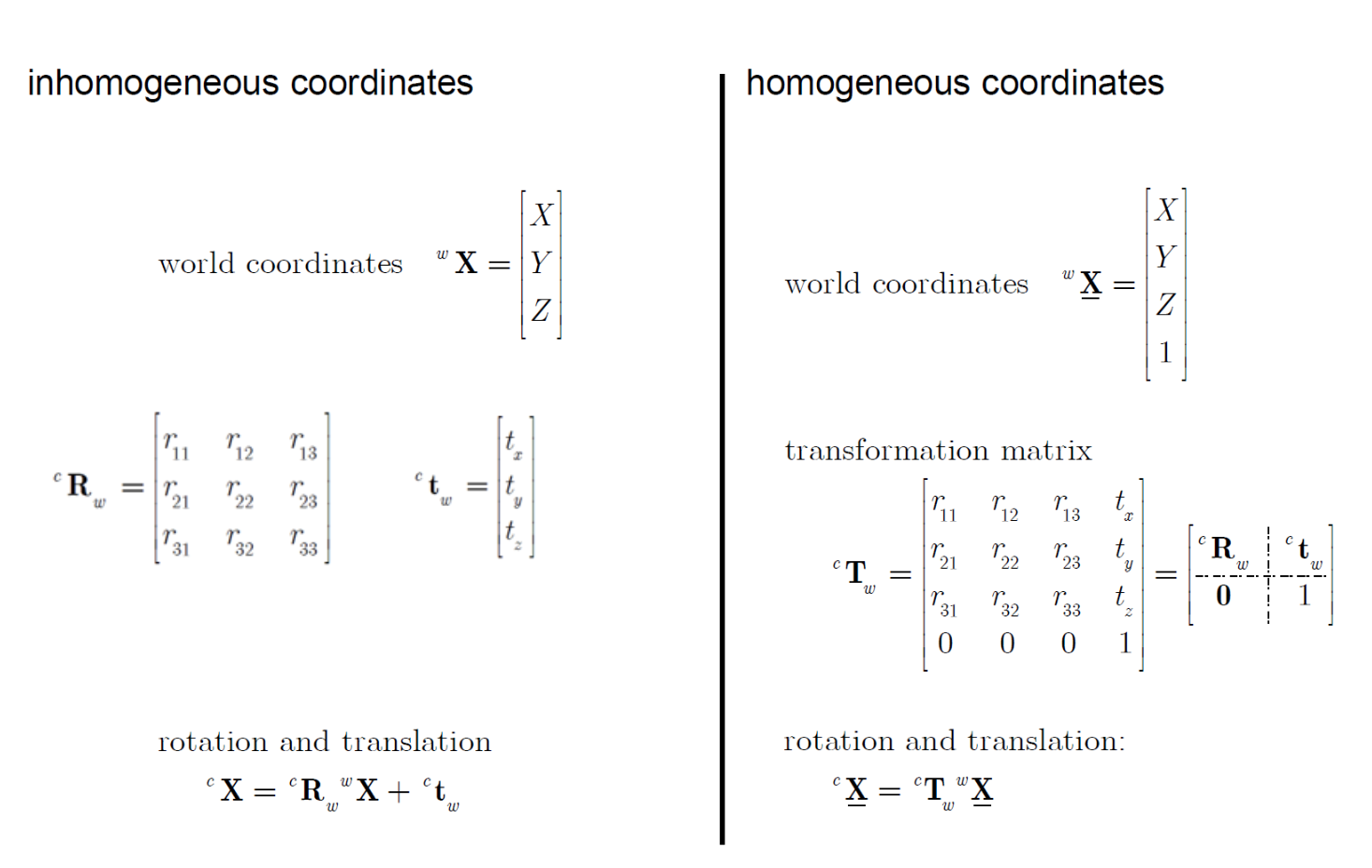

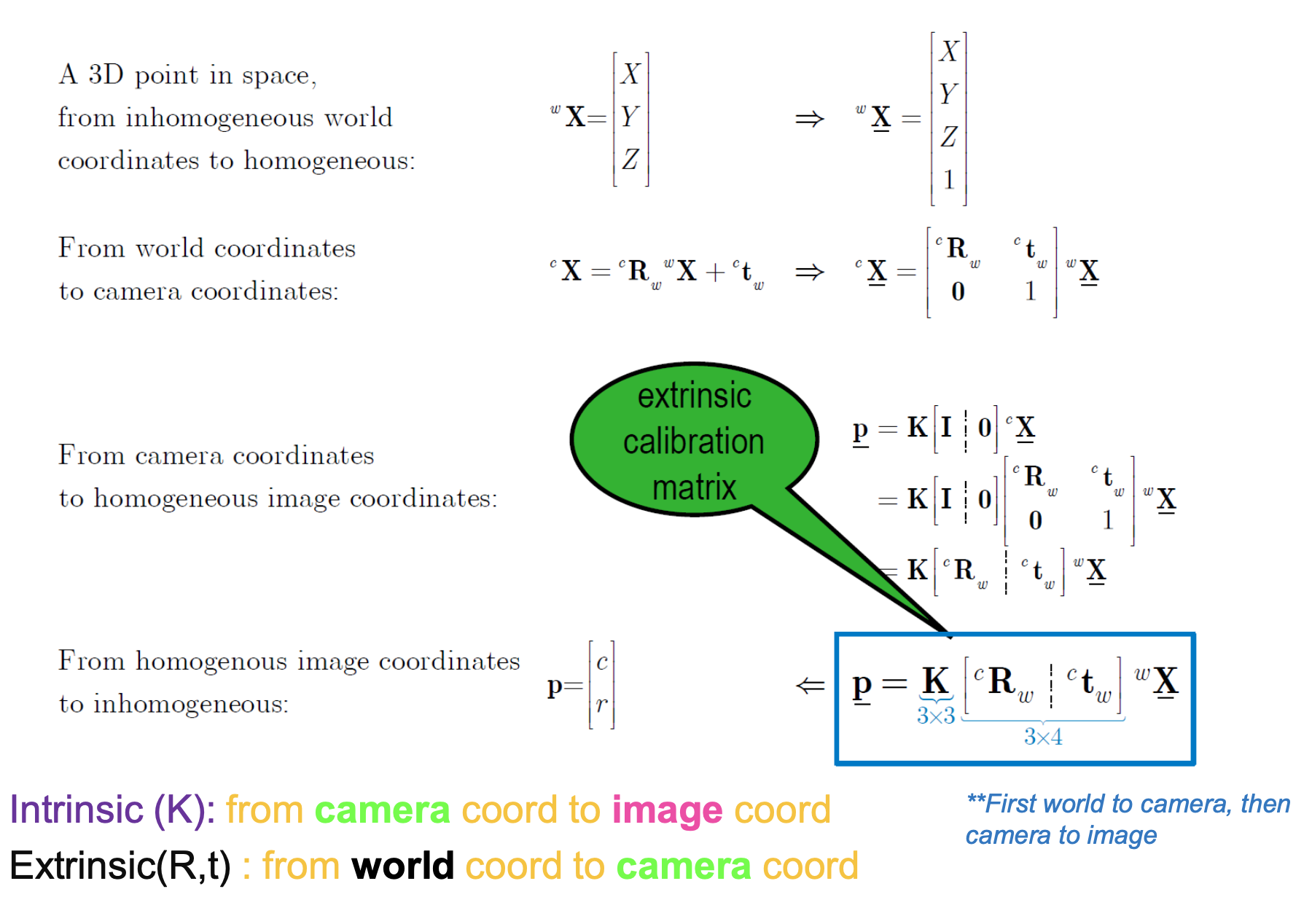

World to Camera coordinates

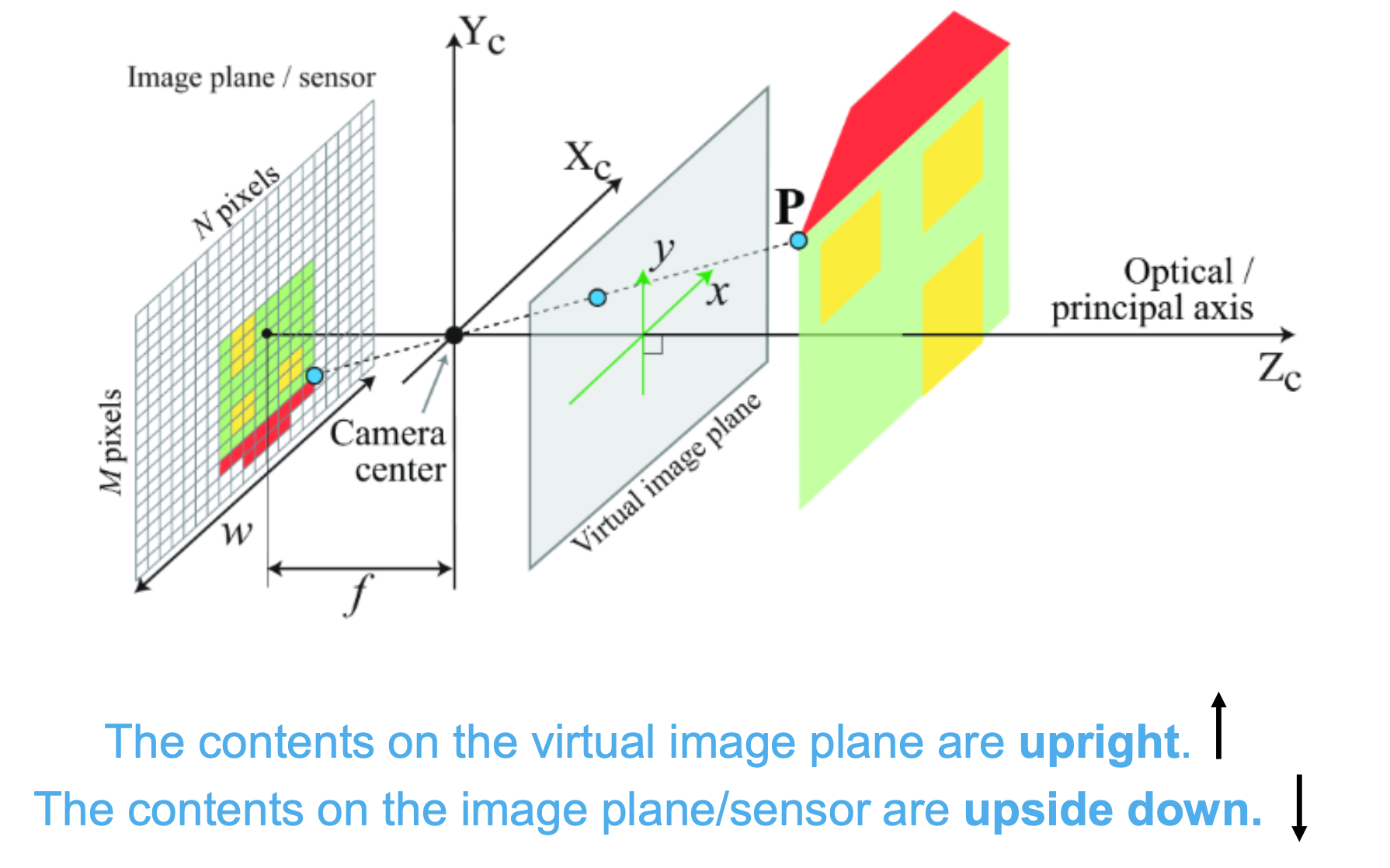

Perspective Projection of a camera

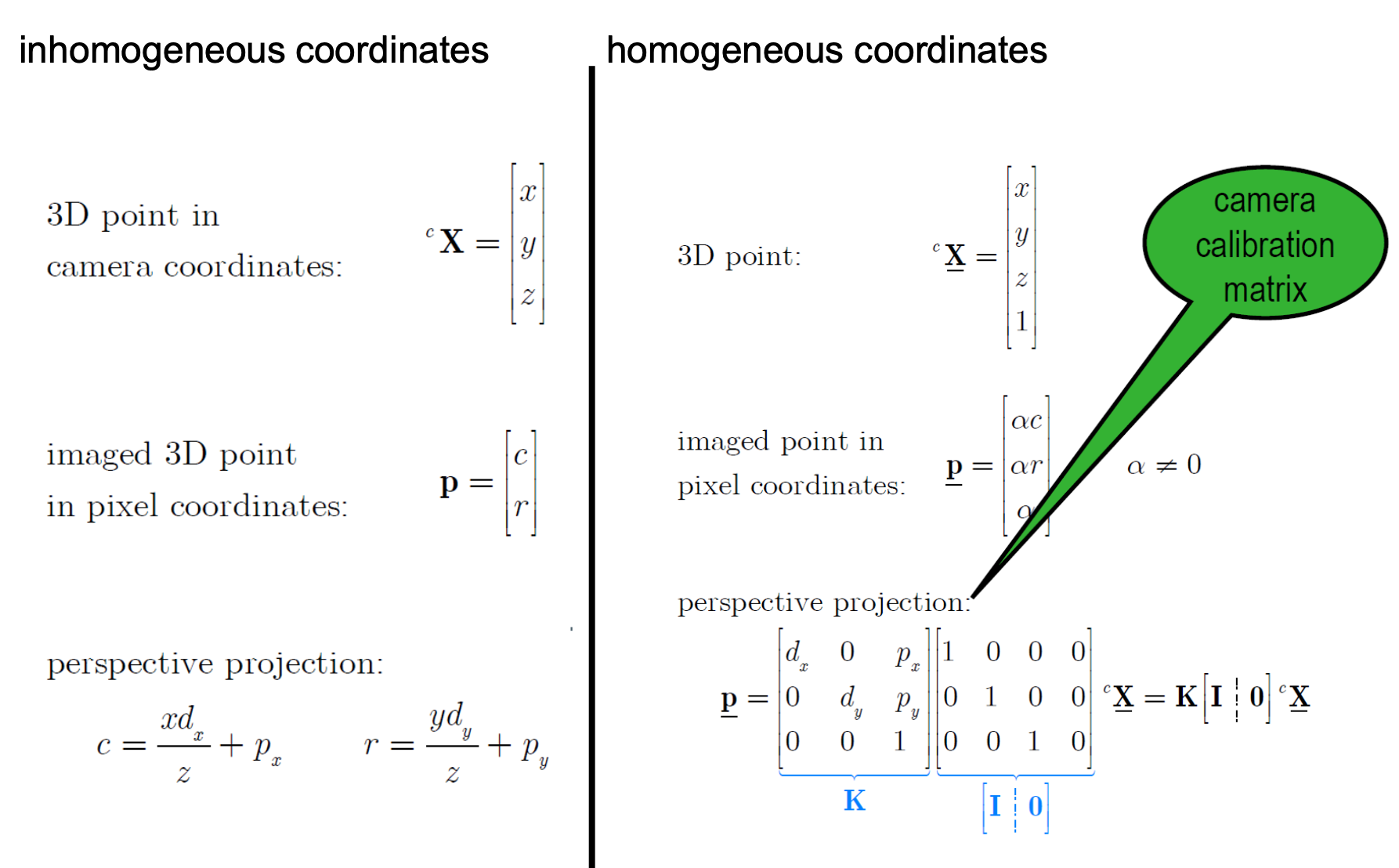

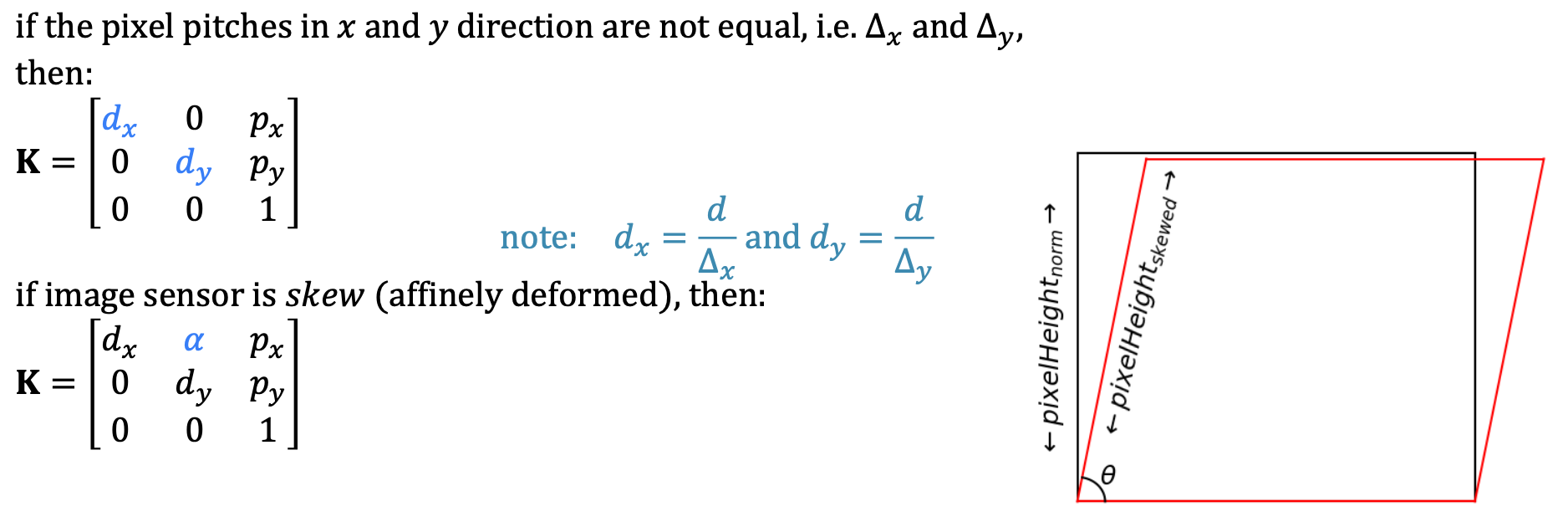

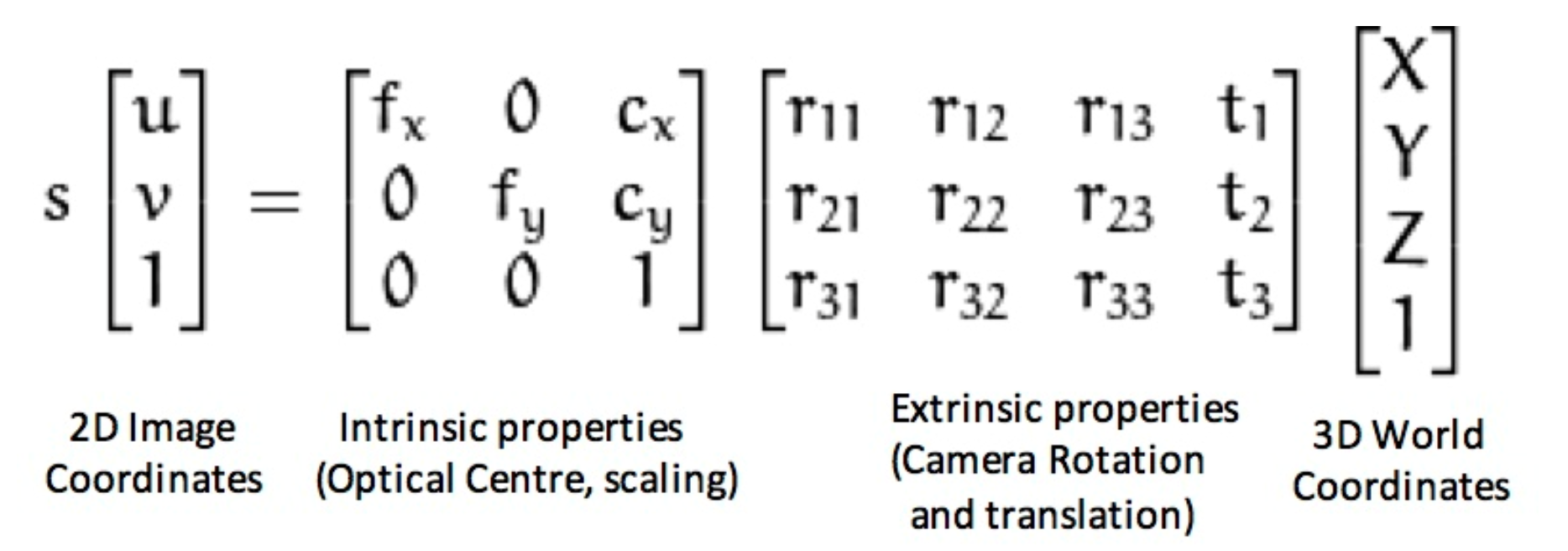

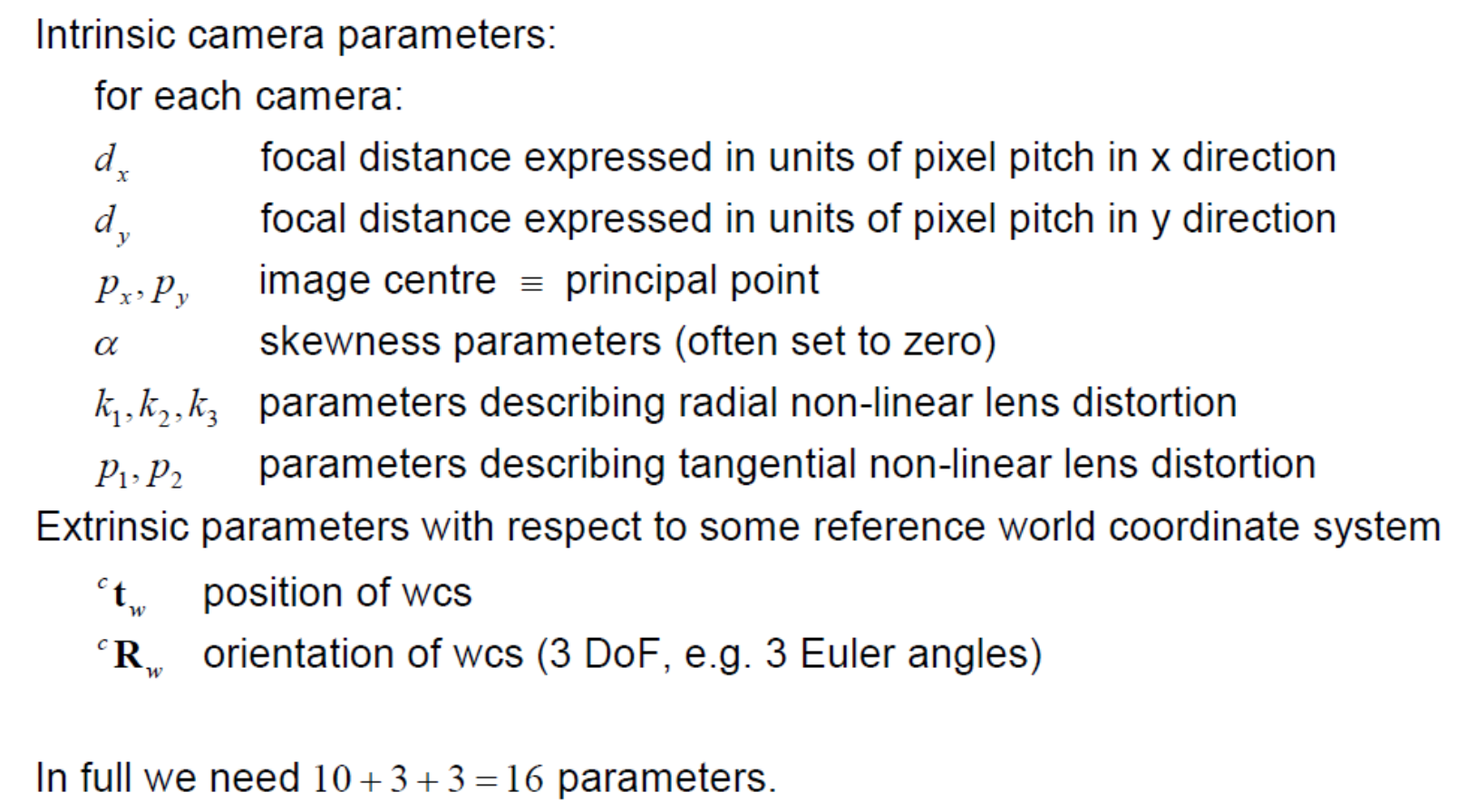

Intrinsic Camera Model

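- $(u, v)$ are pixel coordinates, we want to calculate them

- $u_0$, $v_0$ are the principal point = image center

- $\Delta x$, $\Delta y$ are the distances between pixels (they scale the focal distance to pixel units)

- $f_x$, $f_y$ are the focal lengths (in pixels)

- $c_x$, $c_y$ are the principal point

- $s$ is the skew coefficient

- These parameters map 3D camera coordinates to 2D image coordinates.

As a summary

$K = \begin{bmatrix} f_x & s & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}$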
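A sketch of the intrinsic projection from 3D camera coordinates to pixel coordinates, assuming the common 3x3 calibration matrix layout with focal lengths, skew and principal point. All numeric values are hypothetical and lens distortion is ignored.

```python
import numpy as np

def project(K, point_cam):
    """Project a 3D point in camera coordinates to pixel coordinates (u, v)."""
    uvw = K @ np.asarray(point_cam, dtype=float)
    return uvw[:2] / uvw[2]          # perspective division by the depth Z

# Hypothetical intrinsics: focal lengths in pixels, principal point, zero skew
fx, fy, cx, cy, s = 800.0, 800.0, 320.0, 240.0, 0.0
K = np.array([[fx, s, cx],
              [0.0, fy, cy],
              [0.0, 0.0, 1.0]])

on_axis = project(K, [0.0, 0.0, 2.0])    # a point on the optical axis lands on (cx, cy)
off_axis = project(K, [0.5, 0.0, 2.0])   # u = fx * X / Z + cx
```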

Distortion

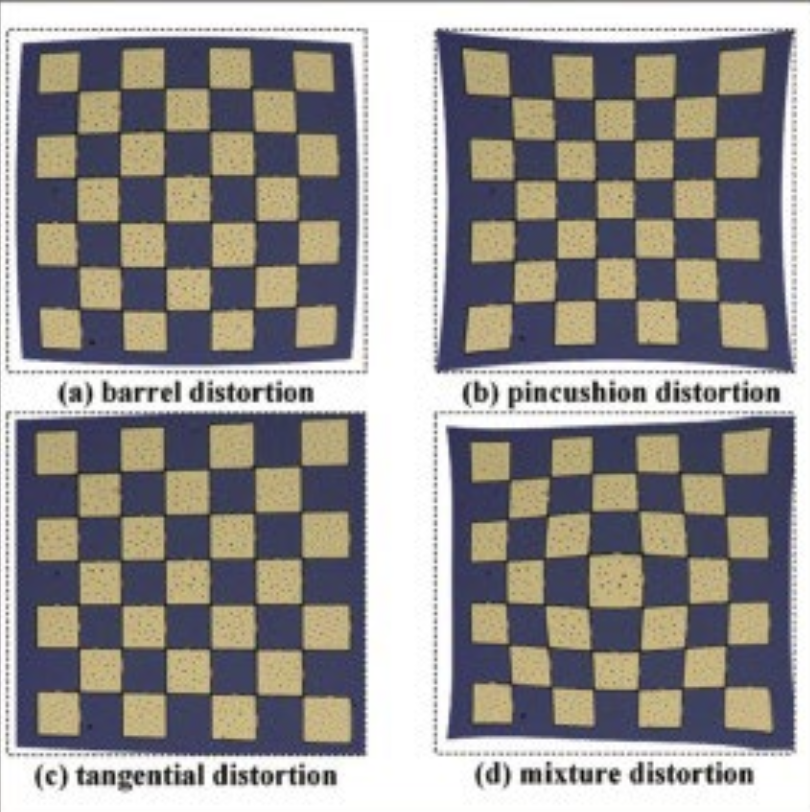

Radial Distortion

- $k_1, k_2, k_3$ parameters

- This type of distortion usually occurs due to unequal bending of light. The light ray gets displaced radially inward or outward from its ideal location before hitting the image sensor.

- The rays bend more near the edges of the lens than near the centre of the lens.

- Due to radial distortion, straight lines in the real world appear curved in the image.

- Barrel distortion (negative radial displacement), pincushion distortion (positive radial displacement)

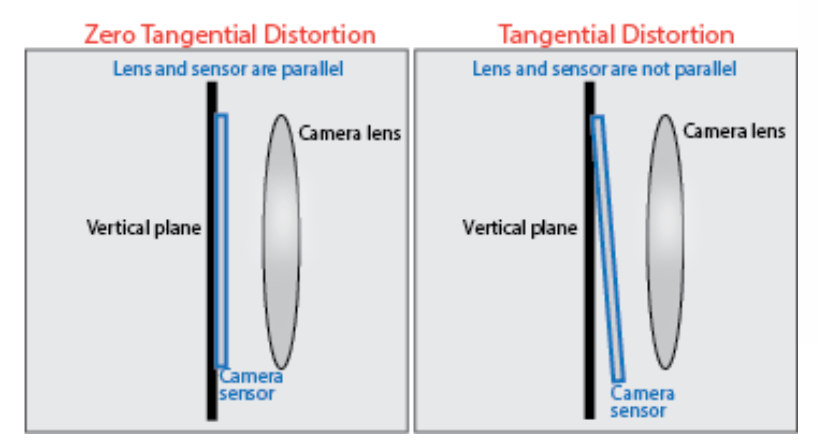

Tangential Distortion

- $p_1, p_2$ parameters

- This usually occurs when the image screen or sensor is at an angle w.r.t. the lens. Thus the image seems to be tilted and stretched.

Type of distortions

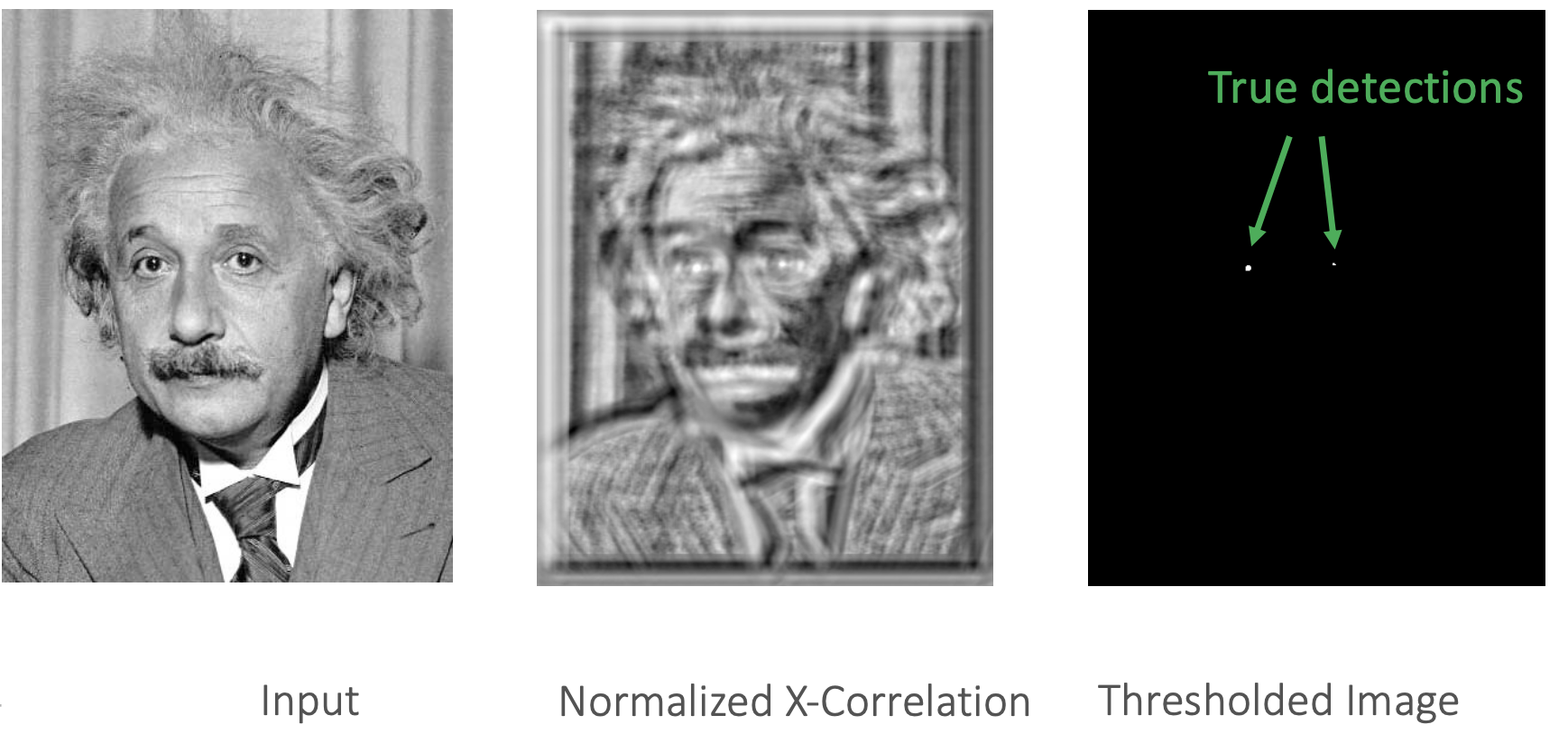

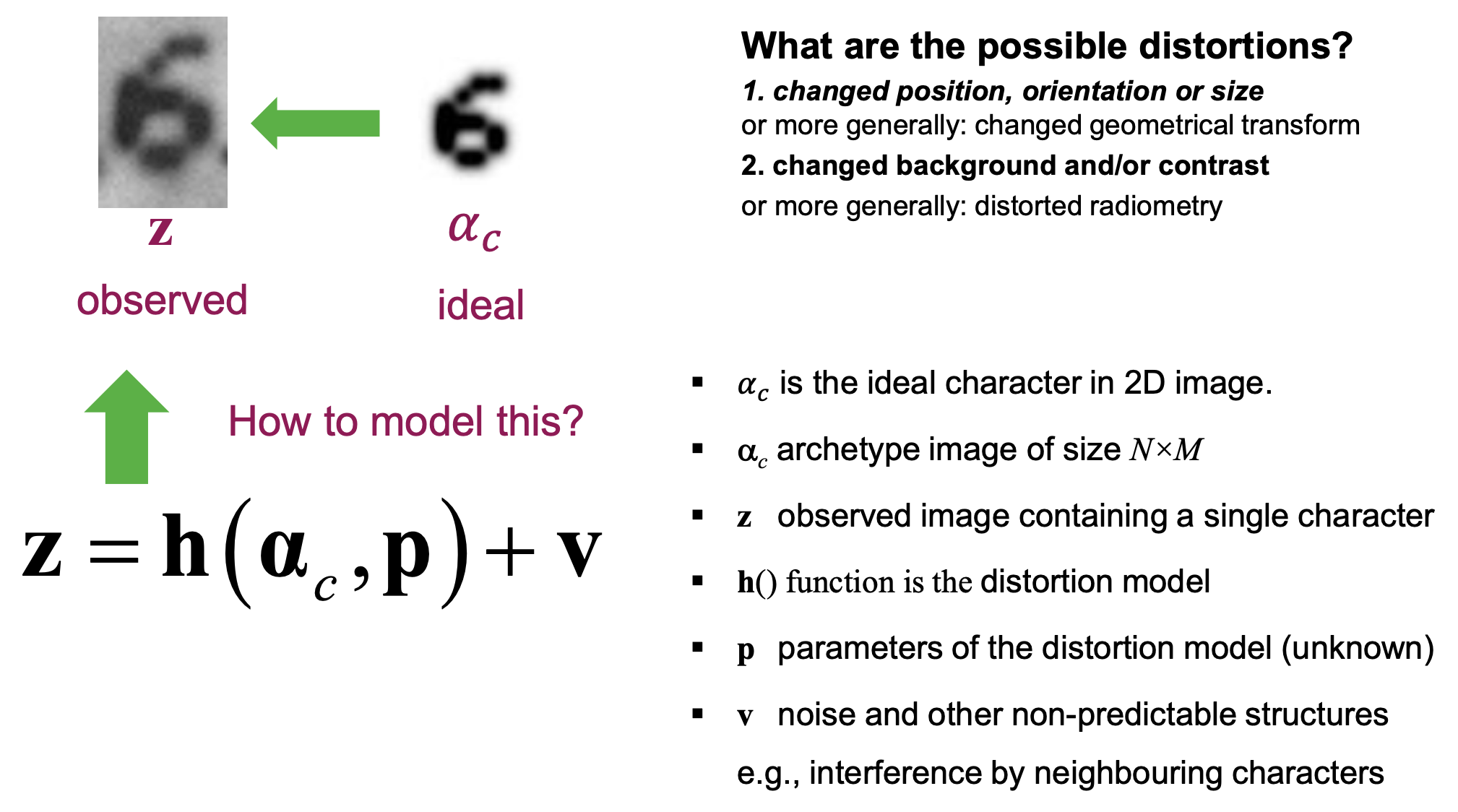

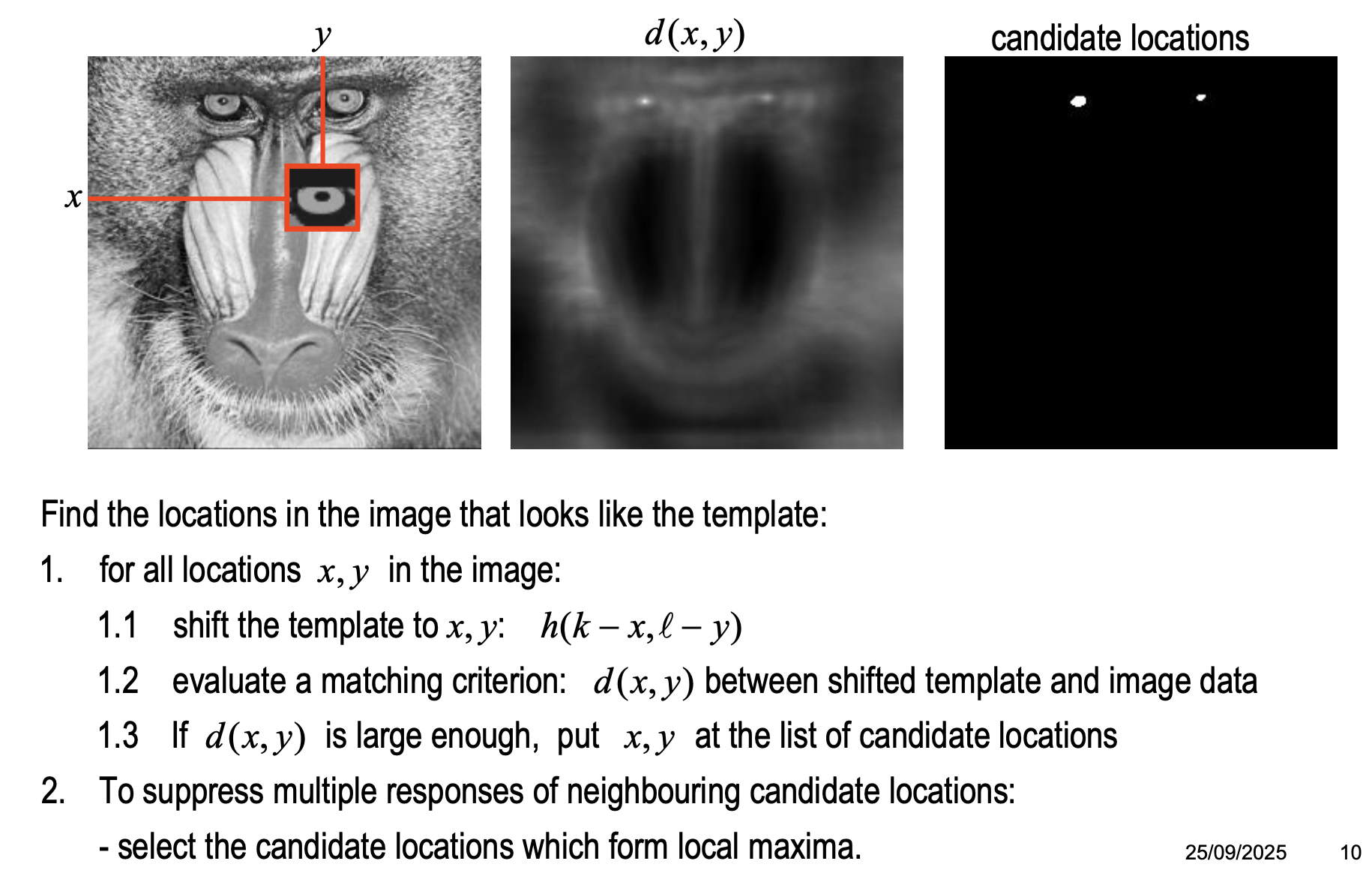

Lecture 8: Template matching & line detection

Mind these

- position

- size

- orientation

- background

- contrast

The template is an iconic archetype of the object that we are looking for.

Template Matching

Can we find locations in the image where locally the image “looks like” the archetype? — We need to define what “looks like” is.

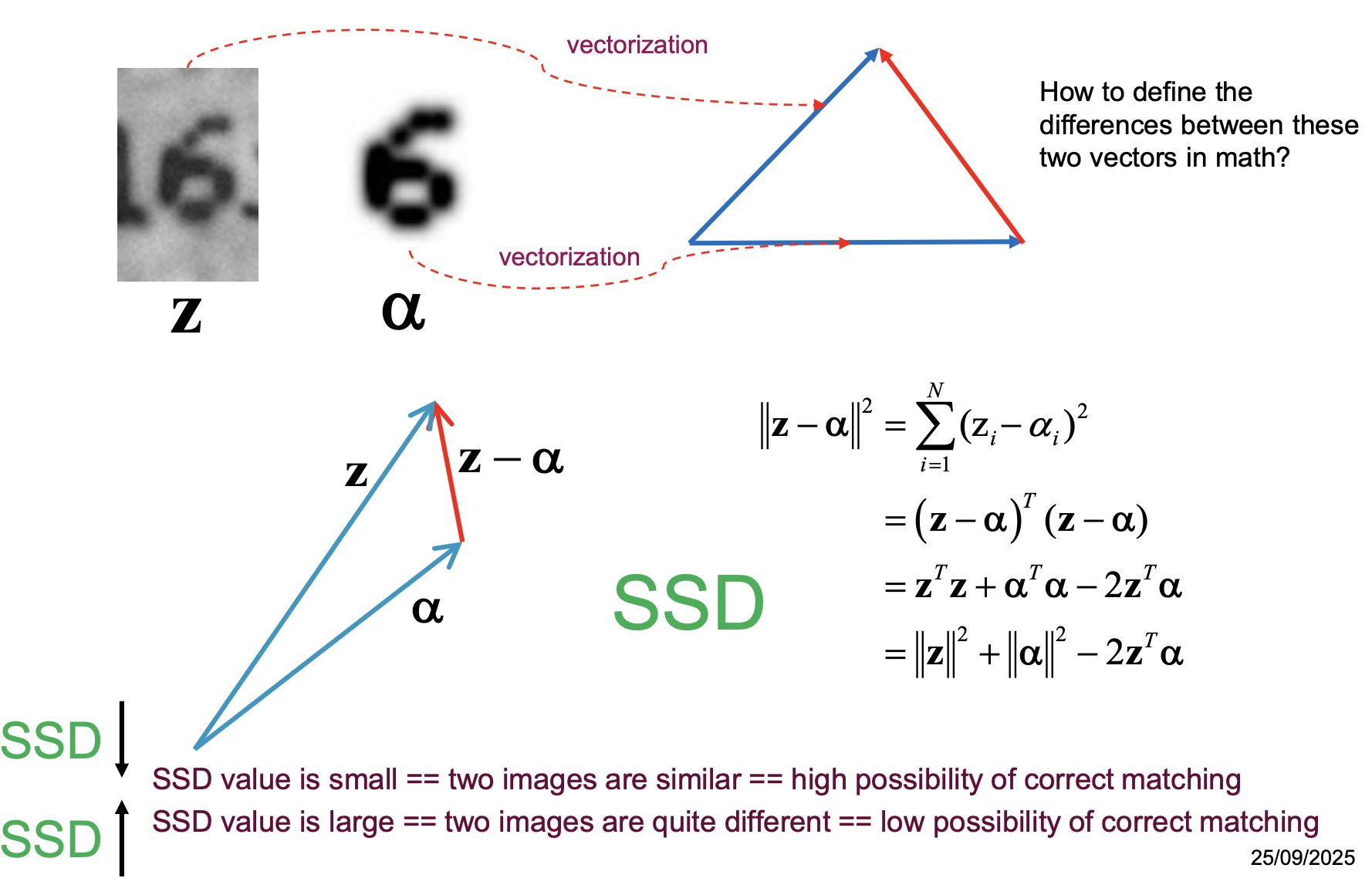

Matching Criterion: SSD (Sum of squared differences)

- type of distortion: unknown shifted position (𝑝, 𝑞)

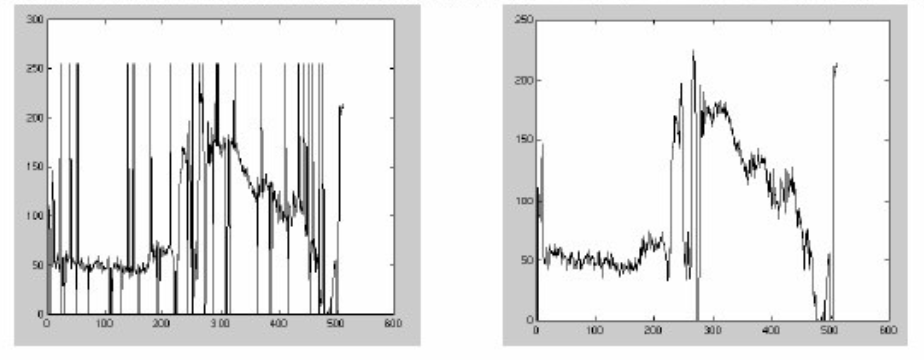

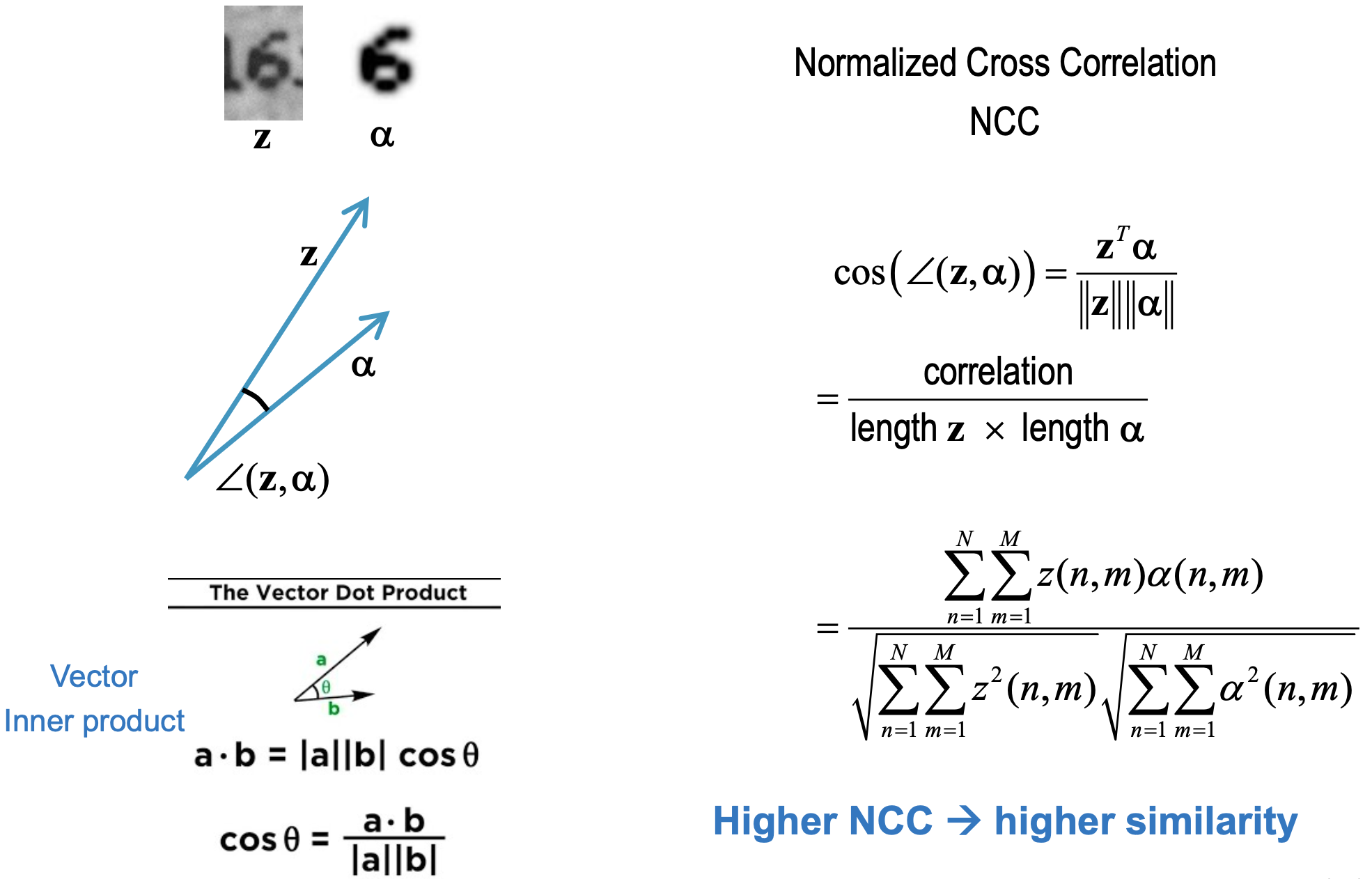

NCC (Normalized Cross Correlation)

- type of distortion: unknown amplitude: 𝐴

- Normalization solution:

  - estimate the background (bg) level

  - neutralize the bg by subtraction (normalize the background in both the observed image and the template image)
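A sketch comparing the two matching criteria on a synthetic image in which the object appears with an unknown amplitude and background offset. The NCC variant below subtracts the local mean and divides by the norms, which is one common definition and an assumption here; names and values are illustrative.

```python
import numpy as np

def match_scores(image, template):
    """Slide the template over the image; return SSD and NCC score maps."""
    th, tw = template.shape
    out_h, out_w = image.shape[0] - th + 1, image.shape[1] - tw + 1
    ssd = np.zeros((out_h, out_w))
    ncc = np.zeros((out_h, out_w))
    t0 = template - template.mean()              # remove the template background level
    for p in range(out_h):
        for q in range(out_w):
            patch = image[p:p + th, q:q + tw]
            ssd[p, q] = np.sum((patch - template) ** 2)
            p0 = patch - patch.mean()            # remove the local background level
            denom = np.sqrt(np.sum(p0 ** 2) * np.sum(t0 ** 2))
            ncc[p, q] = np.sum(p0 * t0) / denom if denom > 0 else 0.0
    return ssd, ncc

template = np.array([[0.0, 1.0, 0.0],
                     [1.0, 2.0, 1.0],
                     [0.0, 1.0, 0.0]])
image = np.zeros((8, 8))
image[2:5, 3:6] = template
observed = 3.0 * image + 5.0        # unknown amplitude A = 3 and background 5
ssd, ncc = match_scores(observed, template)
best_ncc = tuple(int(i) for i in np.unravel_index(np.argmax(ncc), ncc.shape))
best_ssd = tuple(int(i) for i in np.unravel_index(np.argmin(ssd), ssd.shape))
```

In this example NCC still peaks at the true position (2, 3), while the SSD minimum moves elsewhere because SSD is only designed for an unknown shift.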

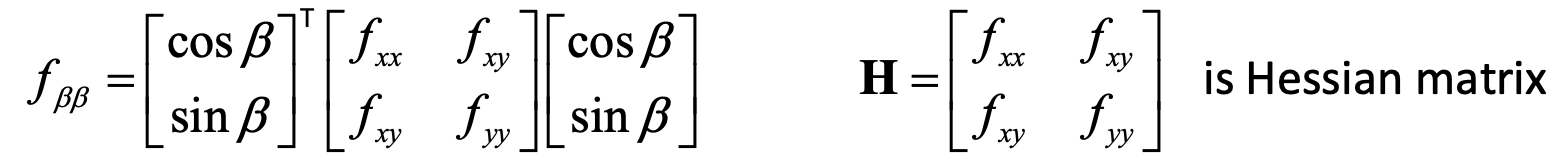

Line Detection

β = direction across line element

- Idea: try normalization w.r.t. orientation. This will be very computationally expensive (correlation with line templates)

  1. estimate locally the orientation of the line: β

  2. rotate the template over an angle of -β

  3. apply locally template matching

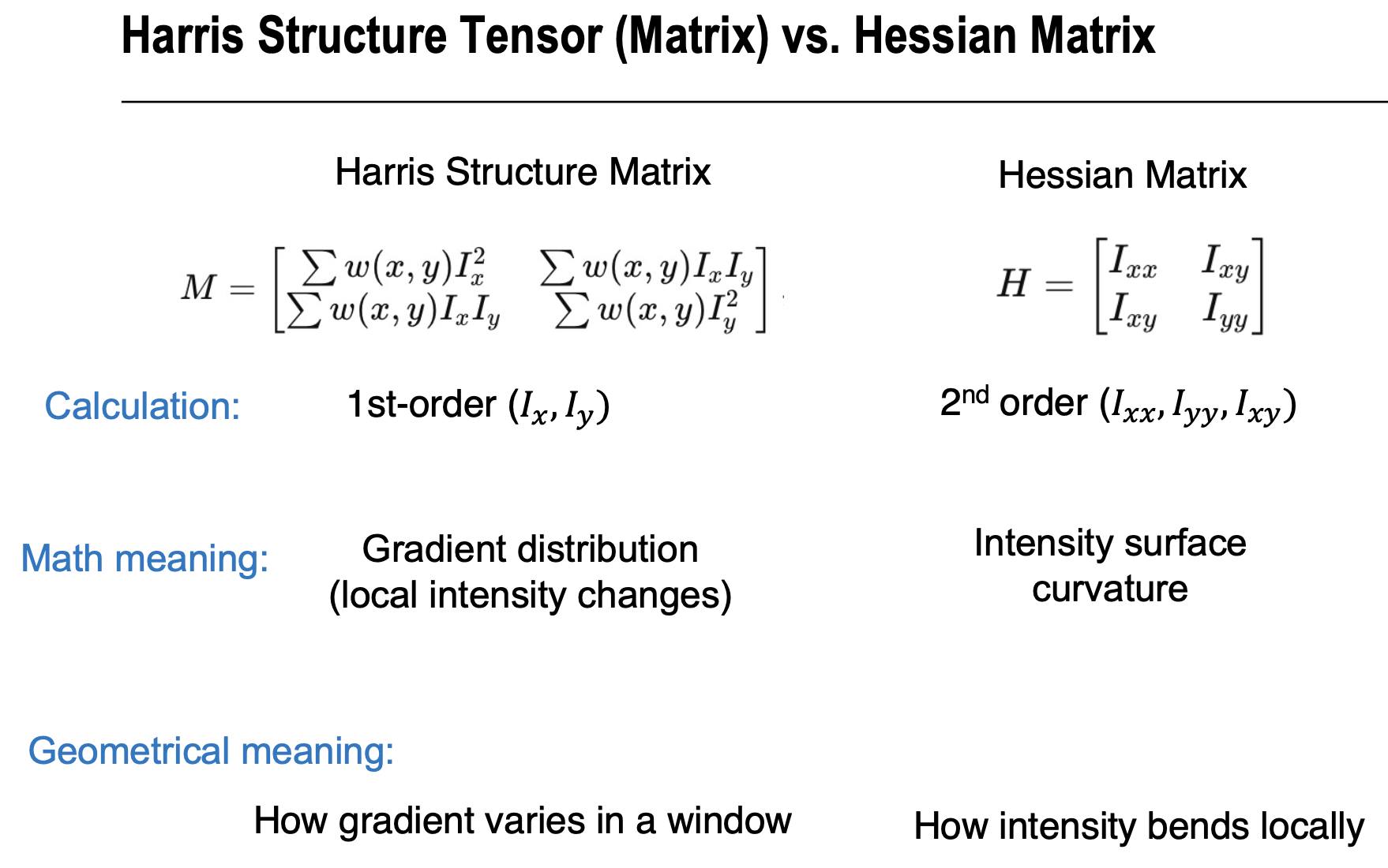

Solution: 2nd directional derivative in direction β (eigenvalues of Hessian matrix)

- β corresponds to the dominant eigenvector 𝐯 of the Hessian matrix

- The maximized 2nd directional derivative equals the dominant eigenvalue of the Hessian matrix

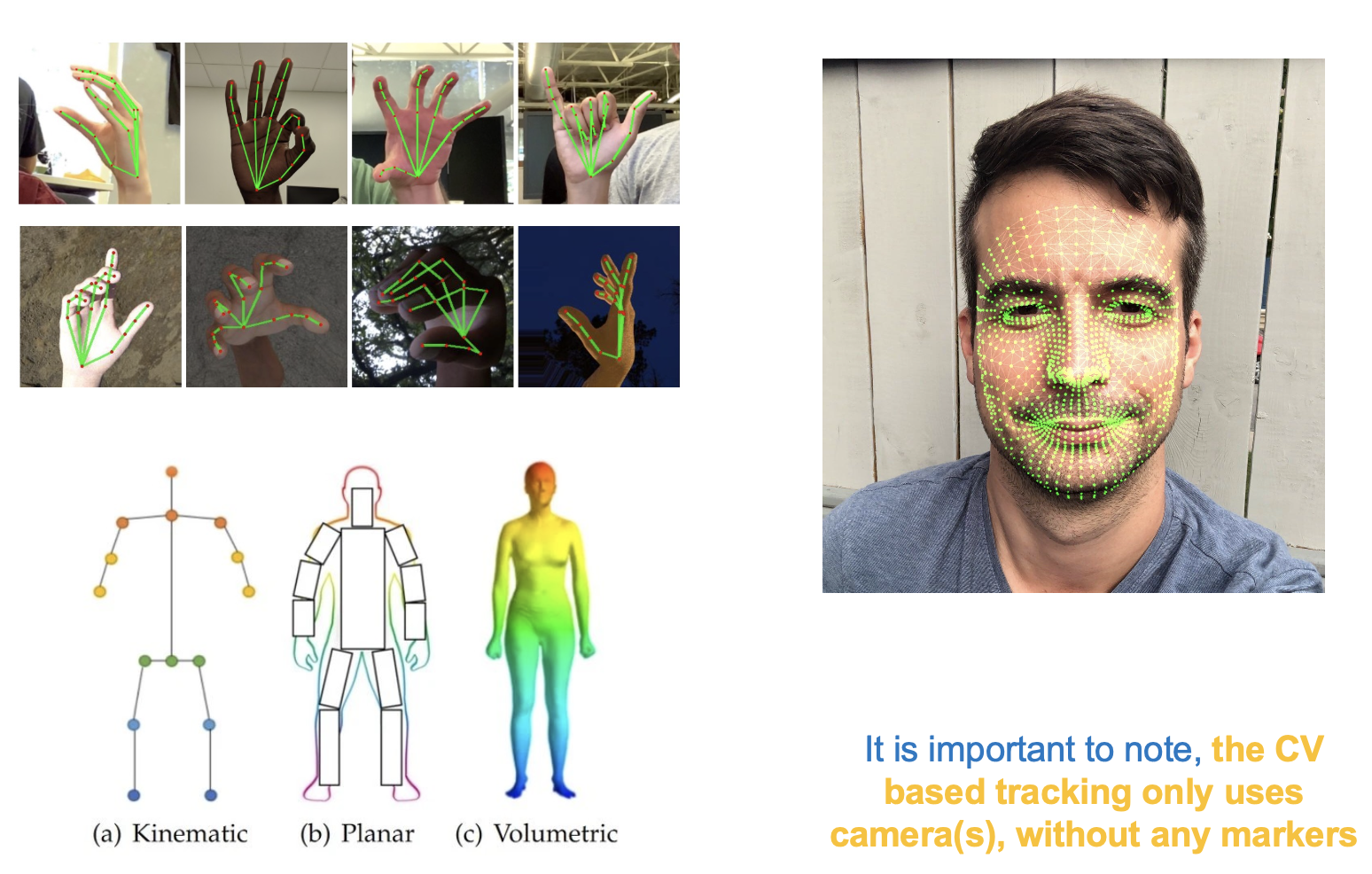

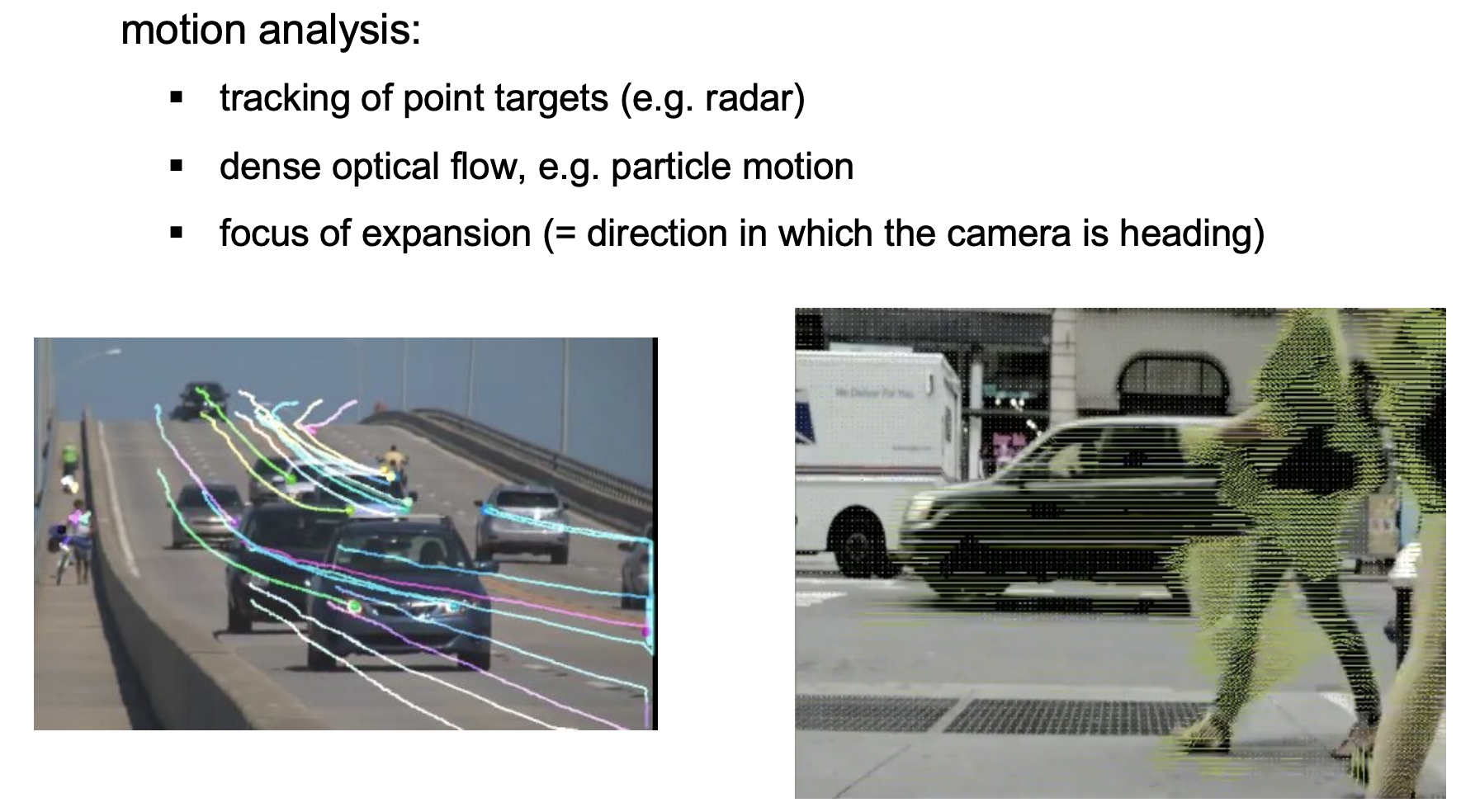

Lecture 9: Detection and tracking of interest points

Applications

- motion analysis

- range imaging

- object detection and parameter estimation

- point cloud

Harris Corner Detection

Harris’ first improvement

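- Make the function less noise-sensitive by averaging over a neighborhood

- New term with average window: $E(u, v) = \begin{bmatrix} u & v \end{bmatrix} M \begin{bmatrix} u \\ v \end{bmatrix}$, with $M = \sum_{W} \begin{bmatrix} I_x^2 & I_x I_y \\ I_x I_y & I_y^2 \end{bmatrix}$

- $E(u, v)$ defines an ellipsoid. Its contour lines are ellipses in the $(u, v)$ plane

- The shape of the ellipsoid is determined by the eigenvalues $\lambda_1$ and $\lambda_2$ of $M$

Harris’ second improvement

- A point is an interest point iff $E(u, v)$ is fast increasing for any combination of $(u, v)$. That is, the ellipsoid must be peaked.

- Therefore, both $\lambda_1$ and $\lambda_2$ must be large.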

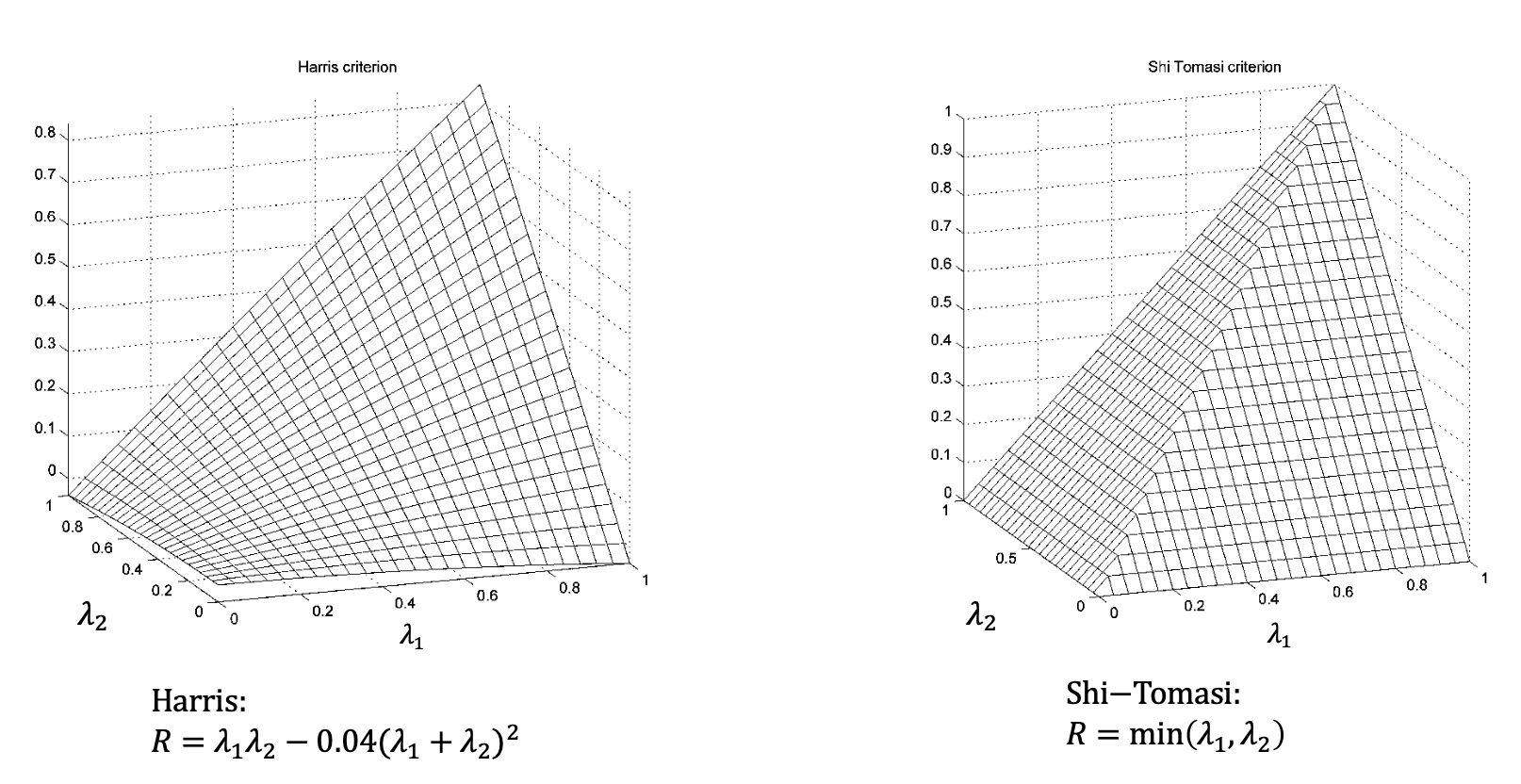

Harris criterion for an interest point

$R = \det(M) - k \, \operatorname{trace}(M)^2 > T$

Shi-Tomasi criterion for an interest point (1994)

$\min(\lambda_1, \lambda_2) > T$
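A sketch of both criteria on a synthetic corner, assuming the usual structure matrix built from image gradients summed over a window. It uses a uniform window (not the Gaussian weights of the third improvement), and `k = 0.04` and the window size are conventional but arbitrary choices.

```python
import numpy as np

def harris_response(image, y, x, half=2, k=0.04):
    """Return (R, eigenvalues) of the structure matrix M at pixel (y, x)."""
    Iy, Ix = np.gradient(image.astype(float))     # derivatives along rows, columns
    win = (slice(y - half, y + half + 1), slice(x - half, x + half + 1))
    Sxx = np.sum(Ix[win] ** 2)                    # sums over the averaging window
    Syy = np.sum(Iy[win] ** 2)
    Sxy = np.sum(Ix[win] * Iy[win])
    M = np.array([[Sxx, Sxy],
                  [Sxy, Syy]])
    lam = np.linalg.eigvalsh(M)                   # eigenvalues in ascending order
    R = np.linalg.det(M) - k * np.trace(M) ** 2   # Harris measure
    return R, lam

img = np.zeros((20, 20))
img[10:, 10:] = 1.0                               # bright quadrant: corner at (10, 10)

R_corner, lam_corner = harris_response(img, 10, 10)
R_edge, lam_edge = harris_response(img, 15, 10)   # on the vertical edge
R_flat, lam_flat = harris_response(img, 4, 4)     # in the flat region
```

At the corner both eigenvalues are large and R is positive; on the edge one eigenvalue is zero and R is negative; in the flat region everything is zero. The smallest eigenvalue `lam[0]` is the Shi-Tomasi score.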

Harris’ third improvement

- Use a Gaussian weight function (less noise-sensitive):

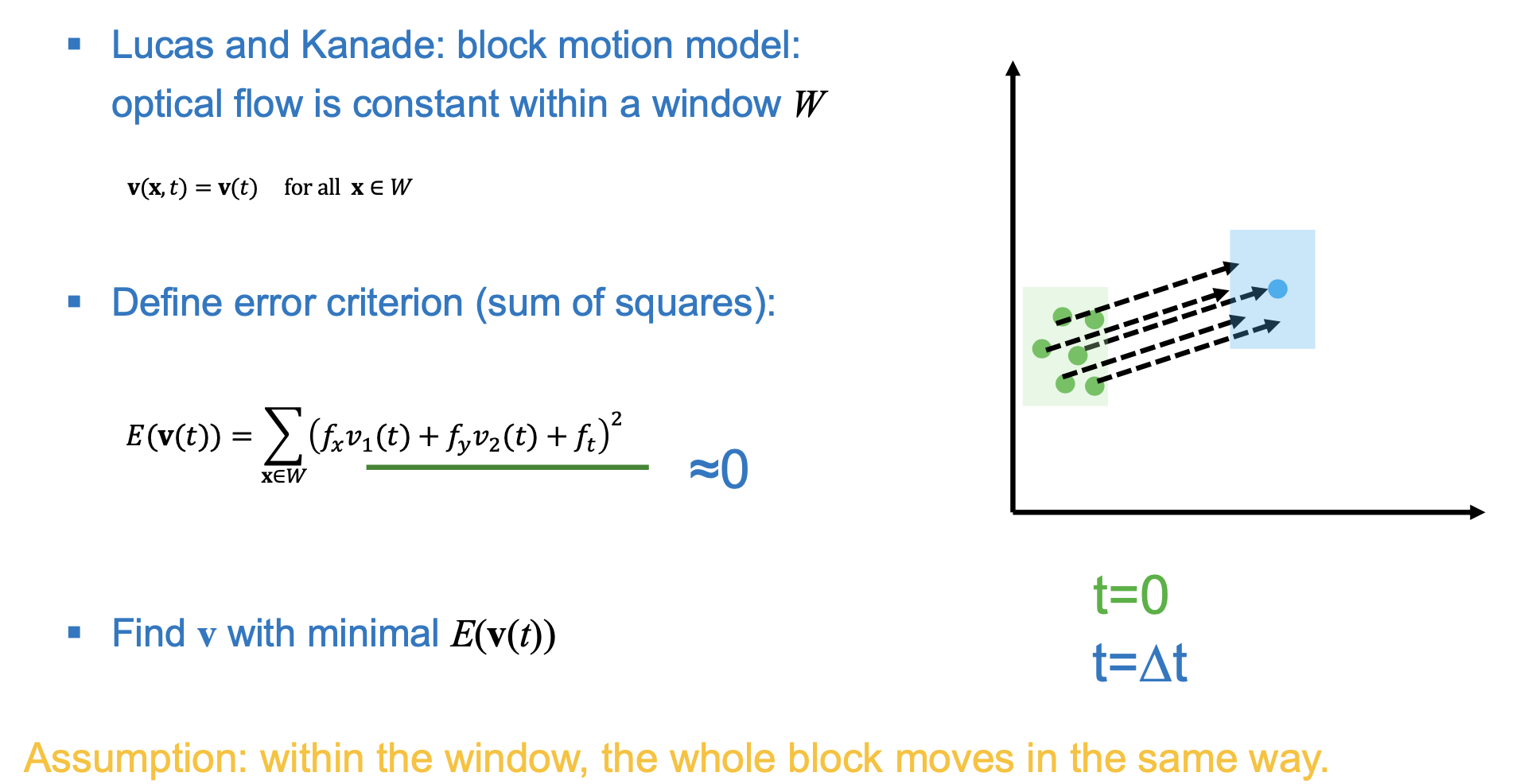

Lucas-Kanade: Point Tracking

Tracking

- Given two or more images of a scene, find the points in the second and subsequent images that correspond to the set of interest points in the first image

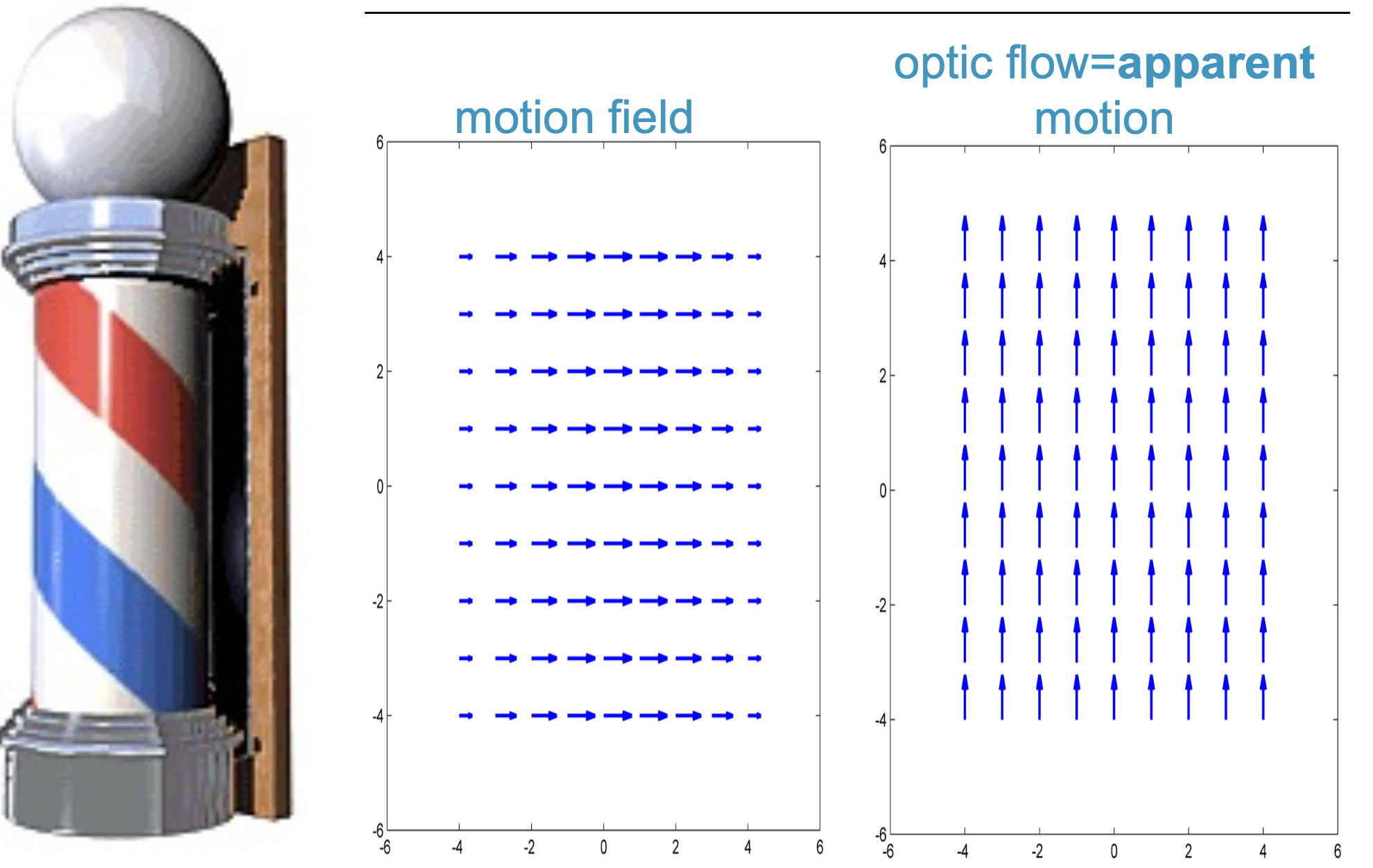

Optical Flow vs. Motion Field

- Optical flow = appearance model

- Motion field = physical world

For the example above, think about how the lines go up as the result of the rotating pole inside.

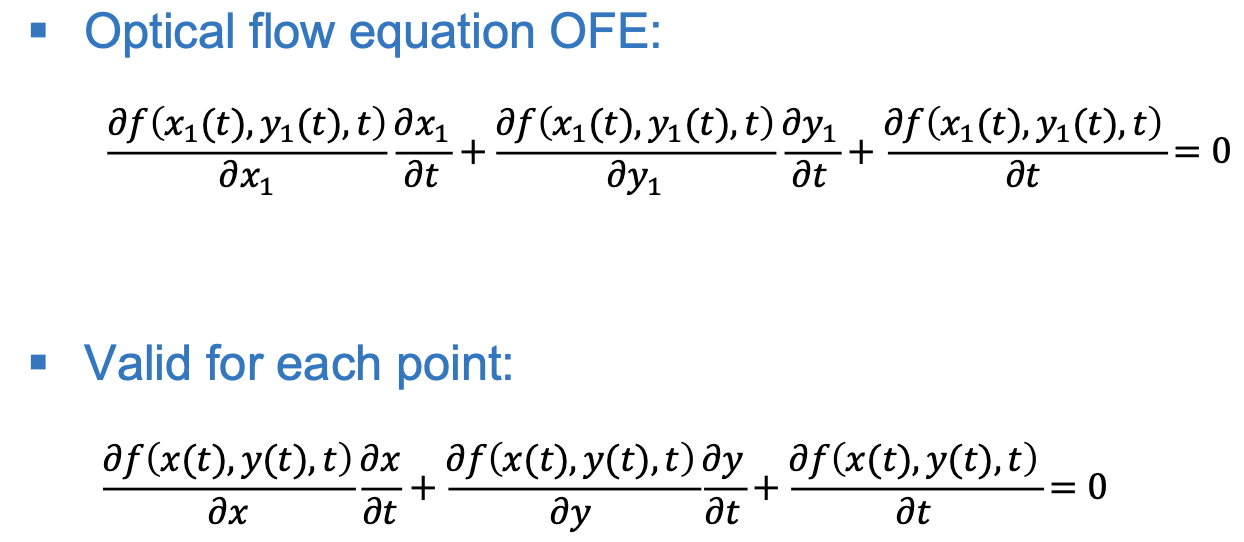

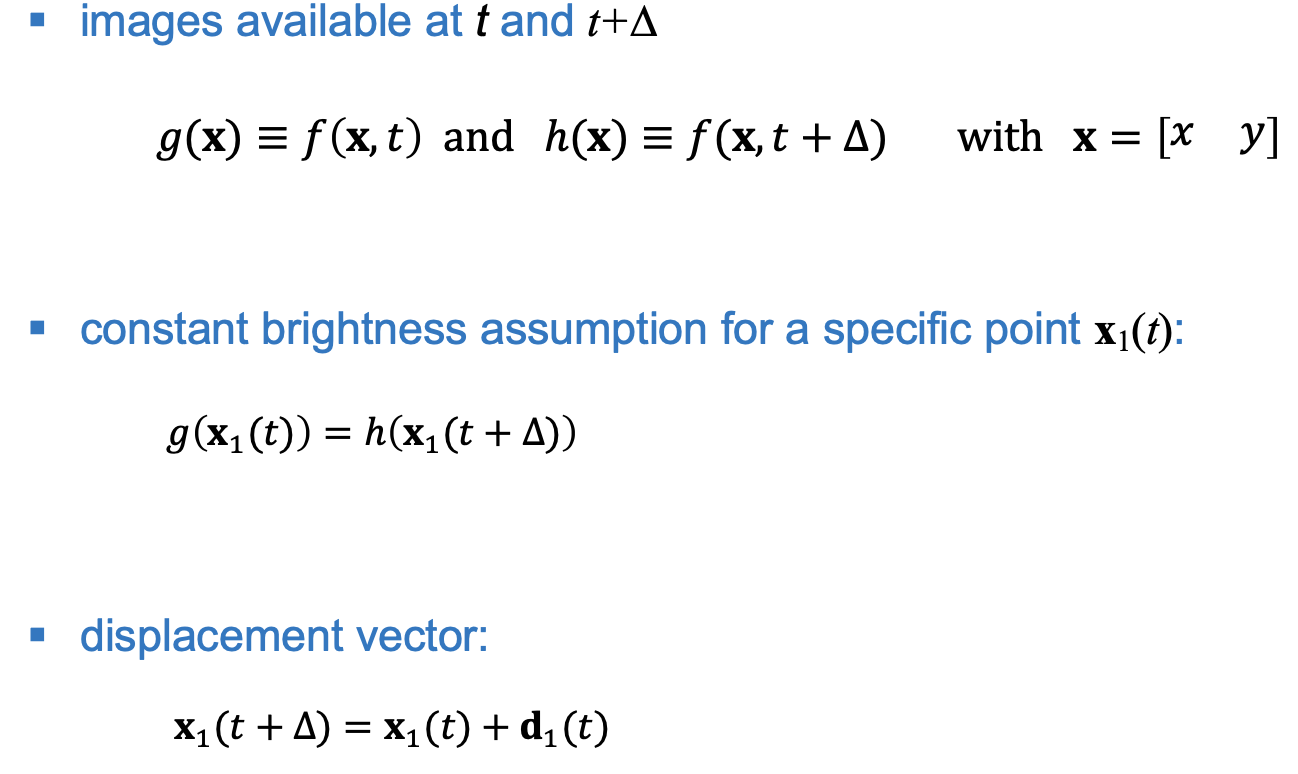

Optical Flow

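- Constant brightness assumption: the intensity of a moving image point stays the same over a short duration $dt$, so its total time derivative is 0: $I_x u + I_y v + I_t = 0$

- $(u, v)$ is the apparent 2D motion (= optical flow) of the image at position $(x, y)$ and time $t$.

- Minimization of $E(u, v) = \sum_{W} (I_x u + I_y v + I_t)^2$:

  - equating partial derivatives to zero

  - solving for $(u, v)$

- The two eigenvalues of $M = \sum_{W} \begin{bmatrix} I_x^2 & I_x I_y \\ I_x I_y & I_y^2 \end{bmatrix}$ must be large

Discrete time

- We minimize SSD with respect to d: $\mathrm{SSD}(\mathbf{d}) = \sum_{\mathbf{x} \in W} \left( I_2(\mathbf{x} + \mathbf{d}) - I_1(\mathbf{x}) \right)^2$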
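A single-window sketch of the Lucas-Kanade estimate: linearize the brightness constancy equation, then solve the 2x2 normal equations for the displacement. The synthetic Gaussian blob and the displacement of (0.3, 0.2) pixels are assumptions chosen so the linearization holds.

```python
import numpy as np

def lucas_kanade(I1, I2):
    """Estimate one displacement d = (dx, dy) for the whole window."""
    Iy, Ix = np.gradient(I1)                 # spatial derivatives (rows, columns)
    It = I2 - I1                             # temporal derivative over one time step
    M = np.array([[np.sum(Ix * Ix), np.sum(Ix * Iy)],
                  [np.sum(Ix * Iy), np.sum(Iy * Iy)]])
    b = -np.array([np.sum(Ix * It), np.sum(Iy * It)])
    return np.linalg.solve(M, b)             # well-posed only if both eigenvalues of M are large

def blob(cx, cy, size=41, sigma=6.0):
    """Smooth synthetic image: a Gaussian blob centered at (cx, cy)."""
    y, x = np.mgrid[0:size, 0:size]
    return np.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))

I1 = blob(20.0, 20.0)
I2 = blob(20.3, 20.2)                        # same blob moved by (0.3, 0.2) pixels
d = lucas_kanade(I1, I2)
print(d)                                     # close to the true shift (0.3, 0.2)
```

For larger displacements the linearization breaks down, which is why practical trackers iterate and use image pyramids.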

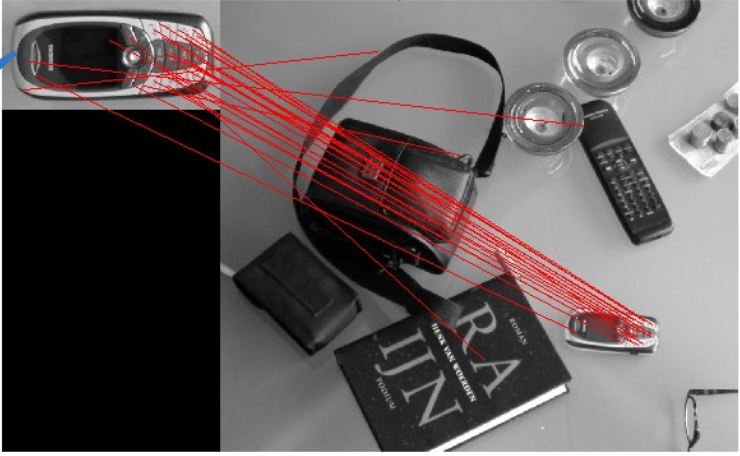

Lecture 10: Key point detection and matching

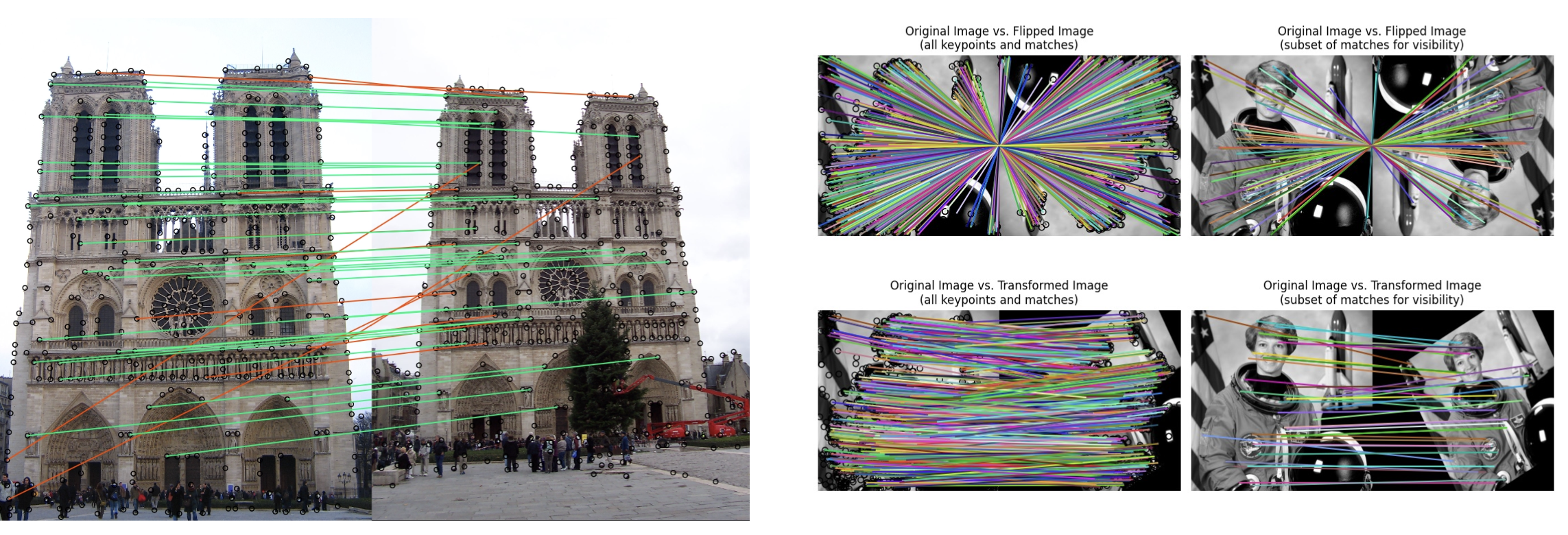

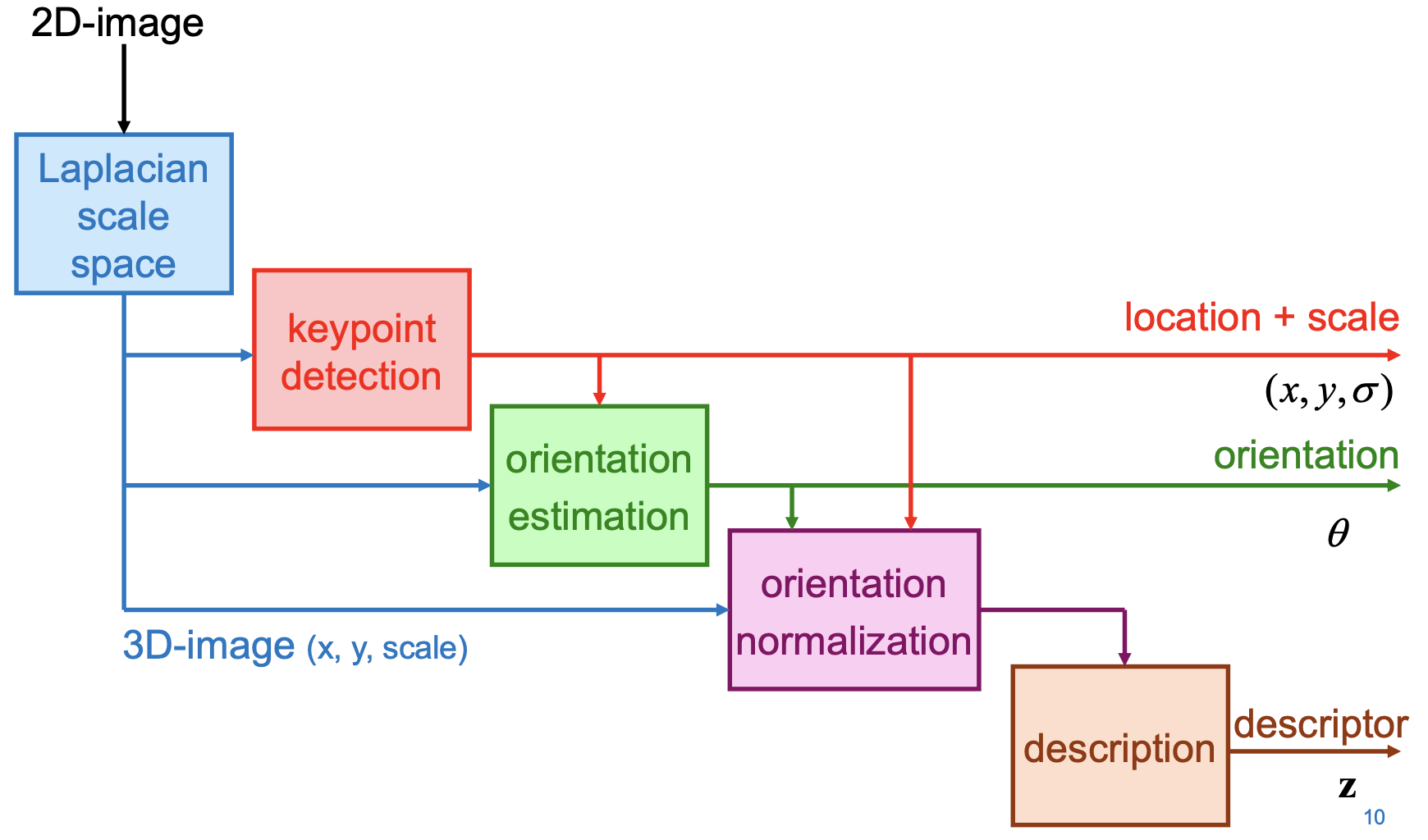

SIFT (Scale Invariant Feature Transform)

Keypoints in SIFT

- set of point features defined in an image

- each key point is attributed with:

- the local orientation

- the scale

- a descriptor (used to identify the local neighbourhood)

- useful properties:

- invariant to image translation, rotation and scaling

- invariant to contrast and brightness

- partially invariant to the 3D camera viewpoint

- distinctive

- stable

- noise insensitive

- zooming the image by a factor $s$:

  - does not change the location of a keypoint

  - changes the scale of a keypoint by a factor $s$

Laplacian of a Gaussian (Inverted Sombrero)

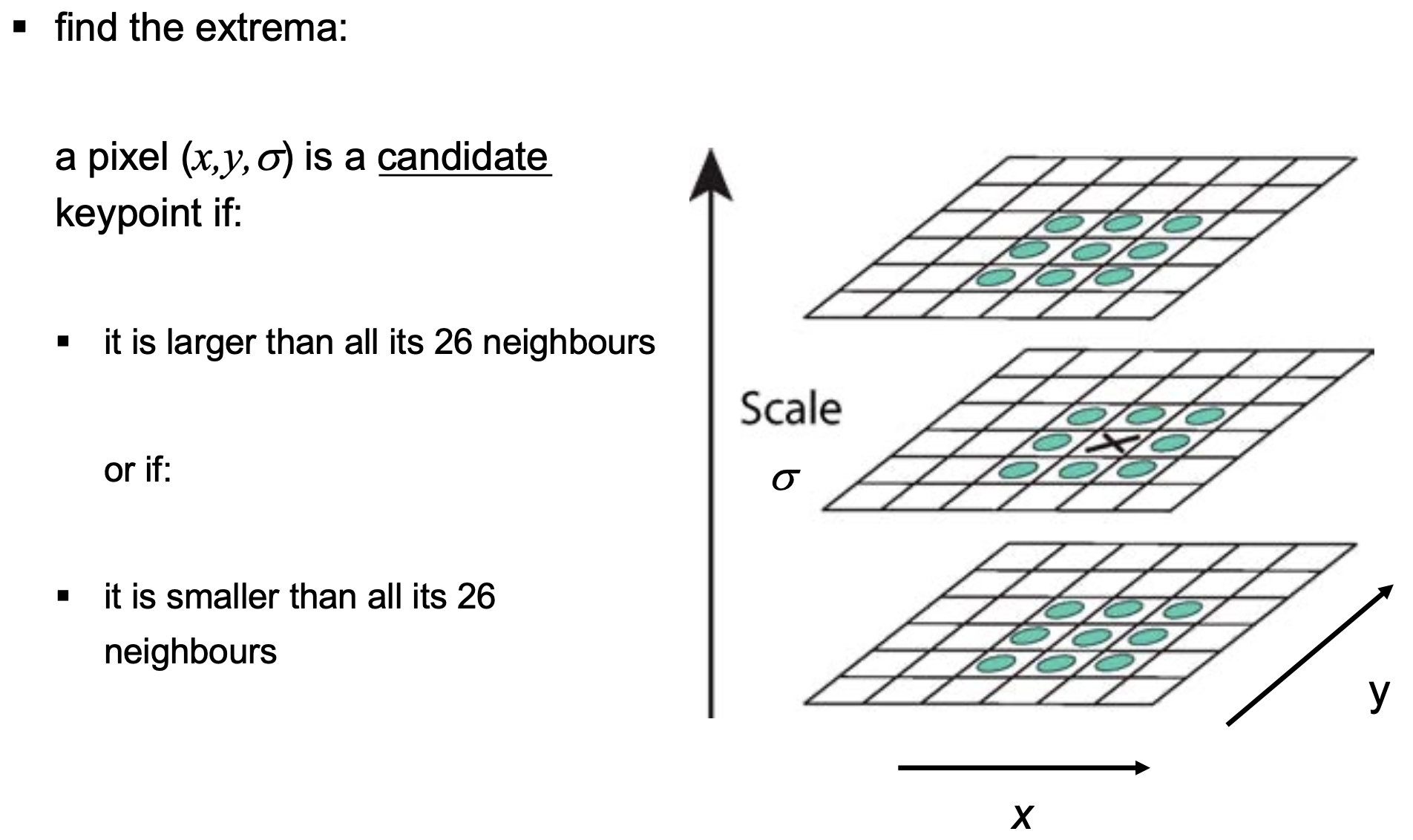

Detection of candidate keypoints

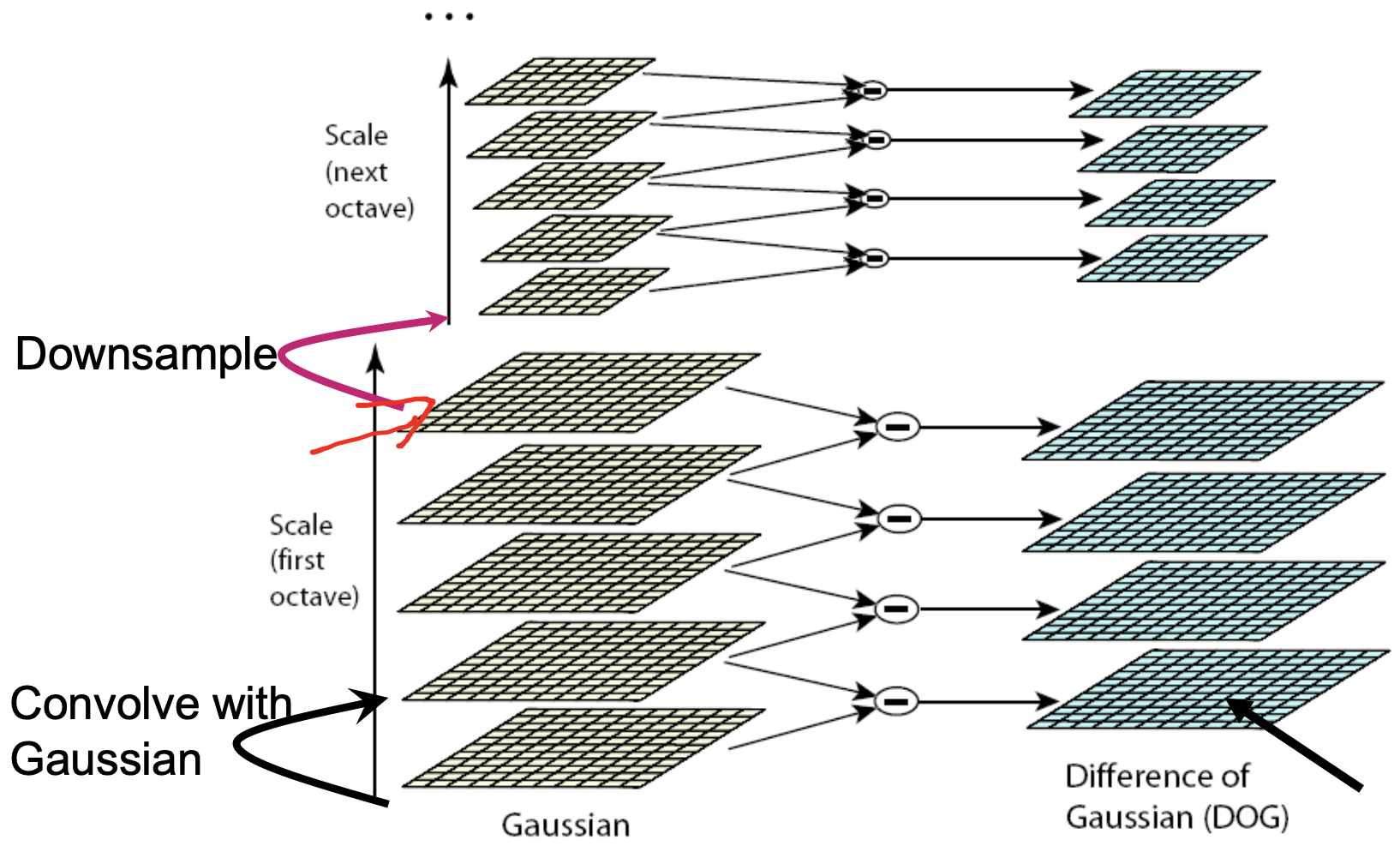

Efficient implementation of the LoG

- approximation of LoG by differences of Gauss (DoG): $g_{k\sigma} - g_\sigma \approx (k - 1)\,\sigma^2\,\nabla^2 g_\sigma$

- cascade of Gaussians

- Representations for matched keypoints are done through:

- adjacency matrix

- bipartite graphs

- table of edges

- table of pointers

- distance table

Applications

- image stitching

- stereo rectification

- landmark detection and matching for visual SLAM

- object recognition

Lecture 11: 3D Vision. Binocular Vision

- Dense stereo

  - reconstruction of 3D surface models of objects

- Sparse stereo

  - 3D information on a small number of points:

    - finding the 3D positions of the points from multiple images

    - finding the 3D pose of a camera relative to the points

    - finding the pose of the camera relative to another camera

    - visual SLAM: finding both the poses of cameras and the 3D positions of points

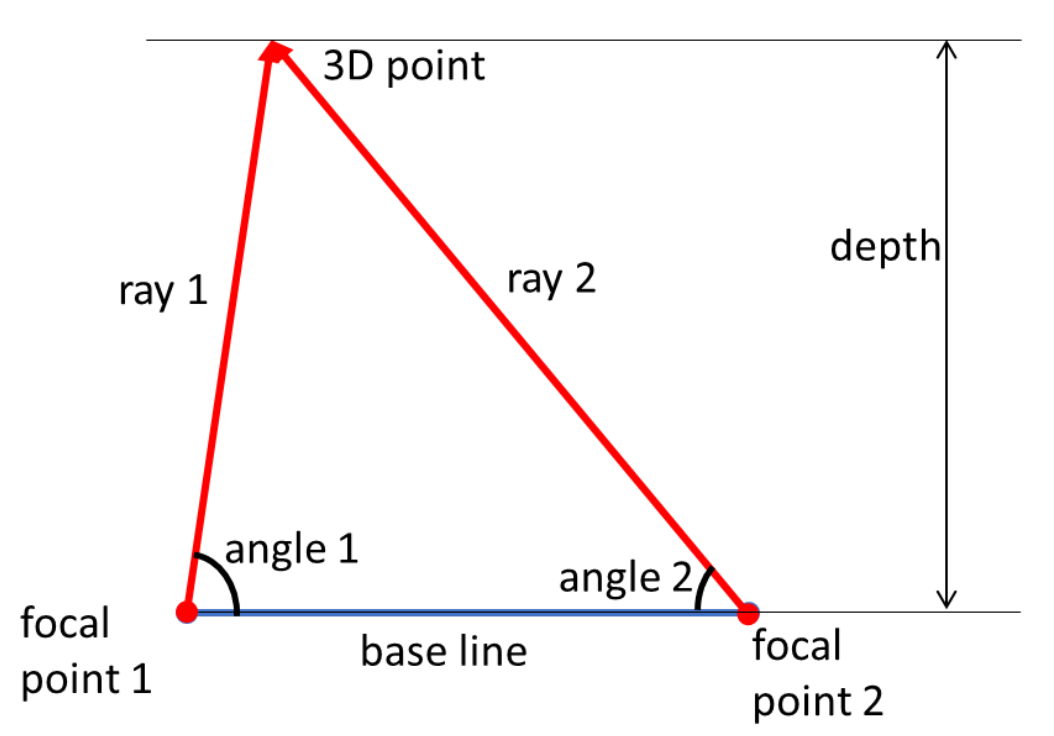

Triangulation

- Key relations:

- Triangulation (base line, two rays)

- Correspondence: representation of 3D point:

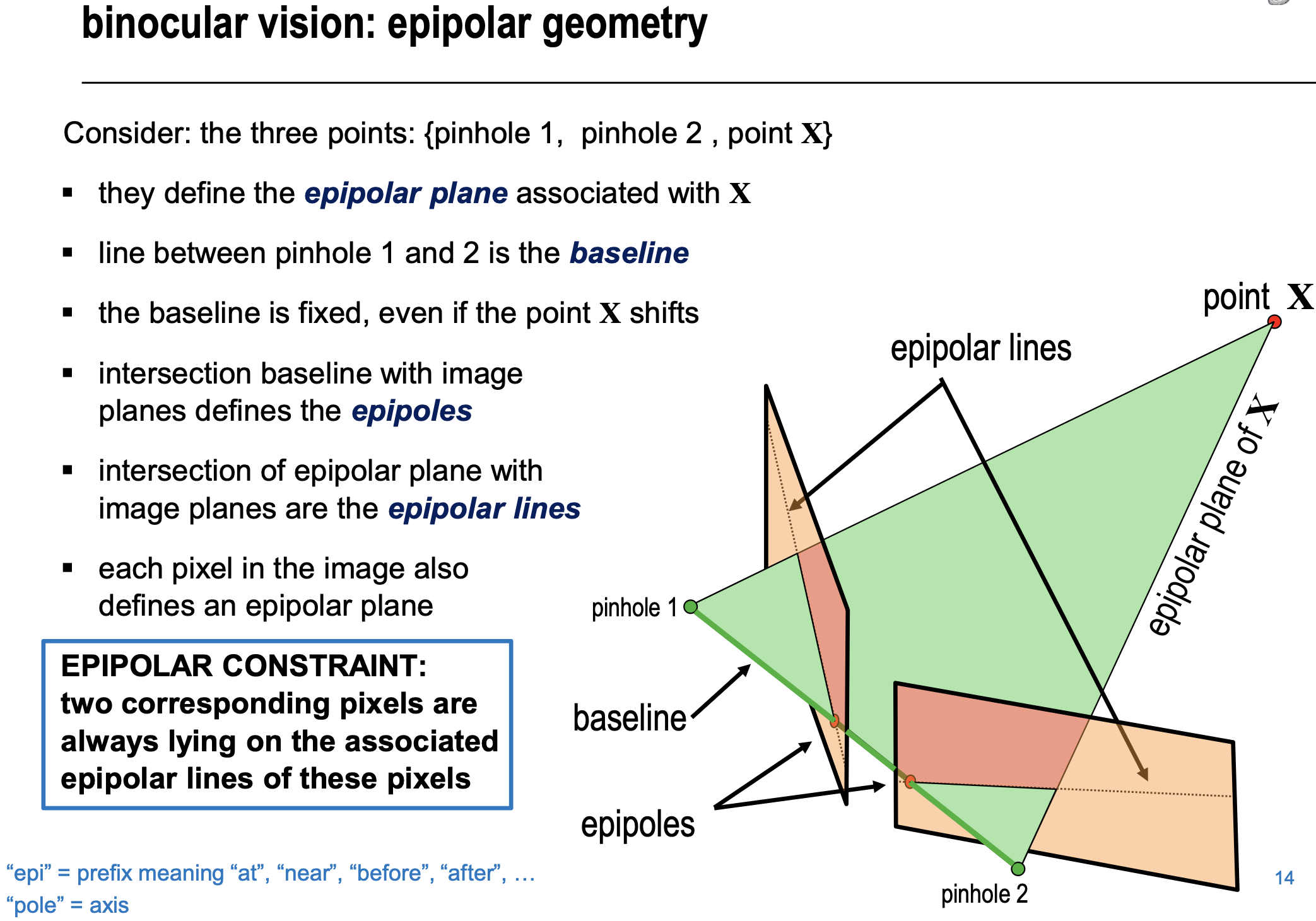

Epipolar Geometry

- How to find corresponding pixels?

- How to reconstruct the 3D position of object?

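- Epipolar constraint expressed with the Essential Matrix: $\mathbf{x}_2^T E \, \mathbf{x}_1 = 0$

- $E$ is the Essential Matrix: $E = [\mathbf{t}]_\times R$ (assuming $R$ and $\mathbf{t}$ map camera-1 coordinates to camera-2 coordinates)

- Epipolar constraint in pixel coordinates: fundamental matrix

  - Pixel coordinates: $\mathbf{p}_i = K_i \mathbf{x}_i$, so $\mathbf{x}_i = K_i^{-1} \mathbf{p}_i$

  - Substitution in $\mathbf{x}_2^T E \, \mathbf{x}_1 = 0$ yields: $\mathbf{p}_2^T K_2^{-T} E K_1^{-1} \mathbf{p}_1 = 0$

  - define $F = K_2^{-T} E K_1^{-1}$, then:

  - Epipolar constraint in pixel coordinates: $\mathbf{p}_2^T F \, \mathbf{p}_1 = 0$

  - $F$ is the fundamental matrix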
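A numerical check of the epipolar constraint for one 3D point. The convention is an assumption: camera-2 coordinates are `R @ X1 + t`, the essential matrix is the cross-product matrix of `t` times `R`, and the fundamental matrix follows by substituting the calibration matrices. The rig parameters are hypothetical.

```python
import numpy as np

def skew(t):
    """Cross-product matrix such that skew(t) @ v equals np.cross(t, v)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])

# Hypothetical stereo rig: X2 = R @ X1 + t
theta = np.deg2rad(5.0)
R = np.array([[np.cos(theta), 0.0, np.sin(theta)],
              [0.0, 1.0, 0.0],
              [-np.sin(theta), 0.0, np.cos(theta)]])
t = np.array([-0.2, 0.0, 0.0])
K1 = K2 = np.array([[800.0, 0.0, 320.0],
                    [0.0, 800.0, 240.0],
                    [0.0, 0.0, 1.0]])

E = skew(t) @ R                                   # essential matrix
F = np.linalg.inv(K2).T @ E @ np.linalg.inv(K1)   # fundamental matrix

X1 = np.array([0.3, -0.1, 4.0])      # 3D point in camera-1 coordinates
X2 = R @ X1 + t                      # same point in camera-2 coordinates
x1, x2 = X1 / X1[2], X2 / X2[2]      # normalized image coordinates
p1, p2 = K1 @ x1, K2 @ x2            # pixel coordinates (homogeneous)

residual_E = x2 @ E @ x1             # zero up to rounding
residual_F = p2 @ F @ p1             # zero up to rounding
```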

Rectification

- geometrical transformation of the images such that the epipoles are moved to infinity in the row-direction.

- simplifies the correspondence problem to a simple 1-D search along rows.

- needs calibration matrices K1 and K2, and fundamental matrix F

Rectification Steps

- determine the rotation axis and rotation angle between camera 1 and camera 2

- rotate camera 1 around this axis over half of the angle in counterclockwise direction

- rotate camera 2 around this axis over half of the angle in the other direction

- determine the direction of the cameras’ x-axes with respect to the baseline vector

- using this direction, rotate the cameras such that their x-axes are aligned with the baseline vector

- Equalize the calibration matrices of both cameras

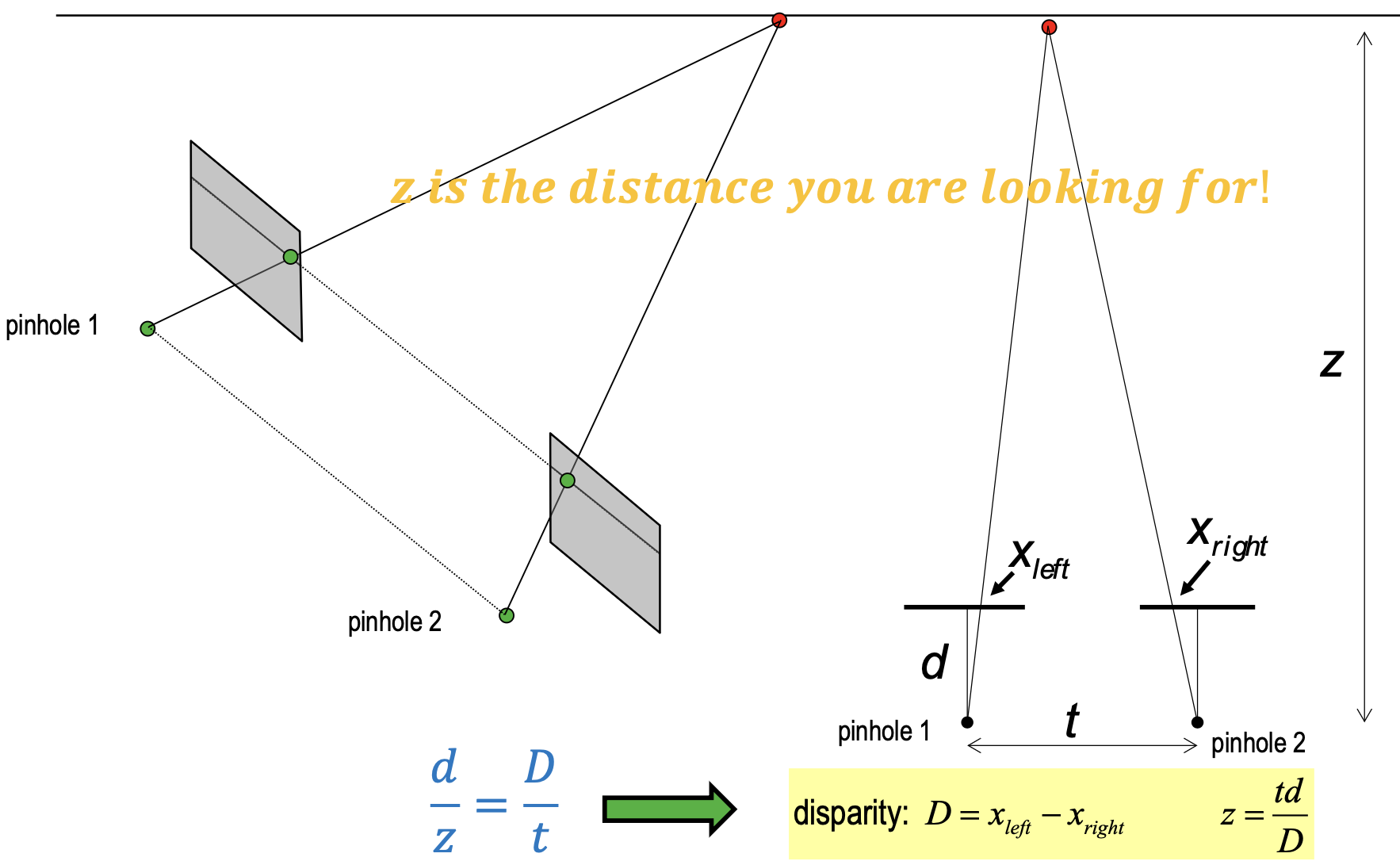

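Disparity: difference between the positions of the same point in the two images

- Here we can see that disparity and depth are inversely proportional: $Z = \frac{f B}{d}$, with focal length $f$, baseline $B$ and disparity $d$

- Disparity is proportional to the baseline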
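A short sketch of the inverse relation between disparity and depth for a rectified pair, assuming the focal length is given in pixels and the baseline in meters. The rig values are hypothetical.

```python
import numpy as np

def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Z = f * B / d for a rectified pair; zero disparity maps to infinity."""
    d = np.asarray(disparity_px, dtype=float)
    with np.errstate(divide="ignore"):
        return np.where(d > 0, focal_px * baseline_m / d, np.inf)

# Hypothetical rig: f = 800 px, B = 0.1 m
depths = depth_from_disparity([40.0, 20.0, 0.0], 800.0, 0.1)
print(depths)   # halving the disparity doubles the depth
```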

Lecture 12: 1D signals and Depth Maps

1D Signals are very often seen in reality

- Earthquake

- Audio

- Temperature

- Bioelectrical Signal

- …

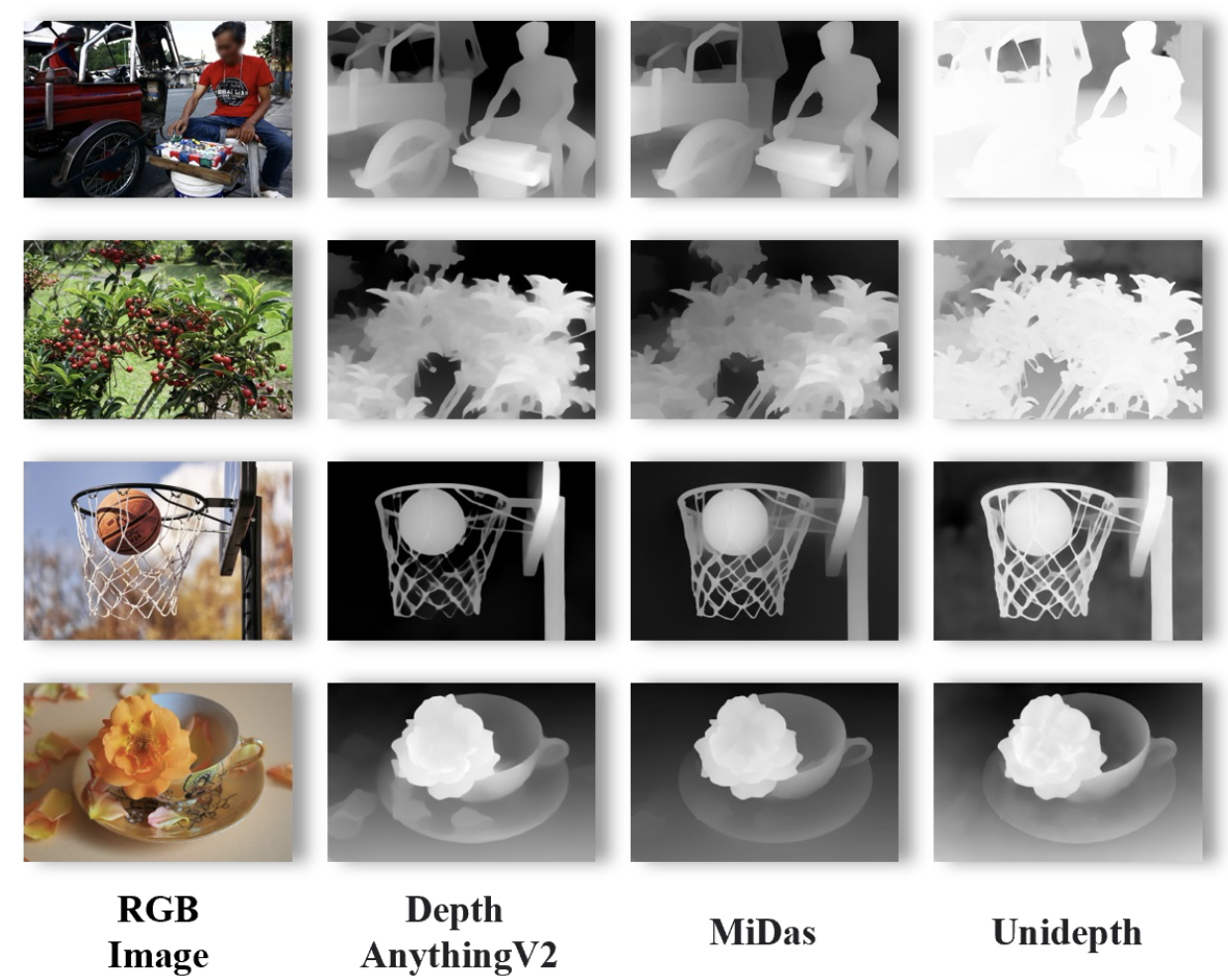

Monocular Depth Estimation

- Key Challenge: Scale Ambiguity

- With only one image, absolute meters are unknown; models often predict relative depth that needs a later scale/shift alignment.

How does the human eye judge near vs. far?

- Occlusion: if one object blocks another, it’s closer.

- Relative / known size: the same object looks smaller when farther; familiar objects act as rulers.

- Linear perspective: parallel lines converge toward a vanishing point.

- Texture & contrast gradients: textures get denser and lower-contrast with distance (aerial haze).

- Lighting & shadows: shadow position/shape reveals spatial layout.

- Depth of field: in-focus plane is sharp; foreground/background blur more.

- Motion parallax: when you move, nearer objects shift faster across your view.