The structure will be:

- Robot and Human modelling

- Intention detection and expression

- Verbal communication

- Decision making

- Learning human behavior

- Task sharing and use cases

- Safety and ergonomics

- Ethics

Lecture 1: Introduction

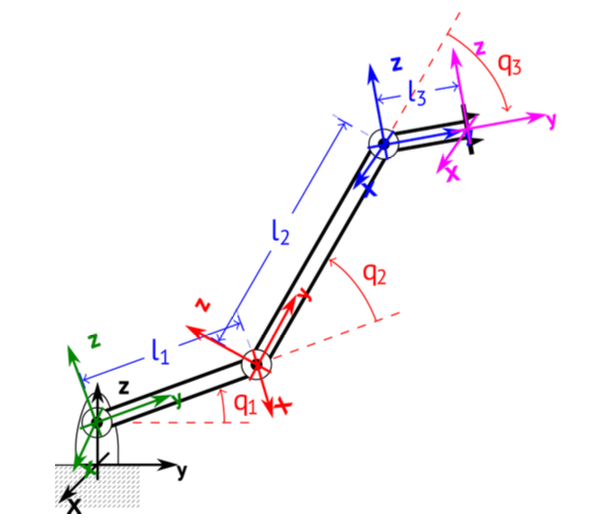

HRI is even more interdisciplinary than Robotics, because it also takes into account the social world and the interactions the robot has with humans.

HRI

- Robotics,

- Philosophy,

- Humans,

- Design,

- AI,

- HCI (Human Computer Interaction) i.e. Sociology & Anthropology

Robotics: Robots navigate and manipulate the physical world

HRI: Robots interact with people in the social world

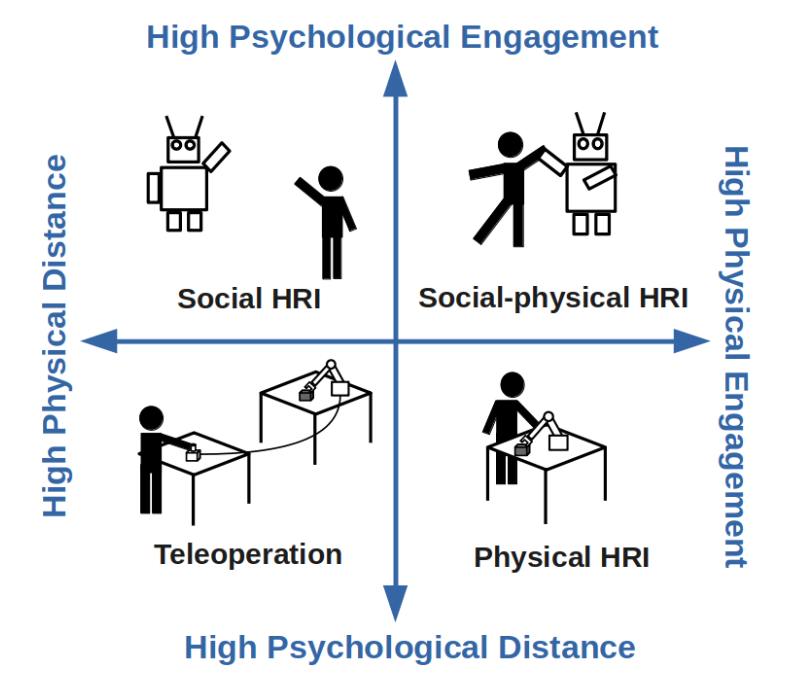

There’s levels to this shit

- Scientists combine the goal of Understanding the World with Explicit Knowledge to develop theories on how humans perceive robots and use the Human axis to conduct controlled behavioral studies.

- Engineers focus on Transforming the World through Technology and Explicit Knowledge, prioritizing the development of robust hardware and reliable software architectures that allow the robot to function.

- Designers bridge the gap by utilizing Implicit Knowledge and the Human axis to ensure the interaction is intuitive, focusing on the "how it feels" aspect of the robot's presence in a social environment.

Lecture 2: Robot Modelling

Robot Modelling

- Robot morphology and types

- Sensors and outputs

- Kinematics

- Challenges

Therefore, we could define a robot as an autonomous machine capable of sensing its environment, carrying out computations to make decisions, and performing actions in the real world.

Hardware: Robot Morphology

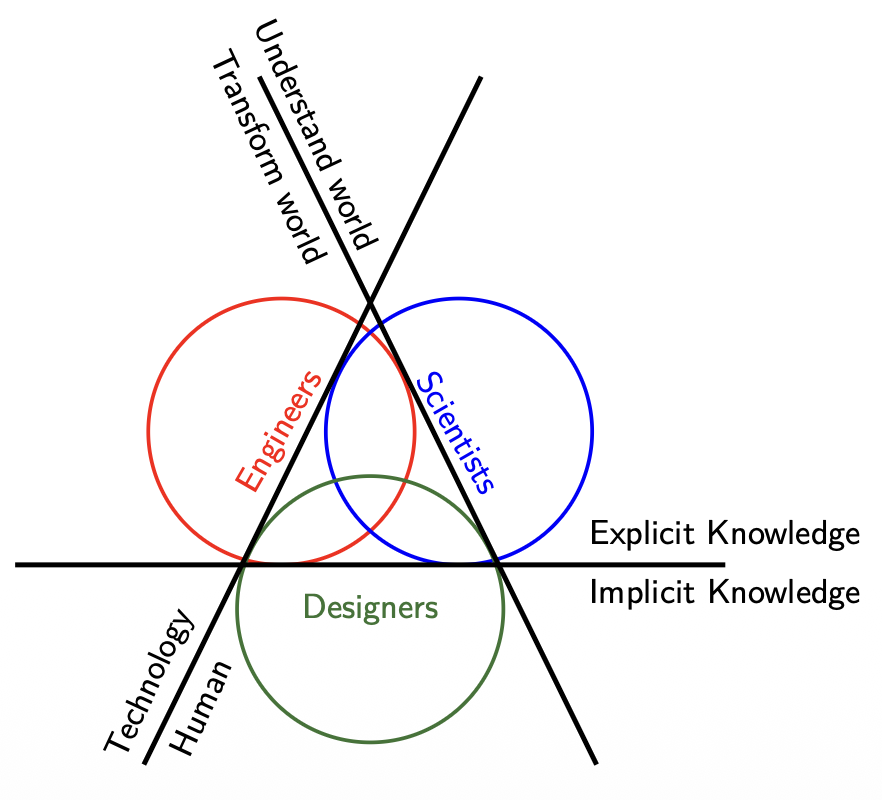

The Uncanny Valley is a critical concept in HRI that dictates how important robot morphology is.

- Affinity and Likeness: As a robot becomes more human-like, our affinity for it increases.

- The Dip: When a robot is “almost” human but not quite perfect, there is a sharp drop in affinity where it becomes creepy or repulsive (labeled as “corpse” or “zombie” levels).

- The Movement Multiplier: Movement (the dashed line) amplifies these feelings. A likable robot becomes more endearing when it moves, but a creepy robot becomes significantly more disturbing.

Software: Sensors and outputs

Here we have 3 possible architectures:

- Reactive (simply sense using the sensors and then act using actuators). Open loop.

- Sense-Plan-Act (Introduce the Planning phase to the prior concept). It’s also closed-loop.

- Behavior-Based (Here we already have decision-making abilities such as avoiding objects or exploring the world).

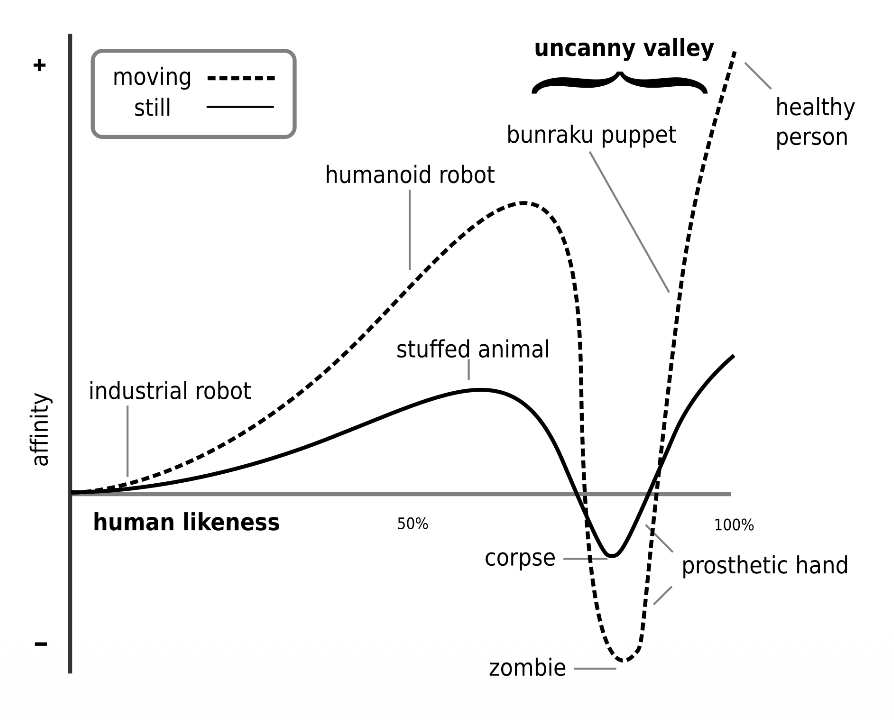

Robot Modelling: Forward Kinematics

We describe the pose of the end-effector using a 4x4 transformation matrix (an affine transformation in homogeneous coordinates). It makes transformations from one frame to another simpler by combining the rotation matrix $R$ and the translation vector $p$ into one matrix:

$$T = \begin{bmatrix} R & p \\ 0 & 1 \end{bmatrix}$$

Forward Kinematics is the process of calculating the final position and orientation of the end effector (the robot’s “hand”) based on the angles of its joints ($q_i$) and the lengths of its links ($l_i$).

- Chaining Transformations: We calculate an individual transformation matrix for each joint ($T_i$) and multiply them together to get the total transformation $T = T_1 T_2 \cdots T_n$.

- The final matrix is a function of joint positions and link lengths: $T = T(q, l)$.

Example

Simply understand what each value represents in the following steps and where it should be inserted if we want a Rotation around an axis or a translation along another.

Step 1: Base Frame to Joint 1

This represents a translation along the Z-axis and a rotation around the X-axis.

Step 2: Joint 1 to Joint 2

This involves a translation along the Y-axis by the length of the first link ($l_1$) and a rotation around the X-axis.

Step 3: Joint 2 to Joint 3

This follows the same pattern, translating by link length $l_2$ and rotating by joint angle $q_3$.

Step 4: Final Tool Tip Translation

This final matrix accounts for the length of the end effector link ($l_3$).

Final Result: Forward Kinematics Matrix ($T = T_1 T_2 T_3 T_4$)

This is the combined matrix representing the total position and orientation of the end effector relative to the base.
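The matrices for the four steps are not reproduced in these notes. As a sketch only, they could look like the following, assuming joint angles $q_1, q_2, q_3$, link lengths $l_1, l_2, l_3$, a base offset along Z called $l_0$ (a hypothetical name), and assuming that steps 3 and 4 translate along Y like step 2:

$$
T_1 = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & \cos q_1 & -\sin q_1 & 0 \\ 0 & \sin q_1 & \cos q_1 & l_0 \\ 0 & 0 & 0 & 1 \end{bmatrix},
\qquad
T_2 = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & \cos q_2 & -\sin q_2 & l_1 \\ 0 & \sin q_2 & \cos q_2 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix}
$$

$$
T_3 = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & \cos q_3 & -\sin q_3 & l_2 \\ 0 & \sin q_3 & \cos q_3 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix},
\qquad
T_4 = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & l_3 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix}
$$

Under these assumptions all rotations are around X, so the angles simply add up in the product $T_1 T_2 T_3 T_4$, and the end-effector position is

$$
y = l_1 \cos q_1 + l_2 \cos(q_1 + q_2) + l_3 \cos(q_1 + q_2 + q_3), \qquad
z = l_0 + l_1 \sin q_1 + l_2 \sin(q_1 + q_2) + l_3 \sin(q_1 + q_2 + q_3)
$$

with $x = 0$.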

Therefore, we can deduce the definition:

Forward Kinematics

The FKM is a transformation matrix, a function of the joint positions and link lengths. If we know these variables, we can calculate the position and orientation of the end effector (or any other point).
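A minimal NumPy sketch of this idea, chaining 4x4 matrices for an arm like the one in the example. The helper names (`rot_x`, `trans`, `forward_kinematics`), the base offset `l[0]`, and the choice of Y as the translation axis for steps 3 and 4 are assumptions made for illustration, not taken from the lecture.

```python
import numpy as np


def rot_x(angle):
    """Homogeneous 4x4 rotation around the X-axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([
        [1.0, 0.0, 0.0, 0.0],
        [0.0, c, -s, 0.0],
        [0.0, s, c, 0.0],
        [0.0, 0.0, 0.0, 1.0],
    ])


def trans(x, y, z):
    """Homogeneous 4x4 translation."""
    T = np.eye(4)
    T[:3, 3] = [x, y, z]
    return T


def forward_kinematics(q, l):
    """q = (q1, q2, q3) joint angles, l = (l0, l1, l2, l3) lengths (l0 is assumed)."""
    T1 = trans(0, 0, l[0]) @ rot_x(q[0])  # base frame -> joint 1
    T2 = trans(0, l[1], 0) @ rot_x(q[1])  # joint 1 -> joint 2
    T3 = trans(0, l[2], 0) @ rot_x(q[2])  # joint 2 -> joint 3
    T4 = trans(0, l[3], 0)                # joint 3 -> tool tip
    return T1 @ T2 @ T3 @ T4


lengths = (0.5, 1.0, 1.0, 0.2)
T = forward_kinematics((0.0, 0.0, 0.0), lengths)
print(T[:3, 3])   # end-effector position in the base frame
print(T[:3, :3])  # end-effector orientation (rotation matrix)
```

With all joint angles at zero the arm is stretched out along Y, so the position is just the sum of the link lengths along Y plus the base offset along Z.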

Denavit-Hartenberg (DH) convention

The Denavit-Hartenberg convention defines the relationship between consecutive joint frames, specifically from joint $i$ to joint $i+1$. It reduces the transformation between links to four specific parameters.

- $d_i$ (Joint offset): The length along the Z-axis from joint i to joint i+1.

- $\theta_i$ (Joint angle): The rotation around the Z-axis between joint i and joint i+1.

- $a_i$ (Link length): The distance along the X-axis from joint i to joint i+1.

- $\alpha_i$ (Link twist): The angle around the X-axis from joint i to joint i+1.
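The four parameters can be turned into one 4x4 matrix per link. The sketch below assumes the classic DH ordering, Rot_z(θ) · Trans_z(d) · Trans_x(a) · Rot_x(α); the modified DH convention uses a different order, so check which one your robot's documentation uses. The function name `dh_transform` and the two-link table are made up for illustration.

```python
import numpy as np


def dh_transform(theta, d, a, alpha):
    """Classic DH link transform: Rot_z(theta) Trans_z(d) Trans_x(a) Rot_x(alpha)."""
    ct, st = np.cos(theta), np.sin(theta)
    ca, sa = np.cos(alpha), np.sin(alpha)
    return np.array([
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ])


# Hypothetical planar 2-link arm; each row is (theta, d, a, alpha).
dh_table = [
    (np.pi / 2, 0.0, 1.0, 0.0),
    (-np.pi / 2, 0.0, 1.0, 0.0),
]

T = np.eye(4)
for row in dh_table:
    T = T @ dh_transform(*row)  # chain the link transforms

print(T[:3, 3])  # end-effector position
```

The first link points along +Y (rotated by 90°), and the second is rotated back by 90°, so it points along +X again.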

Robot Velocity: The Jacobian

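The Jacobian $J(q)$ is specifically defined as a $6 \times n$ matrix, where $n$ is the number of joint velocities (one per joint). It relates joint velocities ($\dot{q}$) to the 6D end-effector velocity vector ($\dot{x} = J(q)\,\dot{q}$) consisting of three linear velocities ($v_x, v_y, v_z$) and three angular velocities ($\omega_x, \omega_y, \omega_z$).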
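As a small illustration, here is a sketch of the 6x2 geometric Jacobian of a planar arm with two revolute joints rotating around Z. For a revolute joint, the linear part of each column is z × (p_end − p_joint) and the angular part is the joint axis z. The arm, the function name `planar_2r_jacobian`, and the default link lengths are assumptions chosen to keep the example short.

```python
import numpy as np


def planar_2r_jacobian(q, l1=1.0, l2=1.0):
    """6x2 geometric Jacobian of a planar 2R arm (both joints rotate around Z)."""
    q1, q2 = q
    p0 = np.zeros(3)                                         # joint 1 origin
    p1 = np.array([l1 * np.cos(q1), l1 * np.sin(q1), 0.0])   # joint 2 origin
    pe = p1 + np.array([l2 * np.cos(q1 + q2), l2 * np.sin(q1 + q2), 0.0])
    z = np.array([0.0, 0.0, 1.0])                            # joint axis

    J = np.zeros((6, 2))
    for i, p in enumerate((p0, p1)):
        J[:3, i] = np.cross(z, pe - p)  # linear velocity contribution
        J[3:, i] = z                    # angular velocity contribution
    return J


q = np.array([0.0, np.pi / 2])
q_dot = np.array([0.1, 0.2])
J = planar_2r_jacobian(q)
twist = J @ q_dot  # (vx, vy, vz, wx, wy, wz)
print(twist)
```

Because the arm is planar, only `vx`, `vy` and `wz` can be non-zero; `wz` is simply the sum of the two joint velocities.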

Inverse Kinematics

The primary distinction between the two models is the direction of the calculation:

Difference between Forward and Inverse Kinematics

- Forward Kinematics: specific coordinate values are given to each joint → where the end-effector will be located

- Inverse Kinematics: desired end-effector position → what the joint coordinate values should be

As a rule of thumb, the end-effector is the terminal component that facilitates the robot’s interaction with its environment to accomplish its specific mission.

Example of Forward and Inverse Kinematics Modelling for a mobile robot (differential drive)

The robot’s state in the environment is defined by its Pose ($x, y, \theta$), and its movement is dictated by the Control Input ($v, \omega$).

- Pose ($x, y, \theta$): The robot’s position $(x, y)$ and its orientation angle $\theta$ in the global frame.

- Control Input ($v, \omega$): Consists of the robot’s linear velocity ($v$) and its angular velocity ($\omega$).

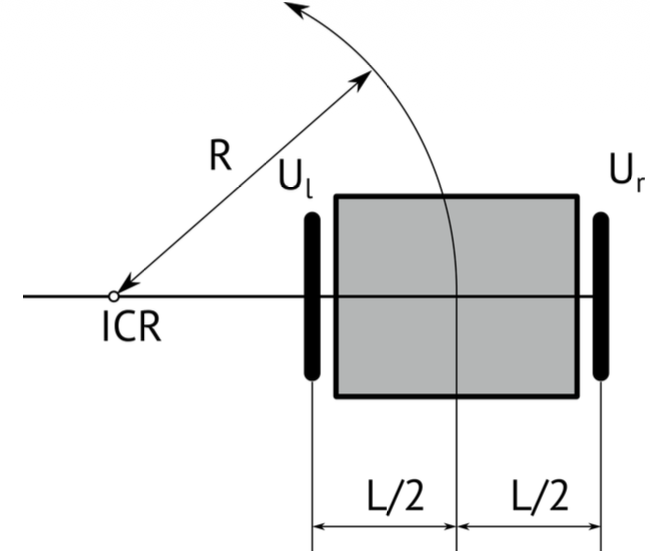

In differential drive, to follow a trajectory, the robot rotates around an Instantaneous Center of Rotation (ICR) which is the point around which the robot appears to be rotating at a specific moment.

Rotation Radius (R): The distance from the ICR to the center of the robot. It is determined by the wheel velocities $v_R$, $v_L$ and the distance between wheels $L$: $R = \frac{L}{2}\,\frac{v_R + v_L}{v_R - v_L}$.

Forward Kinematics:

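If we define the wheel speeds as $v_R = r\,\dot{\phi}_R$ and $v_L = r\,\dot{\phi}_L$ (with $r$ the wheel radius and $L$ the distance between wheels), the final step combines these relations into a single matrix that maps the rotational velocities of the wheels $(\dot{\phi}_R, \dot{\phi}_L)$ to the robot’s overall velocities $(v, \omega)$:

$$\begin{bmatrix} v \\ \omega \end{bmatrix} = \begin{bmatrix} r/2 & r/2 \\ r/L & -r/L \end{bmatrix} \begin{bmatrix} \dot{\phi}_R \\ \dot{\phi}_L \end{bmatrix}$$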

Inverse Kinematics:

Inverse kinematics for a differential drive robot involves calculating the individual wheel velocities ($\dot{\phi}_R, \dot{\phi}_L$) required to achieve a desired global robot motion $(v, \omega)$ or a specific rotation radius $R$.

It’s basically writing the output in terms of the input.
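A minimal sketch of both directions for a differential drive, using the standard relations v = r(φ̇_R + φ̇_L)/2 and ω = r(φ̇_R − φ̇_L)/L. The symbol names (`r` for wheel radius, `L` for the distance between wheels) and the numeric values are assumptions for illustration.

```python
import numpy as np


def wheel_to_body_matrix(r, L):
    """Matrix mapping wheel rates (phi_dot_R, phi_dot_L) to body velocities (v, omega)."""
    return np.array([
        [r / 2.0, r / 2.0],
        [r / L, -r / L],
    ])


def forward_kinematics(phi_dot, r, L):
    """Wheel rates -> (v, omega)."""
    return wheel_to_body_matrix(r, L) @ phi_dot


def inverse_kinematics(body_vel, r, L):
    """(v, omega) -> wheel rates, i.e. the same relation solved for the input."""
    return np.linalg.solve(wheel_to_body_matrix(r, L), body_vel)


r, L = 0.05, 0.3                   # wheel radius and wheel separation in metres (assumed)
desired = np.array([0.5, 1.0])     # v = 0.5 m/s, omega = 1.0 rad/s
phi_dot = inverse_kinematics(desired, r, L)
print(phi_dot)                     # required wheel rates (right, left) in rad/s
print(forward_kinematics(phi_dot, r, L))  # recovers the desired (v, omega)
print(desired[0] / desired[1])     # rotation radius R = v / omega
```

The right wheel has to spin faster than the left one here, which is what makes the robot turn counter-clockwise (positive ω).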

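And if we define the kinematics model in the world frame in terms of a homogeneous transformation (rotating the body-frame velocities by the heading $\theta$), we get

$$\begin{bmatrix} \dot{x} \\ \dot{y} \\ \dot{\theta} \end{bmatrix} = \begin{bmatrix} \cos\theta & -\sin\theta & 0 \\ \sin\theta & \cos\theta & 0 \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} v \\ 0 \\ \omega \end{bmatrix} = \begin{bmatrix} v\cos\theta \\ v\sin\theta \\ \omega \end{bmatrix}$$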

How do we estimate the pose when we have noise? Slap a Kalman Filter

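$$x_{k+1} = f(x_k, u_k) + w_k, \qquad z_k = h(x_k) + v_k$$

where $w_k$ is the process noise and $v_k$ is the measurement noise (here $x_k$ is the full state vector, and $v_k$ is a noise term, not the linear velocity).

The noise signals $w_k$, $v_k$ are considered to be normally distributed with zero means and covariance matrices $Q$ and $R$ respectively. We use covariance matrices because we have multiple states and have to describe the noise on each of them, including how those noises are correlated.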

Basically, it’s a way to introduce uncertainty in our modelling.
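To make the predict/update cycle concrete, here is a sketch of one step of a linear Kalman filter on a 2D position. It assumes a linear model x_{k+1} = A x_k + B u_k + w_k and z_k = H x_k + v_k with noise covariances Q and R; all matrices and numbers are made up for illustration. The full pose (x, y, θ) of a differential drive is nonlinear in θ, so in practice it would typically need an Extended Kalman Filter rather than this linear version.

```python
import numpy as np


def kalman_step(x, P, u, z, A, B, H, Q, R):
    """One predict + update cycle of a linear Kalman filter."""
    # Predict: push the state and its uncertainty through the motion model.
    x_pred = A @ x + B @ u
    P_pred = A @ P @ A.T + Q

    # Update: blend prediction and measurement using the Kalman gain.
    S = H @ P_pred @ H.T + R                 # innovation covariance
    K = P_pred @ H.T @ np.linalg.inv(S)      # Kalman gain
    x_new = x_pred + K @ (z - H @ x_pred)
    P_new = (np.eye(len(x)) - K @ H) @ P_pred
    return x_new, P_new


dt = 0.1
A = np.eye(2)            # position stays where it is ...
B = dt * np.eye(2)       # ... plus velocity command times dt
H = np.eye(2)            # we measure the position directly
Q = 0.01 * np.eye(2)     # process noise covariance (assumed)
R = 0.25 * np.eye(2)     # measurement noise covariance (assumed)

x0 = np.zeros(2)
P0 = np.eye(2)
u = np.array([1.0, 0.0])       # commanded velocity
z = np.array([0.12, -0.01])    # noisy position measurement

x1, P1 = kalman_step(x0, P0, u, z, A, B, H, Q, R)
print(x1)   # estimate lies between the prediction (0.1, 0) and the measurement
print(P1)   # uncertainty shrinks compared to P0
```

The gain K decides how much to trust the measurement: a large R (noisy sensor) pulls the estimate toward the prediction, a large Q (unreliable model) pulls it toward the measurement.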

In the ROS ecosystem, localization is organized through a standardized hierarchy of coordinate frames to ensure different sensors and algorithms can communicate effectively. This structure follows a specific chain: earth → map → odom → base_link.

- earth: Can be used for connecting multiple robots on different maps

- map: Calculated based on discontinuous sensors (e.g. GPS)

- odom: Calculated based on continuous sensors (e.g. IMUs)

- base_link: Attached to the robot, with axes pointing forward (x), left (y), and up (z)

There are also two relevant ROS standards (REPs, ROS Enhancement Proposals):

- REP 103: Defines standard units of measure and coordinate conventions.

- REP 105: Specifically defines the coordinate frames for mobile platforms mentioned above.
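The frame chain is just a product of transforms. The sketch below uses plain NumPy with 2D homogeneous matrices, not the actual ROS tf2 API, to show how a map → odom transform and an odom → base_link transform combine into the robot pose in the map frame. The helper `se2` and all numeric values are assumptions for illustration.

```python
import numpy as np


def se2(x, y, theta):
    """2D homogeneous transform (rotation by theta, then translation by (x, y))."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([
        [c, -s, x],
        [s, c, y],
        [0.0, 0.0, 1.0],
    ])


# map -> odom: correction estimated by localization (assumed values)
T_map_odom = se2(2.0, 1.0, np.pi / 2)
# odom -> base_link: pose integrated from odometry (assumed values)
T_odom_base = se2(1.0, 0.0, 0.0)

# Chaining the two gives the robot pose in the map frame.
T_map_base = T_map_odom @ T_odom_base
x, y = T_map_base[:2, 2]
theta = np.arctan2(T_map_base[1, 0], T_map_base[0, 0])
print(x, y, theta)
```

Splitting the chain this way lets the odom → base_link part stay smooth and continuous, while the map → odom part absorbs the occasional jumps coming from discontinuous sensors.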

Lagrangian of a robot

The Lagrangian of a robot provides a condensed way to describe its dynamic behavior, relating the forces or torques acting on the joints to the resulting motion.

The general dynamic equation:

$$M(q)\,\ddot{q} + C(q, \dot{q})\,\dot{q} + G(q) = \tau$$

- The matrix $M(q)$ contains information about the inertia of the system, and therefore contains all the masses and moments of inertia.

- The matrix $C(q, \dot{q})$ has elements related to the centrifugal and Coriolis terms.

- $G(q)$ (Gravity Vector): This term represents the dependence of the robot’s potential energy on its position, accounting for gravity.

- $\tau$ (Torque): The vector of generalized forces or torques applied to the joints

The equation above is the inverse dynamics, where we want to know what torque $\tau$ should be applied to achieve a specific acceleration $\ddot{q}$. You use this to determine how much torque your motors must output to move the robot in a specific way.

The forward dynamics tells us what acceleration $\ddot{q}$ we get if we apply a specific torque $\tau$: $\ddot{q} = M(q)^{-1}\left(\tau - C(q, \dot{q})\,\dot{q} - G(q)\right)$. You use this primarily for simulation to see how the robot will actually react to motor inputs.
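A minimal sketch of both directions for the simplest possible "robot": a single pendulum (one joint, point mass `m` at distance `l`, angle measured from the downward vertical). For this system M = m l², C = 0 and G = m g l sin(q). The pendulum model and all parameter values are assumptions for illustration; a real manipulator would have full matrices.

```python
import numpy as np

m, l, g = 1.0, 0.5, 9.81  # mass [kg], length [m], gravity [m/s^2] (assumed values)


def M(q):
    """Inertia matrix (1x1 for a single pendulum)."""
    return np.array([[m * l**2]])


def C(q, q_dot):
    """Coriolis/centrifugal matrix (zero for a single joint)."""
    return np.zeros((1, 1))


def G(q):
    """Gravity vector."""
    return np.array([m * g * l * np.sin(q[0])])


def inverse_dynamics(q, q_dot, q_ddot):
    """Torque needed to achieve the acceleration q_ddot."""
    return M(q) @ q_ddot + C(q, q_dot) @ q_dot + G(q)


def forward_dynamics(q, q_dot, tau):
    """Acceleration resulting from the applied torque tau."""
    return np.linalg.solve(M(q), tau - C(q, q_dot) @ q_dot - G(q))


q = np.array([np.pi / 2])   # pendulum held horizontally
q_dot = np.array([0.0])
q_ddot = np.array([2.0])    # desired acceleration

tau = inverse_dynamics(q, q_dot, q_ddot)
print(tau)                                  # torque the motor must provide
print(forward_dynamics(q, q_dot, tau))      # recovers the desired acceleration
```

`forward_dynamics` solves the linear system instead of explicitly inverting M, which is the usual choice numerically; in a simulator you would integrate its output over time to get velocities and positions.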